Compare commits

merge into: scisharp:master


pull from: scisharp:v0.20-tensorflow2.3


13 Commits


| SHA1 | Message | Date |
|---|---|---|
| 954bbe597a | disable TF_UpdateEdge. | 5 years ago |
| a93636b158 | Add MethodBoundaryAspect.Fody. | 5 years ago |
| 4726a7dbd4 | Fix get operation by pointer. | 5 years ago |
| 2431f840dd | fix image resize. | 5 years ago |
| 050fd2c63e | Add ConcreteFunction. | 5 years ago |
| 6c72af1503 | Add ConcreteFunction to support dataset map. | 5 years ago |
| e7da957bcb | add FuncGraph. | 5 years ago |
| 8579575706 | add function test. | 5 years ago |
| c7642ab08e | update docs of constant. | 5 years ago |
| 293f202589 | update document. | 5 years ago |
| 375fe28f57 | Add TF_UpdateEdge APIs. | 5 years ago |
| f226ad704f | add overload for Layer call function, be able to input array and return array. | 5 years ago |
| c6c1125f54 | Chagne return type to Tensor for assign_add. | 5 years ago |

79 changed files with 1502 additions and 291 deletions


| Changes | File |
|---|---|
| +45 -9 | docs/source/Constant.md |
| +2 -1 | docs/source/EagerMode.md |
| +1 -1 | docs/source/Graph.md |
| +14 -21 | docs/source/HelloWorld.md |
| +10 -9 | docs/source/Tensor.md |
| +41 -0 | docs/source/_static/constant/n-index-formula-offset.svg |
| +33 -0 | docs/source/_static/constant/n-index-formula.svg |
| BIN | docs/source/_static/contiguous-block-of-memory-ndarray-example-1.png |
| BIN | docs/source/_static/contiguous-block-of-memory.png |
| BIN | docs/source/_static/tensor-constant-ndarray.png |
| BIN | docs/source/_static/tensor-naming.png |
| +17 -0 | src/TensorFlowNET.Console/MemoryTestingCases.cs |
| +3 -0 | src/TensorFlowNET.Console/Program.cs |
| +1 -1 | src/TensorFlowNET.Console/TensorFlowNET.Console.csproj |
| +4 -0 | src/TensorFlowNET.Core/APIs/tf.array.cs |
| +26 -0 | src/TensorFlowNET.Core/APIs/tf.autograph.cs |
| +29 -15 | src/TensorFlowNET.Core/APIs/tf.control_flow.cs |
| +3 -0 | src/TensorFlowNET.Core/APIs/tf.image.cs |
| +1 -6 | src/TensorFlowNET.Core/APIs/tf.nn.cs |
| +3 -0 | src/TensorFlowNET.Core/APIs/tf.train.cs |
| +3 -0 | src/TensorFlowNET.Core/Data/DatasetManager.cs |
| +1 -1 | src/TensorFlowNET.Core/Data/DatasetV2.cs |
| +6 -4 | src/TensorFlowNET.Core/Data/MapDataset.cs |
| +10 -0 | src/TensorFlowNET.Core/Data/TensorSliceDataset.cs |
| +5 -1 | src/TensorFlowNET.Core/Eager/EagerRunner.TFE_FastPathExecute.cs |
| +1 -1 | src/TensorFlowNET.Core/Eager/EagerTensor.Creation.cs |
| +6 -0 | src/TensorFlowNET.Core/Eager/EagerTensor.cs |
| +31 -0 | src/TensorFlowNET.Core/Eager/c_api.eager.cs |
| +58 -0 | src/TensorFlowNET.Core/Functions/ConcreteFunction.cs |
| +4 -1 | src/TensorFlowNET.Core/Functions/c_api.function.cs |
| +1 -0 | src/TensorFlowNET.Core/Gradients/gradients_util.cs |
| +45 -0 | src/TensorFlowNET.Core/Graphs/AutoGraph.cs |
| +80 -0 | src/TensorFlowNET.Core/Graphs/AutoGraphAttribute.cs |
| +61 -0 | src/TensorFlowNET.Core/Graphs/FuncGraph.cs |
| +1 -1 | src/TensorFlowNET.Core/Graphs/c_api.graph.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Engine/Flatten.cs |
| +37 -3 | src/TensorFlowNET.Core/Keras/Engine/Layer.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/BatchNormalization.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/Conv.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/Dense.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/Dropout.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/Embedding.cs |
| +2 -2 | src/TensorFlowNET.Core/Keras/Layers/LSTM.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/Pooling2D.cs |
| +1 -1 | src/TensorFlowNET.Core/Keras/Layers/Rescaling.cs |
| +6 -3 | src/TensorFlowNET.Core/Keras/Preprocessings/Preprocessing.paths_and_labels_to_dataset.cs |
| +43 -6 | src/TensorFlowNET.Core/Layers/Layer.cs |
| +1 -1 | src/TensorFlowNET.Core/Operations/ControlFlows/WhileContext.cs |
| +2 -2 | src/TensorFlowNET.Core/Operations/NnOps/BasicLSTMCell.cs |
| +3 -3 | src/TensorFlowNET.Core/Operations/NnOps/BasicRNNCell.cs |
| +1 -1 | src/TensorFlowNET.Core/Operations/NnOps/rnn.cs |
| +1 -1 | src/TensorFlowNET.Core/Operations/Operation.Instance.cs |
| +3 -1 | src/TensorFlowNET.Core/Operations/Operation.cs |
| +25 -2 | src/TensorFlowNET.Core/Operations/control_flow_ops.cs |
| +3 -2 | src/TensorFlowNET.Core/Operations/dataset_ops.cs |
| +44 -25 | src/TensorFlowNET.Core/Operations/image_ops_impl.cs |
| +13 -5 | src/TensorFlowNET.Core/Tensorflow.Binding.csproj |
| +2 -0 | src/TensorFlowNET.Core/Tensors/Tensor.Creation.cs |
| +1 -1 | src/TensorFlowNET.Core/Tensors/Tensor.Explicit.cs |
| +1 -2 | src/TensorFlowNET.Core/Tensors/Tensor.cs |
| +12 -3 | src/TensorFlowNET.Core/Tensors/tensor_util.cs |
| +1 -1 | src/TensorFlowNET.Core/Training/Optimizer.cs |
| +2 -2 | src/TensorFlowNET.Core/Variables/BaseResourceVariable.cs |
| +2 -2 | src/TensorFlowNET.Core/Variables/IVariableV1.cs |
| +2 -2 | src/TensorFlowNET.Core/Variables/RefVariable.cs |
| +67 -64 | src/TensorFlowNET.Core/Variables/ResourceVariable.cs |
| +1 -1 | src/TensorFlowNET.Core/Variables/state_ops.cs |
| +2 -0 | src/TensorFlowNET.Core/ops.cs |
| +1 -0 | src/TensorFlowNet.Benchmarks/Tensorflow.Benchmark.csproj |
| +4 -4 | test/TensorFlowNET.UnitTest/Dataset/DatasetTest.cs |
| +1 -2 | test/TensorFlowNET.UnitTest/EagerModeTestBase.cs |
| +70 -3 | test/TensorFlowNET.UnitTest/ImageTest.cs |
| +54 -0 | test/TensorFlowNET.UnitTest/ManagedAPI/ControlFlowApiTest.cs |
| +70 -0 | test/TensorFlowNET.UnitTest/ManagedAPI/FunctionApiTest.cs |
| +456 -9 | test/TensorFlowNET.UnitTest/NativeAPI/CApiFunctionTest.cs |
| +2 -1 | test/TensorFlowNET.UnitTest/NativeAPI/CSession.cs |
| +7 -0 | test/TensorFlowNET.UnitTest/NativeAPI/c_test_util.cs |
| +6 -1 | test/TensorFlowNET.UnitTest/Tensorflow.UnitTest.csproj |
| +0 -56 | test/TensorFlowNET.UnitTest/img_test/TestCrop.cs |

docs/source/Constant.md (+45 -9)

| @@ -1,6 +1,6 @@ | |||||

| # Chapter. Constant | |||||

| # Chapter 2. Constant | |||||

| In TensorFlow, a constant is a special Tensor that cannot be modified while the graph is running. Like in a linear model $\tilde{y_i}=\boldsymbol{w}x_i+b$, constant $b$ can be represented as a Constant Tensor. Since the constant is a Tensor, it also has all the data characteristics of Tensor, including: | |||||

| In TensorFlow, a constant is a special Tensor that cannot be modified while the graph is running. Like in a linear model `y = ax + b`, constant `b` can be represented as a `Constant` Tensor. Since the constant is a Tensor, it also has all the data characteristics of Tensor, including: | |||||

| * value: scalar value or constant list matching the data type defined in TensorFlow; | * value: scalar value or constant list matching the data type defined in TensorFlow; | ||||

| * dtype: data type; | * dtype: data type; | ||||

| @@ -9,9 +9,9 @@ In TensorFlow, a constant is a special Tensor that cannot be modified while the | |||||

| ##### How to create a Constant | |||||

| ### How to create a Constant | |||||

| TensorFlow provides a handy function to create a Constant. In TF.NET, you can use the same function name `tf.constant` to create it. TF.NET takes the same name as python binding to the API. Naming, although this will make developers who are used to C# naming habits feel uncomfortable, but after careful consideration, I decided to give up the C# convention naming method. | |||||

| TensorFlow provides a handy function to create a Constant. In TF.NET, you can use the same function name `tf.constant` to create it. TF.NET takes the same name as python binding for the API. Naming, although this will make developers who are used to C# naming convention feel uncomfortable, but after careful consideration, I decided to give up the C# convention naming method. One of reason is for model developer, they don't have to learn a totally new different APIs. | |||||

| Initialize a scalar constant: | Initialize a scalar constant: | ||||

| @@ -24,21 +24,57 @@ var c4 = tf.constant("Big Tree"); // string | |||||

| Initialize a constant through ndarray: | Initialize a constant through ndarray: | ||||

| TF.NET works very well with `NumSharp`'s `NDArray`. You can create a tensor from .NET primitive data type and NDArray as well. An `ndarray` is a (usually fixed-size) multidimensional container of items of the same type and size. The number of dimensions and items in an array is defined by its `shape`, which is a tuple of N non-negative integers that specify the sizes of each dimension. | |||||

| ```csharp | ```csharp | ||||

| // dtype=int, shape=(2, 3) | // dtype=int, shape=(2, 3) | ||||

| var nd = np.array(new int[][] | |||||

| var nd = np.array(new int[,] | |||||

| { | { | ||||

| new int[]{3, 1, 1}, | |||||

| new int[]{2, 3, 1} | |||||

| {1, 2, 3}, | |||||

| {4, 5, 6} | |||||

| }); | }); | ||||

| var tensor = tf.constant(nd); | var tensor = tf.constant(nd); | ||||

| ``` | ``` | ||||

| ##### Dive in Constant | |||||

| ### Dive in Constant | |||||

| Now let's explore how `constant` works in `eager` mode inside the black box. | |||||

| Let's continue using the last examples, we're going to initialize a tensor in an ndarray of `[shape(2, 3), int32]`. | |||||

| ##### NDArray | |||||

| The first thing we need to know is about `ndarray`'s memory model. The ndarray memory model is a very important data structure, and almost all underlying computation are inseparable from this datb a structure. One fundamental aspect of the ndarray is that an array is seen as a "chunk" of memory starting at some location. The interpretation of this memory depends on the stride information. A segment of memory is inherently 1-dimensional, and there are many different schemes for arranging the items of an N-dimensional array in a 1-dimensional block. `ndarray` objects can accommodate any strided indexing scheme. In a strided scheme, the N-dimensional index <img src="_static\constant\n-index-formula.svg"/> corresponds to the offset (in bytes) : <img src="_static\constant\n-index-formula-offset.svg" />. | |||||

| <img src="_static\contiguous-block-of-memory.png" /> | |||||

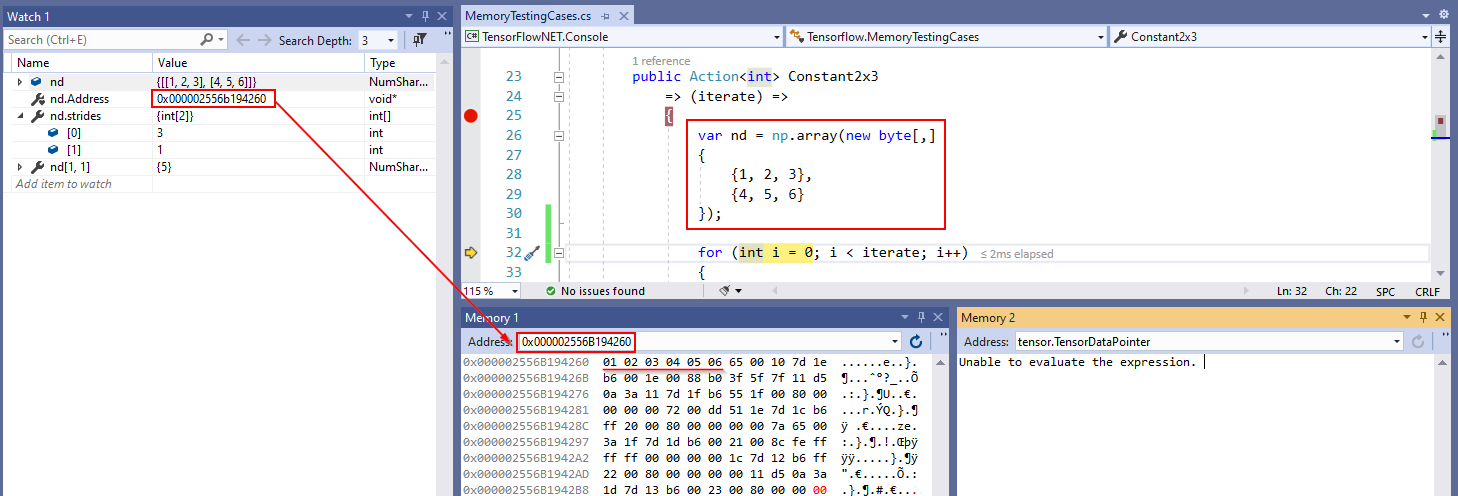

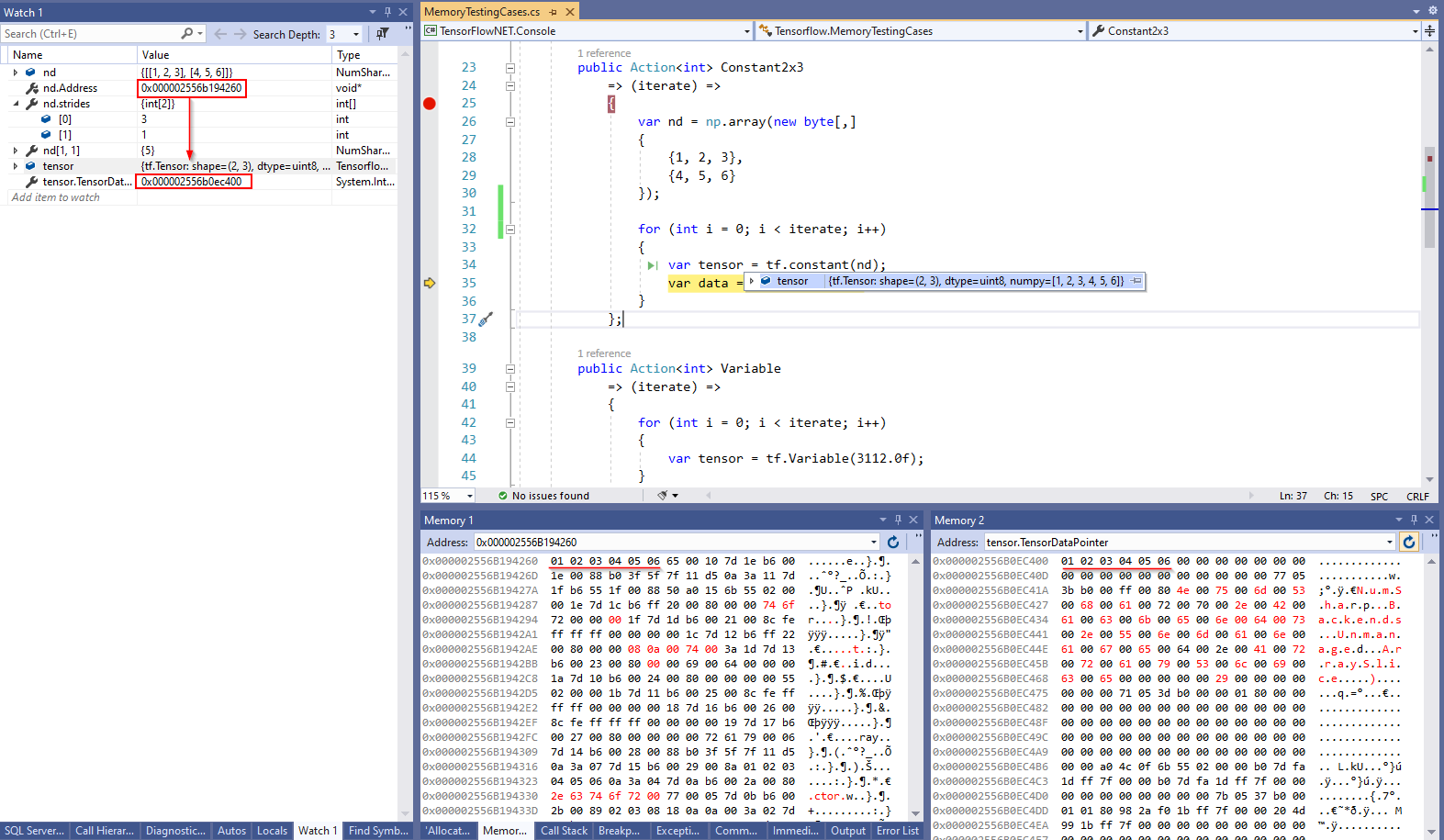

| If we take a look at the real memory allocation in Visual Studio, below diagram helps us understand the data structure more intuitively. The strides keep track the size of every single dimension, help identify the actual offset in heap memory. The formula to calculate offset is: `offset = i * strides[0] + j * strides[1]`. | |||||

| For example: if you want to seek the value in `[1, 1]`, you just need to calculate `1 * 3 + 1 * 1 = 4`, converted to pointer is `0x000002556B194260 + 4 = 0x000002556B194264` where has a value `05`. | |||||

| <img src="_static\contiguous-block-of-memory-ndarray-example-1.png"/> | |||||

| Now let's explore how `constant` works. | |||||

| Through the above diagram, we know how the data is stored in memory, and then we will look at how the data is transferred to `TensorFlow`. | |||||

| ##### Tensor | |||||

| If you don't understand very well what `Tensor` is, you can go back to the chapter `Tensor` there is pretty much explanation if you skipped that chapter. Tensor is actually an NDArray that is with more than 2 dimensions. | |||||

| TensorFlow will decide whether to copy the data or use the same pointer. Normally speaking, it's more safe whenever you copy data for the following process, especially in interoperating between .NET runtime and C++ runtime that they all have their own garbage collection (GC) mechanism, application will crash if someone access a block of destroyed memory. `TF_STRING` and `TF_RESOURCE` tensors have a different representation in `TF_Tensor` than they do in `tensorflow::Tensor`. Other types have the same representation, so copy only if it is safe to do so. | |||||

| <img src="_static\tensor-constant-ndarray.png" /> | |||||

| Before tensorflow is creating the `TF_Tensor`, it checks the shape and data size. If the size doesn't match, it will return `nullptr` pointer. | |||||

| ##### Get the data of Tensor | |||||

| For `eager` mode, it's pretty simple to view the actual value in a `tensor`. | |||||

| ```csharp | |||||

| var data = tensor.numpy() | |||||

| ``` | |||||

| The `data` will be a `ndarray` variable. | |||||

| ##### Other functions to create a Constant | ##### Other functions to create a Constant | ||||
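The new `NDArray` paragraph follows NumPy's description of strided memory: the `n-index-formula-offset.svg` image it embeds renders the offset of an index $(n_0, n_1, \ldots, n_{N-1})$ as $n_{\mathrm{offset}} = \sum_{k=0}^{N-1} s_k n_k$, where $s_k$ is the stride of dimension $k$. The C# sketch below checks the worked `[1, 1]` example with plain .NET arrays. `RowMajorStrides`, `Offset` and `SizeMatches` are hypothetical helpers, not TensorFlow.NET or NumSharp APIs, and `SizeMatches` is only an analogue of the shape/size check described before a `TF_Tensor` is created. It also makes the unit explicit: with 4-byte `int` elements, element offset 4 is byte offset 16 (`0x10`) from the start of the buffer.

```csharp
using System;
using System.Linq;

// Hypothetical helper (not a TensorFlow.NET/NumSharp API): row-major strides,
// i.e. how far to move in memory when index k grows by one.
static long[] RowMajorStrides(int[] dims, int elementSize)
{
    var strides = new long[dims.Length];
    long step = elementSize;
    for (int k = dims.Length - 1; k >= 0; k--)
    {
        strides[k] = step;
        step *= dims[k];
    }
    return strides;
}

// n_offset = sum over k of s_k * n_k
static long Offset(int[] index, long[] strides) =>
    index.Select((n, k) => n * strides[k]).Sum();

// Analogue of the shape/size check: the buffer must hold exactly
// product(dims) * elementSize bytes.
static bool SizeMatches(int[] dims, int elementSize, long bufferBytes) =>
    dims.Aggregate(1L, (acc, d) => acc * d) * elementSize == bufferBytes;

int[] shape = { 2, 3 };
long[] elemStrides = RowMajorStrides(shape, 1);            // {3, 1}
long[] byteStrides = RowMajorStrides(shape, sizeof(int));  // {12, 4}

// .NET rectangular arrays are stored row-major; copy the raw bytes to view them.
int[,] matrix = { { 1, 2, 3 }, { 4, 5, 6 } };
var flat = new int[matrix.Length];
Buffer.BlockCopy(matrix, 0, flat, 0, Buffer.ByteLength(matrix));

int[] idx = { 1, 1 };
long elemOffset = Offset(idx, elemStrides);   // 1*3 + 1*1 = 4
long byteOffset = Offset(idx, byteStrides);   // 1*12 + 1*4 = 16

Console.WriteLine($"byte strides: [{string.Join(", ", byteStrides)}]");
Console.WriteLine($"[1, 1] -> element {elemOffset}, byte {byteOffset}, value {flat[elemOffset]}");
Console.WriteLine($"size check: {SizeMatches(shape, sizeof(int), Buffer.ByteLength(matrix))}");
```

Running it prints byte strides `[12, 4]` and reads the value `5` at index `[1, 1]`, matching the `05` in the diagram; a buffer of any length other than 24 bytes would fail the size check.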

docs/source/EagerMode.md (+2 -1)

````diff
@@ -1,2 +1,3 @@
-# Chapter. Eager Mode
+# Chapter 4. Eager Mode
+TensorFlow's eager execution is an imperative programming environment that evaluates operations immediately, without building graphs: operations return concrete values instead of constructing a computational graph to run later. This makes it easy to get started with TensorFlow and debug models, and it reduces boilerplate as well.
````

docs/source/Graph.md (+1 -1)

````diff
@@ -1,4 +1,4 @@
-# Chapter. Graph
+# Chapter 3. Graph
 TensorFlow uses a **dataflow graph** to represent your computation in terms of the dependencies between individual operations. A graph defines the computation. It doesn't compute anything, it doesn't hold any values, it just defines the operations that you specified in your code.
````
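The eager-execution paragraph added to `EagerMode.md` and the dataflow-graph paragraph in `Graph.md` contrast running an operation immediately with only describing it for later. The plain C# toy below is a sketch of that contrast under a loose analogy: delegates stand in for graph nodes. It does not use or imitate any TensorFlow.NET API.

```csharp
using System;

// Toy illustration only: delegates stand in for graph nodes; this is not TensorFlow.NET.
int a = 2, b = 3;

// Eager style: the multiplication runs now and produces a concrete value.
int eager = a * b;

// Graph style: nodes only describe how to compute; nothing runs yet.
Func<int> inputA = () => a;
Func<int> inputB = () => b;
Func<int> product = () => inputA() * inputB();   // depends on both input nodes

// Inputs change before the "graph" is executed.
a = 5;

// Running the output node evaluates its dependencies at this moment.
int fromGraph = product();

Console.WriteLine($"eager: {eager}, graph: {fromGraph}");
```

The eager result stays `6`, while the deferred node reads its inputs only when it is run and returns `15`, which is the sense in which a graph "doesn't hold any values" until executed.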

docs/source/HelloWorld.md (+14 -21)

````diff
@@ -10,7 +10,7 @@ Let's run a classic HelloWorld program first and see if TensorFlow is running on
 ### Install the TensorFlow.NET SDK
-TensorFlow.NET uses the .NET Standard 2.0 standard, so your new project Target Framework can be .NET Framework or .NET Core. All the examples in this book are using .NET Core 2.2 and Microsoft Visual Studio Community 2017. To start building TensorFlow program you just need to download and install the .NET SDK (Software Development Kit). You have to download the latest .NET Core SDK from offical website: https://dotnet.microsoft.com/download.
+TensorFlow.NET uses the .NET Standard 2.0 standard, so your new project Target Framework can be .NET Framework or .NET Core/ .NET 5. All the examples in this book are using .NET Core 3.1 and Microsoft Visual Studio Community 2019. To start building TensorFlow program you just need to download and install the .NET SDK (Software Development Kit). You have to download the latest .NET Core SDK from offical website: https://dotnet.microsoft.com/download.
@@ -38,9 +38,9 @@ PM> Install-Package SciSharp.TensorFlow.Redist-Windows-GPU
 ### Start coding Hello World
-After installing the TensorFlow.NET package, you can use the `using Tensorflow` to introduce the TensorFlow library.
+After installing the TensorFlow.NET package, you can use the `using static Tensorflow.Binding` to introduce the TensorFlow .NET library.
+TensorFlow 2.x enabled `Eager Mode` by default. About what eager mode is, I will introduce it in detail in the following chapters.
 ```csharp
 using System;
@@ -51,33 +51,26 @@ namespace TensorFlowNET.Examples
     /// <summary>
     /// Simple hello world using TensorFlow
     /// </summary>
-    public class HelloWorld : IExample
+    class Program
     {
-        public void Run()
+        static void Main(string[] args)
         {
-            /* Create a Constant op
-               The op is added as a node to the default graph.
-               The value returned by the constructor represents the output
-               of the Constant op. */
             var hello = tf.constant("Hello, TensorFlow!");
-            // Start tf session
-            using (var sess = tf.Session())
-            {
-                // Run the op
-                var result = sess.run(hello);
-                Console.WriteLine(result);
-            }
+            Console.WriteLine(hello);
         }
     }
 }
 ```
 After CTRL + F5 run, you will get the output.
 ```cmd
-2019-01-05 10:53:42.145931: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2
-Hello, TensorFlow!
-Press any key to continue . . .
+9/20/2020 2:15:09 AM Starting Hello World
+tf.Tensor: shape=(), dtype=string, numpy=Hello, TensorFlow.NET!
+9/20/2020 2:15:09 AM Completed Hello World
+Example: Hello World in 0.1273463s is OK!
+TensorFlow.NET v0.20.1.0
+TensorFlow Binary v2.3.0
+1 of 21 example(s) are completed.
+Press [Enter] to continue...
 ```
 This sample code can be found at [here](https://github.com/SciSharp/SciSharp-Stack-Examples/blob/master/src/TensorFlowNET.Examples/HelloWorld.cs).
````

docs/source/Tensor.md (+10 -9)

| @@ -1,4 +1,4 @@ | |||||

| # Chapter. Tensor | |||||

| # Chapter 1. Tensor | |||||

| ### Represents one of the outputs of an Operation | ### Represents one of the outputs of an Operation | ||||

| @@ -6,13 +6,13 @@ | |||||

| ##### What is Tensor? | ##### What is Tensor? | ||||

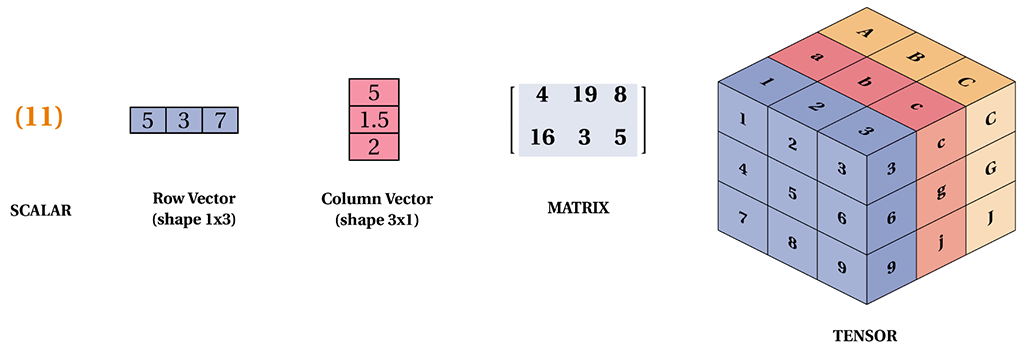

| Tensor holds a multi-dimensional array of elements of a single data type which is very similar with numpy's ndarray. When the dimension is zero, it can be called a scalar. When the dimension is 2, it can be called a matrix. When the dimension is greater than 2, it is usually called a tensor. If you are very familiar with numpy, then understanding Tensor will be quite easy. | |||||

| Tensor holds a multi-dimensional array of elements of a single data type which is very similar with `NumPy`'s `ndarray`. When the dimension is zero, it can be called a scalar. When the dimension is 2, it can be called a matrix. When the dimension is greater than 2, it is usually called a tensor. If you are very familiar with `NumPy`, then understanding Tensor will be quite easy. | |||||

| <img src="_static\tensor-naming.png"> | |||||

| ##### How to create a Tensor? | ##### How to create a Tensor? | ||||

| There are many ways to initialize a Tensor object in TF.NET. It can be initialized from a scalar, string, matrix or tensor. | |||||

| There are many ways to initialize a Tensor object in TF.NET. It can be initialized from a scalar, string, matrix or tensor. But the best way to create a Tensor is using high level APIs like `tf.constant`, `tf.zeros` and `tf.ones`. We'll talk about constant more detail in next chapter. | |||||

| ```csharp | ```csharp | ||||

| // Create a tensor holds a scalar value | // Create a tensor holds a scalar value | ||||

| @@ -32,13 +32,9 @@ Console.WriteLine($"t1: {t1}, t2: {t2}, t3: {t3}"); | |||||

| ##### Data Structure of Tensor | ##### Data Structure of Tensor | ||||

| TF uses column major order. If we use NumSharp to generate a 2 x 3 matrix, if we access the data from 0 to 5 in order, we won't get a number of 1-6, but we get the order of 1, 4, 2, 5, 3, 6. a set of numbers. | TF uses column major order. If we use NumSharp to generate a 2 x 3 matrix, if we access the data from 0 to 5 in order, we won't get a number of 1-6, but we get the order of 1, 4, 2, 5, 3, 6. a set of numbers. | ||||

| ```cs | |||||

| ```csharp | |||||

| // Generate a matrix:[[1, 2, 3], [4, 5, 6]] | // Generate a matrix:[[1, 2, 3], [4, 5, 6]] | ||||

| var nd = np.array(1f, 2f, 3f, 4f, 5f, 6f).reshape(2, 3); | var nd = np.array(1f, 2f, 3f, 4f, 5f, 6f).reshape(2, 3); | ||||

| // The index will be 0 2 4 1 3 5, it's column-major order. | // The index will be 0 2 4 1 3 5, it's column-major order. | ||||

| @@ -49,3 +45,8 @@ var nd = np.array(1f, 2f, 3f, 4f, 5f, 6f).reshape(2, 3); | |||||

|  |  | ||||

|  |  | ||||

| ##### Index/ Slice of Tensor | |||||

| Tensor element can be accessed by `index` and `slice` related operations. Through some high level APIs, we can easily access specific dimension's data. | |||||
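The unchanged `Data Structure of Tensor` context line says TF uses column-major order. For reference, TensorFlow lays out tensor buffers in row-major (C) order, which is also NumPy's default; the sequence `1, 4, 2, 5, 3, 6` and the index list `0 2 4 1 3 5` quoted in that text are what a column-major (Fortran-order) layout produces. The C# sketch below, using hypothetical helpers `RowMajor` and `ColMajor` (not library APIs), fills a flat buffer from the same 2 x 3 matrix under each layout so the two orders can be compared side by side.

```csharp
using System;

// Hypothetical helpers: flat position of element (row, col) of a
// rows x cols matrix under each memory layout.
static int RowMajor(int row, int col, int rows, int cols) => row * cols + col;
static int ColMajor(int row, int col, int rows, int cols) => col * rows + row;

int[,] m = { { 1, 2, 3 }, { 4, 5, 6 } };
int nRows = m.GetLength(0), nCols = m.GetLength(1);

var rowBuf = new int[m.Length];
var colBuf = new int[m.Length];
for (int i = 0; i < nRows; i++)
{
    for (int j = 0; j < nCols; j++)
    {
        rowBuf[RowMajor(i, j, nRows, nCols)] = m[i, j];
        colBuf[ColMajor(i, j, nRows, nCols)] = m[i, j];
    }
}

// Row-major (C order): each row is contiguous.
Console.WriteLine("row-major:    " + string.Join(" ", rowBuf));
// Column-major (Fortran order): each column is contiguous.
Console.WriteLine("column-major: " + string.Join(" ", colBuf));
```

The first line prints `1 2 3 4 5 6` and the second `1 4 2 5 3 6`; visiting the matrix row by row, `ColMajor` yields exactly the positions `0 2 4 1 3 5` mentioned in the diff.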

docs/source/_static/constant/n-index-formula-offset.svg (+41 -0)

| @@ -0,0 +1,41 @@ | |||||

| <?xml version='1.0' encoding='UTF-8'?> | |||||

| <!-- This file was generated by dvisvgm 2.9.1 --> | |||||

| <svg version='1.1' xmlns='http://www.w3.org/2000/svg' xmlns:xlink='http://www.w3.org/1999/xlink' width='85.429852pt' height='34.493011pt' viewBox='190.161786 79.900371 85.429852 34.493011'> | |||||

| <defs> | |||||

| <path id='g2-78' d='M6.312329-4.574844C6.40797-4.96538 6.583313-5.156663 7.157161-5.180573C7.236862-5.180573 7.300623-5.228394 7.300623-5.332005C7.300623-5.379826 7.260772-5.443587 7.181071-5.443587C7.12528-5.443587 6.973848-5.419676 6.38406-5.419676C5.746451-5.419676 5.642839-5.443587 5.571108-5.443587C5.443587-5.443587 5.419676-5.355915 5.419676-5.292154C5.419676-5.188543 5.523288-5.180573 5.595019-5.180573C6.081196-5.164633 6.081196-4.94944 6.081196-4.837858C6.081196-4.798007 6.081196-4.758157 6.049315-4.630635L5.172603-1.139726L3.251806-5.300125C3.188045-5.443587 3.172105-5.443587 2.980822-5.443587H1.944707C1.801245-5.443587 1.697634-5.443587 1.697634-5.292154C1.697634-5.180573 1.793275-5.180573 1.960648-5.180573C2.024408-5.180573 2.263512-5.180573 2.446824-5.132752L1.378829-.852802C1.283188-.454296 1.075965-.278954 .541968-.263014C.494147-.263014 .398506-.255044 .398506-.111582C.398506-.063761 .438356 0 .518057 0C.549938 0 .73325-.02391 1.307098-.02391C1.936737-.02391 2.056289 0 2.12802 0C2.1599 0 2.279452 0 2.279452-.151432C2.279452-.247073 2.191781-.263014 2.13599-.263014C1.849066-.270984 1.609963-.318804 1.609963-.597758C1.609963-.637609 1.633873-.749191 1.633873-.757161L2.677958-4.917559H2.685928L4.901619-.143462C4.95741-.01594 4.96538 0 5.053051 0C5.164633 0 5.172603-.03188 5.204483-.167372L6.312329-4.574844Z'/> | |||||

| <path id='g2-107' d='M2.327273-5.292154C2.335243-5.308095 2.359153-5.411706 2.359153-5.419676C2.359153-5.459527 2.327273-5.531258 2.231631-5.531258C2.199751-5.531258 1.952677-5.507347 1.769365-5.491407L1.323039-5.459527C1.147696-5.443587 1.067995-5.435616 1.067995-5.292154C1.067995-5.180573 1.179577-5.180573 1.275218-5.180573C1.657783-5.180573 1.657783-5.132752 1.657783-5.061021C1.657783-5.037111 1.657783-5.021171 1.617933-4.877709L.486177-.342715C.454296-.223163 .454296-.175342 .454296-.167372C.454296-.03188 .565878 .079701 .71731 .079701C.988294 .079701 1.052055-.175342 1.083935-.286924C1.163636-.621669 1.370859-1.466501 1.458531-1.801245C1.896887-1.753425 2.430884-1.601993 2.430884-1.147696C2.430884-1.107846 2.430884-1.067995 2.414944-.988294C2.391034-.884682 2.375093-.773101 2.375093-.73325C2.375093-.263014 2.725778 .079701 3.188045 .079701C3.52279 .079701 3.730012-.167372 3.833624-.318804C4.024907-.613699 4.152428-1.091905 4.152428-1.139726C4.152428-1.219427 4.088667-1.243337 4.032877-1.243337C3.937235-1.243337 3.921295-1.195517 3.889415-1.052055C3.785803-.67746 3.57858-.143462 3.203985-.143462C2.996762-.143462 2.948941-.318804 2.948941-.533998C2.948941-.637609 2.956912-.73325 2.996762-.916563C3.004732-.948443 3.036613-1.075965 3.036613-1.163636C3.036613-1.817186 2.215691-1.960648 1.809215-2.016438C2.10411-2.191781 2.375093-2.462765 2.470735-2.566376C2.909091-2.996762 3.267746-3.291656 3.650311-3.291656C3.753923-3.291656 3.849564-3.267746 3.913325-3.188045C3.482939-3.132254 3.482939-2.757659 3.482939-2.749689C3.482939-2.574346 3.618431-2.454795 3.793773-2.454795C4.008966-2.454795 4.24807-2.630137 4.24807-2.956912C4.24807-3.227895 4.056787-3.514819 3.658281-3.514819C3.196015-3.514819 2.781569-3.164134 2.327273-2.709838C1.865006-2.255542 1.665753-2.16787 1.538232-2.11208L2.327273-5.292154Z'/> | |||||

| <path id='g0-88' d='M15.135243 16.737235L16.581818 12.911582H16.282939C15.816687 14.154919 14.54944 14.96787 13.174595 15.326526C12.923537 15.386301 11.75193 15.697136 9.456538 15.697136H2.247572L8.332752 8.5599C8.416438 8.464259 8.440349 8.428394 8.440349 8.368618C8.440349 8.344707 8.440349 8.308842 8.356663 8.18929L2.785554 .573848H9.336986C10.938979 .573848 12.026899 .74122 12.134496 .765131C12.780075 .860772 13.820174 1.06401 14.764633 1.661768C15.063512 1.853051 15.876463 2.391034 16.282939 3.359402H16.581818L15.135243 0H1.004234C.729265 0 .71731 .011955 .681445 .083686C.669489 .119552 .669489 .3467 .669489 .478207L6.993773 9.133748L.800996 16.390535C.681445 16.533998 .681445 16.593773 .681445 16.605729C.681445 16.737235 .789041 16.737235 1.004234 16.737235H15.135243Z'/> | |||||

| <path id='g1-0' d='M5.571108-1.809215C5.69863-1.809215 5.873973-1.809215 5.873973-1.992528S5.69863-2.175841 5.571108-2.175841H1.004234C.876712-2.175841 .70137-2.175841 .70137-1.992528S.876712-1.809215 1.004234-1.809215H5.571108Z'/> | |||||

| <path id='g5-61' d='M8.069738-3.873474C8.237111-3.873474 8.452304-3.873474 8.452304-4.088667C8.452304-4.315816 8.249066-4.315816 8.069738-4.315816H1.028144C.860772-4.315816 .645579-4.315816 .645579-4.100623C.645579-3.873474 .848817-3.873474 1.028144-3.873474H8.069738ZM8.069738-1.649813C8.237111-1.649813 8.452304-1.649813 8.452304-1.865006C8.452304-2.092154 8.249066-2.092154 8.069738-2.092154H1.028144C.860772-2.092154 .645579-2.092154 .645579-1.876961C.645579-1.649813 .848817-1.649813 1.028144-1.649813H8.069738Z'/> | |||||

| <path id='g4-11' d='M3.809714-3.172105H4.774097V-3.435118H3.785803V-4.327771C3.785803-5.045081 4.184309-5.387796 4.534994-5.387796C4.558904-5.387796 4.654545-5.387796 4.766127-5.347945C4.622665-5.276214 4.582814-5.140722 4.582814-5.037111C4.582814-4.829888 4.734247-4.678456 4.941469-4.678456C5.156663-4.678456 5.300125-4.829888 5.300125-5.037111C5.300125-5.379826 4.96538-5.610959 4.542964-5.610959C4.24807-5.610959 3.905355-5.507347 3.642341-5.276214C3.379328-5.587049 2.909091-5.610959 2.693898-5.610959C1.896887-5.610959 .884682-5.212453 .884682-4.311831V-3.435118H.231133V-3.172105H.884682V-.621669C.884682-.263014 .789041-.263014 .278954-.263014V0C.589788-.02391 1.036115-.02391 1.171606-.02391C1.331009-.02391 1.761395-.02391 2.072229 0V-.263014C1.562142-.263014 1.466501-.263014 1.466501-.621669V-3.172105H3.227895V-.621669C3.227895-.263014 3.132254-.263014 2.622167-.263014V0C2.933001-.02391 3.371357-.02391 3.56264-.02391C4.032877-.02391 4.048817-.02391 4.566874 0V-.263014H4.407472C3.825654-.263014 3.809714-.350685 3.809714-.637609V-3.172105ZM1.44259-3.435118V-4.303861C1.44259-5.076961 2.13599-5.387796 2.677958-5.387796C2.749689-5.387796 3.052553-5.387796 3.283686-5.260274C3.076463-5.180573 3.052553-5.00523 3.052553-4.925529C3.052553-4.861768 3.068493-4.686426 3.251806-4.606725C3.227895-4.511083 3.227895-4.367621 3.227895-4.327771V-3.435118H1.44259Z'/> | |||||

| <path id='g4-48' d='M3.897385-2.542466C3.897385-3.395268 3.809714-3.913325 3.5467-4.423412C3.196015-5.124782 2.550436-5.300125 2.11208-5.300125C1.107846-5.300125 .74122-4.550934 .629639-4.327771C.342715-3.745953 .326775-2.956912 .326775-2.542466C.326775-2.016438 .350685-1.211457 .73325-.573848C1.099875 .01594 1.689664 .167372 2.11208 .167372C2.494645 .167372 3.180075 .047821 3.57858-.74122C3.873474-1.315068 3.897385-2.024408 3.897385-2.542466ZM2.11208-.055791C1.841096-.055791 1.291158-.183313 1.123786-1.020174C1.036115-1.474471 1.036115-2.223661 1.036115-2.638107C1.036115-3.188045 1.036115-3.745953 1.123786-4.184309C1.291158-4.99726 1.912827-5.076961 2.11208-5.076961C2.383064-5.076961 2.933001-4.941469 3.092403-4.216189C3.188045-3.777833 3.188045-3.180075 3.188045-2.638107C3.188045-2.16787 3.188045-1.45056 3.092403-1.004234C2.925031-.167372 2.375093-.055791 2.11208-.055791Z'/> | |||||

| <path id='g4-49' d='M2.502615-5.076961C2.502615-5.292154 2.486675-5.300125 2.271482-5.300125C1.944707-4.98132 1.522291-4.790037 .765131-4.790037V-4.527024C.980324-4.527024 1.41071-4.527024 1.872976-4.742217V-.653549C1.872976-.358655 1.849066-.263014 1.091905-.263014H.812951V0C1.139726-.02391 1.825156-.02391 2.183811-.02391S3.235866-.02391 3.56264 0V-.263014H3.283686C2.526526-.263014 2.502615-.358655 2.502615-.653549V-5.076961Z'/> | |||||

| <path id='g4-61' d='M5.826152-2.654047C5.945704-2.654047 6.105106-2.654047 6.105106-2.83736S5.913823-3.020672 5.794271-3.020672H.781071C.661519-3.020672 .470237-3.020672 .470237-2.83736S.629639-2.654047 .749191-2.654047H5.826152ZM5.794271-.964384C5.913823-.964384 6.105106-.964384 6.105106-1.147696S5.945704-1.331009 5.826152-1.331009H.749191C.629639-1.331009 .470237-1.331009 .470237-1.147696S.661519-.964384 .781071-.964384H5.794271Z'/> | |||||

| <path id='g4-101' d='M3.291656-1.817186C3.466999-1.817186 3.514819-1.817186 3.514819-2.000498C3.514819-2.709838 3.124284-3.55467 2.000498-3.55467C1.012204-3.55467 .239103-2.733748 .239103-1.745455C.239103-.71731 1.099875 .079701 2.10411 .079701C3.116314 .079701 3.514819-.773101 3.514819-.956413C3.514819-.988294 3.490909-1.067995 3.387298-1.067995C3.299626-1.067995 3.283686-1.012204 3.267746-.964384C2.980822-.191283 2.295392-.167372 2.15193-.167372C1.793275-.167372 1.42665-.334745 1.187547-.70137S.948443-1.578082 .948443-1.817186H3.291656ZM.956413-2.024408C1.028144-3.140224 1.705604-3.331507 2.000498-3.331507C2.933001-3.331507 2.964882-2.207721 2.972852-2.024408H.956413Z'/> | |||||

| <path id='g4-111' d='M3.985056-1.697634C3.985056-2.693898 3.164134-3.55467 2.11208-3.55467S.239103-2.693898 .239103-1.697634S1.091905 .079701 2.11208 .079701C3.140224 .079701 3.985056-.70137 3.985056-1.697634ZM2.11208-.167372C1.681694-.167372 1.346949-.374595 1.171606-.653549C.972354-.980324 .948443-1.370859 .948443-1.769365C.948443-2.072229 .948443-2.550436 1.195517-2.893151C1.40274-3.172105 1.737484-3.331507 2.11208-3.331507C2.526526-3.331507 2.86924-3.132254 3.052553-2.8533C3.267746-2.518555 3.275716-2.088169 3.275716-1.769365C3.275716-1.40274 3.259776-.964384 3.036613-.629639C2.82142-.310834 2.462765-.167372 2.11208-.167372Z'/> | |||||

| <path id='g4-115' d='M2.83736-3.347447C2.83736-3.474969 2.83736-3.55467 2.733748-3.55467C2.693898-3.55467 2.669988-3.55467 2.542466-3.427148C2.526526-3.419178 2.454795-3.347447 2.430884-3.347447C2.422914-3.347447 2.406974-3.347447 2.359153-3.379328C2.231631-3.466999 2.000498-3.55467 1.641843-3.55467C.526027-3.55467 .278954-2.948941 .278954-2.566376C.278954-2.16787 .573848-1.936737 .597758-1.912827C.916563-1.673724 1.099875-1.641843 1.633873-1.546202C2.008468-1.474471 2.622167-1.362889 2.622167-.820922C2.622167-.510087 2.414944-.143462 1.681694-.143462C.876712-.143462 .645579-.765131 .541968-1.187547C.510087-1.291158 .502117-1.331009 .406476-1.331009C.278954-1.331009 .278954-1.267248 .278954-1.115816V-.127522C.278954 0 .278954 .079701 .382565 .079701C.430386 .079701 .438356 .071731 .581818-.079701C.621669-.119552 .70934-.223163 .749191-.263014C1.107846 .063761 1.482441 .079701 1.689664 .079701C2.701868 .079701 3.052553-.502117 3.052553-1.028144C3.052553-1.41071 2.82142-1.968618 1.872976-2.14396C1.809215-2.1599 1.362889-2.239601 1.331009-2.239601C1.083935-2.295392 .70934-2.462765 .70934-2.781569C.70934-3.020672 .884682-3.355417 1.641843-3.355417C2.534496-3.355417 2.574346-2.701868 2.590286-2.478705C2.598257-2.414944 2.654047-2.391034 2.709838-2.391034C2.83736-2.391034 2.83736-2.446824 2.83736-2.598257V-3.347447Z'/> | |||||

| <path id='g4-116' d='M1.482441-3.172105H2.669988V-3.435118H1.482441V-4.901619H1.235367C1.227397-4.176339 .900623-3.419178 .159402-3.395268V-3.172105H.876712V-.996264C.876712-.063761 1.594022 .079701 1.960648 .079701C2.494645 .079701 2.81345-.398506 2.81345-.996264V-1.44259H2.566376V-1.012204C2.566376-.462267 2.319303-.167372 2.016438-.167372C1.482441-.167372 1.482441-.852802 1.482441-.980324V-3.172105Z'/> | |||||

| <path id='g3-110' d='M2.462765-3.502864C2.486675-3.574595 2.785554-4.172354 3.227895-4.554919C3.53873-4.841843 3.945205-5.033126 4.411457-5.033126C4.889664-5.033126 5.057036-4.674471 5.057036-4.196264C5.057036-3.514819 4.566874-2.15193 4.327771-1.506351C4.220174-1.219427 4.160399-1.06401 4.160399-.848817C4.160399-.310834 4.531009 .119552 5.104857 .119552C6.216687 .119552 6.635118-1.637858 6.635118-1.709589C6.635118-1.769365 6.587298-1.817186 6.515567-1.817186C6.40797-1.817186 6.396015-1.78132 6.336239-1.578082C6.06127-.597758 5.606974-.119552 5.140722-.119552C5.021171-.119552 4.829888-.131507 4.829888-.514072C4.829888-.812951 4.961395-1.171606 5.033126-1.338979C5.272229-1.996513 5.774346-3.335492 5.774346-4.016936C5.774346-4.734247 5.355915-5.272229 4.447323-5.272229C3.383313-5.272229 2.82142-4.519054 2.606227-4.220174C2.570361-4.901619 2.080199-5.272229 1.554172-5.272229C1.171606-5.272229 .908593-5.045081 .705355-4.638605C.490162-4.208219 .32279-3.490909 .32279-3.443088S.37061-3.335492 .454296-3.335492C.549938-3.335492 .561893-3.347447 .633624-3.622416C.824907-4.351681 1.0401-5.033126 1.518306-5.033126C1.793275-5.033126 1.888917-4.841843 1.888917-4.483188C1.888917-4.220174 1.769365-3.753923 1.685679-3.383313L1.350934-2.092154C1.303113-1.865006 1.171606-1.327024 1.111831-1.111831C1.028144-.800996 .896638-.239103 .896638-.179328C.896638-.011955 1.028144 .119552 1.207472 .119552C1.350934 .119552 1.518306 .047821 1.613948-.131507C1.637858-.191283 1.745455-.609714 1.80523-.848817L2.068244-1.924782L2.462765-3.502864Z'/> | |||||

| <path id='g3-115' d='M2.725778-2.391034C2.929016-2.355168 3.251806-2.283437 3.323537-2.271482C3.478954-2.223661 4.016936-2.032379 4.016936-1.458531C4.016936-1.08792 3.682192-.119552 2.295392-.119552C2.044334-.119552 1.147696-.155417 .908593-.812951C1.3868-.753176 1.625903-1.123786 1.625903-1.3868C1.625903-1.637858 1.458531-1.769365 1.219427-1.769365C.956413-1.769365 .609714-1.566127 .609714-1.028144C.609714-.32279 1.327024 .119552 2.283437 .119552C4.100623 .119552 4.638605-1.219427 4.638605-1.841096C4.638605-2.020423 4.638605-2.355168 4.25604-2.737733C3.957161-3.024658 3.670237-3.084433 3.024658-3.21594C2.701868-3.287671 2.187796-3.395268 2.187796-3.93325C2.187796-4.172354 2.402989-5.033126 3.53873-5.033126C4.040847-5.033126 4.531009-4.841843 4.65056-4.411457C4.124533-4.411457 4.100623-3.957161 4.100623-3.945205C4.100623-3.694147 4.327771-3.622416 4.435367-3.622416C4.60274-3.622416 4.937484-3.753923 4.937484-4.25604S4.483188-5.272229 3.550685-5.272229C1.984558-5.272229 1.566127-4.040847 1.566127-3.550685C1.566127-2.642092 2.450809-2.450809 2.725778-2.391034Z'/> | |||||

| </defs> | |||||

| <g id='page1'> | |||||

| <use x='190.161786' y='100.290628' xlink:href='#g3-110'/> | |||||

| <use x='197.149392' y='102.083891' xlink:href='#g4-111'/> | |||||

| <use x='201.383575' y='102.083891' xlink:href='#g4-11'/> | |||||

| <use x='206.323455' y='102.083891' xlink:href='#g4-115'/> | |||||

| <use x='209.663758' y='102.083891' xlink:href='#g4-101'/> | |||||

| <use x='213.427476' y='102.083891' xlink:href='#g4-116'/> | |||||

| <use x='220.539691' y='100.290628' xlink:href='#g5-61'/> | |||||

| <use x='232.965172' y='85.346607' xlink:href='#g2-78'/> | |||||

| <use x='240.535477' y='85.346607' xlink:href='#g1-0'/> | |||||

| <use x='247.121983' y='85.346607' xlink:href='#g4-49'/> | |||||

| <use x='233.52636' y='88.933163' xlink:href='#g0-88'/> | |||||

| <use x='234.439516' y='114.393382' xlink:href='#g2-107'/> | |||||

| <use x='239.061132' y='114.393382' xlink:href='#g4-61'/> | |||||

| <use x='245.647638' y='114.393382' xlink:href='#g4-48'/> | |||||

| <use x='253.348664' y='100.290628' xlink:href='#g3-115'/> | |||||

| <use x='258.86267' y='102.083891' xlink:href='#g2-107'/> | |||||

| <use x='263.982417' y='100.290628' xlink:href='#g3-110'/> | |||||

| <use x='270.970023' y='102.083891' xlink:href='#g2-107'/> | |||||

| </g> | |||||

| </svg> | |||||
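The SVG above appears to be a dvisvgm rendering of a strided-offset formula. Read from its glyphs, it says that the memory offset of an element with index $(n_0, \ldots, n_{N-1})$ is the stride-weighted sum of its indices, where $s_k$ is the stride of dimension $k$.

As a worked example, assume a C-contiguous (row-major) 2×3 array of 1-byte elements, such as the `{ {1, 2, 3}, {4, 5, 6} }` byte array built in `Constant2x3`. Its strides are $s = (3, 1)$ bytes, so element $(1, 2)$ sits at byte 5, which holds the value 6. The layout assumption is ours; the SVG itself only states the formula.

```latex
n_{\text{offset}} = \sum_{k=0}^{N-1} s_k n_k
\qquad
s = (3, 1),\; (n_0, n_1) = (1, 2)
\;\Rightarrow\;
n_{\text{offset}} = 3 \cdot 1 + 1 \cdot 2 = 5
```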

+ 33

- 0

docs/source/_static/constant/n-index-formula.svg

| @@ -0,0 +1,33 @@ | |||||

| <?xml version='1.0' encoding='UTF-8'?> | |||||

| <!-- This file was generated by dvisvgm 2.9.1 --> | |||||

| <svg version='1.1' xmlns='http://www.w3.org/2000/svg' xmlns:xlink='http://www.w3.org/1999/xlink' width='83.908685pt' height='11.955168pt' viewBox='56.413267 56.787049 83.908685 11.955168'> | |||||

| <defs> | |||||

| <path id='g0-0' d='M5.571108-1.809215C5.69863-1.809215 5.873973-1.809215 5.873973-1.992528S5.69863-2.175841 5.571108-2.175841H1.004234C.876712-2.175841 .70137-2.175841 .70137-1.992528S.876712-1.809215 1.004234-1.809215H5.571108Z'/> | |||||

| <path id='g3-48' d='M3.897385-2.542466C3.897385-3.395268 3.809714-3.913325 3.5467-4.423412C3.196015-5.124782 2.550436-5.300125 2.11208-5.300125C1.107846-5.300125 .74122-4.550934 .629639-4.327771C.342715-3.745953 .326775-2.956912 .326775-2.542466C.326775-2.016438 .350685-1.211457 .73325-.573848C1.099875 .01594 1.689664 .167372 2.11208 .167372C2.494645 .167372 3.180075 .047821 3.57858-.74122C3.873474-1.315068 3.897385-2.024408 3.897385-2.542466ZM2.11208-.055791C1.841096-.055791 1.291158-.183313 1.123786-1.020174C1.036115-1.474471 1.036115-2.223661 1.036115-2.638107C1.036115-3.188045 1.036115-3.745953 1.123786-4.184309C1.291158-4.99726 1.912827-5.076961 2.11208-5.076961C2.383064-5.076961 2.933001-4.941469 3.092403-4.216189C3.188045-3.777833 3.188045-3.180075 3.188045-2.638107C3.188045-2.16787 3.188045-1.45056 3.092403-1.004234C2.925031-.167372 2.375093-.055791 2.11208-.055791Z'/> | |||||

| <path id='g3-49' d='M2.502615-5.076961C2.502615-5.292154 2.486675-5.300125 2.271482-5.300125C1.944707-4.98132 1.522291-4.790037 .765131-4.790037V-4.527024C.980324-4.527024 1.41071-4.527024 1.872976-4.742217V-.653549C1.872976-.358655 1.849066-.263014 1.091905-.263014H.812951V0C1.139726-.02391 1.825156-.02391 2.183811-.02391S3.235866-.02391 3.56264 0V-.263014H3.283686C2.526526-.263014 2.502615-.358655 2.502615-.653549V-5.076961Z'/> | |||||

| <path id='g2-58' d='M2.199751-.573848C2.199751-.920548 1.912827-1.159651 1.625903-1.159651C1.279203-1.159651 1.0401-.872727 1.0401-.585803C1.0401-.239103 1.327024 0 1.613948 0C1.960648 0 2.199751-.286924 2.199751-.573848Z'/> | |||||

| <path id='g2-59' d='M2.331258 .047821C2.331258-.645579 2.10411-1.159651 1.613948-1.159651C1.231382-1.159651 1.0401-.848817 1.0401-.585803S1.219427 0 1.625903 0C1.78132 0 1.912827-.047821 2.020423-.155417C2.044334-.179328 2.056289-.179328 2.068244-.179328C2.092154-.179328 2.092154-.011955 2.092154 .047821C2.092154 .442341 2.020423 1.219427 1.327024 1.996513C1.195517 2.139975 1.195517 2.163885 1.195517 2.187796C1.195517 2.247572 1.255293 2.307347 1.315068 2.307347C1.41071 2.307347 2.331258 1.422665 2.331258 .047821Z'/> | |||||

| <path id='g2-110' d='M2.462765-3.502864C2.486675-3.574595 2.785554-4.172354 3.227895-4.554919C3.53873-4.841843 3.945205-5.033126 4.411457-5.033126C4.889664-5.033126 5.057036-4.674471 5.057036-4.196264C5.057036-3.514819 4.566874-2.15193 4.327771-1.506351C4.220174-1.219427 4.160399-1.06401 4.160399-.848817C4.160399-.310834 4.531009 .119552 5.104857 .119552C6.216687 .119552 6.635118-1.637858 6.635118-1.709589C6.635118-1.769365 6.587298-1.817186 6.515567-1.817186C6.40797-1.817186 6.396015-1.78132 6.336239-1.578082C6.06127-.597758 5.606974-.119552 5.140722-.119552C5.021171-.119552 4.829888-.131507 4.829888-.514072C4.829888-.812951 4.961395-1.171606 5.033126-1.338979C5.272229-1.996513 5.774346-3.335492 5.774346-4.016936C5.774346-4.734247 5.355915-5.272229 4.447323-5.272229C3.383313-5.272229 2.82142-4.519054 2.606227-4.220174C2.570361-4.901619 2.080199-5.272229 1.554172-5.272229C1.171606-5.272229 .908593-5.045081 .705355-4.638605C.490162-4.208219 .32279-3.490909 .32279-3.443088S.37061-3.335492 .454296-3.335492C.549938-3.335492 .561893-3.347447 .633624-3.622416C.824907-4.351681 1.0401-5.033126 1.518306-5.033126C1.793275-5.033126 1.888917-4.841843 1.888917-4.483188C1.888917-4.220174 1.769365-3.753923 1.685679-3.383313L1.350934-2.092154C1.303113-1.865006 1.171606-1.327024 1.111831-1.111831C1.028144-.800996 .896638-.239103 .896638-.179328C.896638-.011955 1.028144 .119552 1.207472 .119552C1.350934 .119552 1.518306 .047821 1.613948-.131507C1.637858-.191283 1.745455-.609714 1.80523-.848817L2.068244-1.924782L2.462765-3.502864Z'/> | |||||

| <path id='g1-78' d='M6.312329-4.574844C6.40797-4.96538 6.583313-5.156663 7.157161-5.180573C7.236862-5.180573 7.300623-5.228394 7.300623-5.332005C7.300623-5.379826 7.260772-5.443587 7.181071-5.443587C7.12528-5.443587 6.973848-5.419676 6.38406-5.419676C5.746451-5.419676 5.642839-5.443587 5.571108-5.443587C5.443587-5.443587 5.419676-5.355915 5.419676-5.292154C5.419676-5.188543 5.523288-5.180573 5.595019-5.180573C6.081196-5.164633 6.081196-4.94944 6.081196-4.837858C6.081196-4.798007 6.081196-4.758157 6.049315-4.630635L5.172603-1.139726L3.251806-5.300125C3.188045-5.443587 3.172105-5.443587 2.980822-5.443587H1.944707C1.801245-5.443587 1.697634-5.443587 1.697634-5.292154C1.697634-5.180573 1.793275-5.180573 1.960648-5.180573C2.024408-5.180573 2.263512-5.180573 2.446824-5.132752L1.378829-.852802C1.283188-.454296 1.075965-.278954 .541968-.263014C.494147-.263014 .398506-.255044 .398506-.111582C.398506-.063761 .438356 0 .518057 0C.549938 0 .73325-.02391 1.307098-.02391C1.936737-.02391 2.056289 0 2.12802 0C2.1599 0 2.279452 0 2.279452-.151432C2.279452-.247073 2.191781-.263014 2.13599-.263014C1.849066-.270984 1.609963-.318804 1.609963-.597758C1.609963-.637609 1.633873-.749191 1.633873-.757161L2.677958-4.917559H2.685928L4.901619-.143462C4.95741-.01594 4.96538 0 5.053051 0C5.164633 0 5.172603-.03188 5.204483-.167372L6.312329-4.574844Z'/> | |||||

| <path id='g4-40' d='M3.88543 2.905106C3.88543 2.86924 3.88543 2.84533 3.682192 2.642092C2.486675 1.43462 1.817186-.537983 1.817186-2.976837C1.817186-5.296139 2.379078-7.292653 3.765878-8.703362C3.88543-8.810959 3.88543-8.834869 3.88543-8.870735C3.88543-8.942466 3.825654-8.966376 3.777833-8.966376C3.622416-8.966376 2.642092-8.105604 2.056289-6.933998C1.446575-5.726526 1.171606-4.447323 1.171606-2.976837C1.171606-1.912827 1.338979-.490162 1.960648 .789041C2.666002 2.223661 3.646326 3.000747 3.777833 3.000747C3.825654 3.000747 3.88543 2.976837 3.88543 2.905106Z'/> | |||||

| <path id='g4-41' d='M3.371357-2.976837C3.371357-3.88543 3.251806-5.36787 2.582316-6.75467C1.876961-8.18929 .896638-8.966376 .765131-8.966376C.71731-8.966376 .657534-8.942466 .657534-8.870735C.657534-8.834869 .657534-8.810959 .860772-8.607721C2.056289-7.400249 2.725778-5.427646 2.725778-2.988792C2.725778-.669489 2.163885 1.327024 .777086 2.737733C.657534 2.84533 .657534 2.86924 .657534 2.905106C.657534 2.976837 .71731 3.000747 .765131 3.000747C.920548 3.000747 1.900872 2.139975 2.486675 .968369C3.096389-.251059 3.371357-1.542217 3.371357-2.976837Z'/> | |||||

| </defs> | |||||

| <g id='page1'> | |||||

| <use x='56.413267' y='65.753425' xlink:href='#g4-40'/> | |||||

| <use x='60.965593' y='65.753425' xlink:href='#g2-110'/> | |||||

| <use x='67.953199' y='67.546688' xlink:href='#g3-48'/> | |||||

| <use x='72.685513' y='65.753425' xlink:href='#g2-59'/> | |||||

| <use x='77.929672' y='65.753425' xlink:href='#g2-110'/> | |||||

| <use x='84.917278' y='67.546688' xlink:href='#g3-49'/> | |||||

| <use x='89.649593' y='65.753425' xlink:href='#g2-59'/> | |||||

| <use x='94.893752' y='65.753425' xlink:href='#g2-58'/> | |||||

| <use x='98.145413' y='65.753425' xlink:href='#g2-58'/> | |||||

| <use x='101.397074' y='65.753425' xlink:href='#g2-58'/> | |||||

| <use x='104.648735' y='65.753425' xlink:href='#g2-59'/> | |||||

| <use x='109.892894' y='65.753425' xlink:href='#g2-110'/> | |||||

| <use x='116.8805' y='67.546688' xlink:href='#g1-78'/> | |||||

| <use x='124.450805' y='67.546688' xlink:href='#g0-0'/> | |||||

| <use x='131.037312' y='67.546688' xlink:href='#g3-49'/> | |||||

| <use x='135.769627' y='65.753425' xlink:href='#g4-41'/> | |||||

| </g> | |||||

| </svg> | |||||
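Read from its glyphs, `n-index-formula.svg` renders the index tuple of a single element in an $N$-dimensional array, with one index $n_k$ per dimension $k$. This is the tuple that the strided-offset formula weights by the per-dimension strides:

```latex
(n_0, n_1, \ldots, n_{N-1})
```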

BIN

docs/source/_static/contiguous-block-of-memory-ndarray-example-1.png

BIN

docs/source/_static/contiguous-block-of-memory.png

BIN

docs/source/_static/tensor-constant-ndarray.png

BIN

docs/source/_static/tensor-naming.png

+ 17

- 0

src/TensorFlowNET.Console/MemoryTestingCases.cs

| @@ -1,6 +1,7 @@ | |||||

| using System; | using System; | ||||

| using System.Collections.Generic; | using System.Collections.Generic; | ||||

| using System.Text; | using System.Text; | ||||

| using NumSharp; | |||||

| using static Tensorflow.Binding; | using static Tensorflow.Binding; | ||||

| namespace Tensorflow | namespace Tensorflow | ||||

| @@ -18,6 +19,22 @@ namespace Tensorflow | |||||

| var tensor = tf.constant(3112.0f); | var tensor = tf.constant(3112.0f); | ||||

| } | } | ||||

| }; | }; | ||||

| public Action<int> Constant2x3 | |||||

| => (iterate) => | |||||

| { | |||||

| var nd = np.array(new byte[,] | |||||

| { | |||||

| {1, 2, 3}, | |||||

| {4, 5, 6} | |||||

| }); | |||||

| for (int i = 0; i < iterate; i++) | |||||

| { | |||||

| var tensor = tf.constant(nd); | |||||

| var data = tensor.numpy(); | |||||

| } | |||||

| }; | |||||

| public Action<int> Variable | public Action<int> Variable | ||||

| => (iterate) => | => (iterate) => | ||||

| { | { | ||||

+ 3

- 0

src/TensorFlowNET.Console/Program.cs

| @@ -15,6 +15,9 @@ namespace Tensorflow | |||||

| int batchSize = 1000; | int batchSize = 1000; | ||||

| // explaination of constant | |||||

| mm.Execute(10, 100 * batchSize, cases.Constant2x3); | |||||

| // 1 million float tensor 68M. | // 1 million float tensor 68M. | ||||

| mm.Execute(10, 100 * batchSize, cases.Constant); | mm.Execute(10, 100 * batchSize, cases.Constant); | ||||

+ 1

- 1

src/TensorFlowNET.Console/TensorFlowNET.Console.csproj

| @@ -1,4 +1,4 @@ | |||||

| <Project Sdk="Microsoft.NET.Sdk"> | |||||

| <Project Sdk="Microsoft.NET.Sdk"> | |||||

| <PropertyGroup> | <PropertyGroup> | ||||

| <OutputType>Exe</OutputType> | <OutputType>Exe</OutputType> | ||||

+ 4

- 0

src/TensorFlowNET.Core/APIs/tf.array.cs

| @@ -29,6 +29,10 @@ namespace Tensorflow | |||||

| /// A convenient alias for None, useful for indexing arrays. | /// A convenient alias for None, useful for indexing arrays. | ||||

| /// </summary> | /// </summary> | ||||

| public Slice newaxis = Slice.NewAxis; | public Slice newaxis = Slice.NewAxis; | ||||

| /// <summary> | |||||

| /// A convenient alias for ... | |||||

| /// </summary> | |||||

| public Slice ellipsis = Slice.Ellipsis; | |||||

| /// <summary> | /// <summary> | ||||

| /// BatchToSpace for N-D tensors of type T. | /// BatchToSpace for N-D tensors of type T. | ||||

+ 26

- 0

src/TensorFlowNET.Core/APIs/tf.autograph.cs

| @@ -0,0 +1,26 @@ | |||||

| /***************************************************************************** | |||||

| Copyright 2020 Haiping Chen. All Rights Reserved. | |||||

| Licensed under the Apache License, Version 2.0 (the "License"); | |||||

| you may not use this file except in compliance with the License. | |||||

| You may obtain a copy of the License at | |||||

| http://www.apache.org/licenses/LICENSE-2.0 | |||||

| Unless required by applicable law or agreed to in writing, software | |||||

| distributed under the License is distributed on an "AS IS" BASIS, | |||||

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||||

| See the License for the specific language governing permissions and | |||||

| limitations under the License. | |||||

| ******************************************************************************/ | |||||

| using Tensorflow.Graphs; | |||||

| using Tensorflow.Operations; | |||||

| namespace Tensorflow | |||||

| { | |||||

| public partial class tensorflow | |||||

| { | |||||

| public AutoGraph autograph = new AutoGraph(); | |||||

| } | |||||

| } | |||||

+ 29

- 15

src/TensorFlowNET.Core/APIs/tf.control_flow.cs

| @@ -15,17 +15,22 @@ | |||||

| ******************************************************************************/ | ******************************************************************************/ | ||||

| using System; | using System; | ||||

| using static Tensorflow.Binding; | |||||

| namespace Tensorflow | namespace Tensorflow | ||||

| { | { | ||||

| public partial class tensorflow | public partial class tensorflow | ||||

| { | { | ||||

| public Tensor cond(Tensor pred, | |||||

| Tensor true_value, | |||||

| Tensor false_false) | |||||

| => control_flow_ops.cond(pred, () => true_value, () => false_false); | |||||

| public Tensor cond(Tensor pred, | public Tensor cond(Tensor pred, | ||||

| Func<ITensorOrOperation> true_fn = null, | Func<ITensorOrOperation> true_fn = null, | ||||

| Func<ITensorOrOperation> false_fn = null, | Func<ITensorOrOperation> false_fn = null, | ||||

| bool strict = false, | |||||

| string name = null) | string name = null) | ||||

| => control_flow_ops.cond(pred, true_fn, false_fn, strict: strict, name: name); | |||||

| => control_flow_ops.cond(pred, true_fn, false_fn, name: name); | |||||

| /// <summary> | /// <summary> | ||||

| /// Create an op that groups multiple operations. | /// Create an op that groups multiple operations. | ||||

| @@ -37,22 +42,31 @@ namespace Tensorflow | |||||

| public Operation group<T>(T[] inputs, string name = null) where T : ITensorOrOperation | public Operation group<T>(T[] inputs, string name = null) where T : ITensorOrOperation | ||||

| => control_flow_ops.group(inputs, name: name); | => control_flow_ops.group(inputs, name: name); | ||||

| /*public Tensor while_loop(Func<Tensor, Tensor> cond, Func<Tensor, Tensor> body, Tensor[] loop_vars, | |||||

| TensorShape shape_invariants = null, | |||||

| public Tensor while_loop(Func<Tensor, Tensor> cond, | |||||

| Func<Tensor, Tensor> body, | |||||

| Tensor loop_vars, | |||||

| int parallel_iterations = 10) | |||||

| { | |||||

| Func<Tensor[], Tensor> cond1 = x | |||||

| => cond(x[0]); | |||||

| Func<Tensor[], Tensor[]> body1 = x | |||||

| => new[] { body(x[0]) }; | |||||

| var results = control_flow_ops.while_loop(cond1, | |||||

| body1, | |||||

| new[] { loop_vars }); | |||||

| return results[0]; | |||||

| } | |||||

| public Tensor[] while_loop(Func<Tensor[], Tensor> cond, | |||||

| Func<Tensor[], Tensor[]> body, | |||||

| Tensor[] loop_vars, | |||||

| int parallel_iterations = 10, | int parallel_iterations = 10, | ||||

| bool back_prop = true, | |||||

| bool swap_memory = false, | |||||

| string name = null, | |||||

| int? maximum_iterations = null, | |||||

| bool return_same_structure = false) | |||||

| string name = null) | |||||

| => control_flow_ops.while_loop(cond, body, loop_vars, | => control_flow_ops.while_loop(cond, body, loop_vars, | ||||

| shape_invariants: shape_invariants, | |||||

| parallel_iterations: parallel_iterations, | parallel_iterations: parallel_iterations, | ||||

| back_prop: back_prop, | |||||

| swap_memory: swap_memory, | |||||

| name: name, | |||||

| maximum_iterations: maximum_iterations, | |||||

| return_same_structure: return_same_structure);*/ | |||||

| name: name); | |||||

| public _ControlDependenciesController control_dependencies(ITensorOrOperation[] control_inputs) | public _ControlDependenciesController control_dependencies(ITensorOrOperation[] control_inputs) | ||||

| => ops.control_dependencies(control_inputs); | => ops.control_dependencies(control_inputs); | ||||

+ 3

- 0

src/TensorFlowNET.Core/APIs/tf.image.cs

| @@ -208,6 +208,9 @@ namespace Tensorflow | |||||

| => image_ops_impl.non_max_suppression_padded(boxes, scores, max_output_size, iou_threshold, score_threshold, pad_to_max_output_size, | => image_ops_impl.non_max_suppression_padded(boxes, scores, max_output_size, iou_threshold, score_threshold, pad_to_max_output_size, | ||||

| name, sorted_input, canonicalized_coordinates, tile_size); | name, sorted_input, canonicalized_coordinates, tile_size); | ||||

| public Tensor resize(Tensor image, TensorShape size) | |||||

| => image_ops_impl.resize_images_v2(image, size); | |||||

| public Tensor resize_bilinear(Tensor images, Tensor size, bool align_corners = false, bool half_pixel_centers = false, string name = null) | public Tensor resize_bilinear(Tensor images, Tensor size, bool align_corners = false, bool half_pixel_centers = false, string name = null) | ||||

| => gen_image_ops.resize_bilinear(images, size, align_corners: align_corners, half_pixel_centers: half_pixel_centers, name: name); | => gen_image_ops.resize_bilinear(images, size, align_corners: align_corners, half_pixel_centers: half_pixel_centers, name: name); | ||||

+ 1

- 6

src/TensorFlowNET.Core/APIs/tf.nn.cs

| @@ -182,12 +182,7 @@ namespace Tensorflow | |||||

| => nn_impl.sigmoid_cross_entropy_with_logits(labels: labels, logits: logits, name: name); | => nn_impl.sigmoid_cross_entropy_with_logits(labels: labels, logits: logits, name: name); | ||||

| public Tensor softmax(Tensor logits, int axis = -1, string name = null) | public Tensor softmax(Tensor logits, int axis = -1, string name = null) | ||||

| { | |||||

| if (axis == -1) | |||||

| return gen_nn_ops.softmax(logits, name); | |||||

| else | |||||

| throw new NotImplementedException(""); | |||||

| } | |||||

| => gen_nn_ops.softmax(logits, name); | |||||

| /// <summary> | /// <summary> | ||||

+ 3

- 0

src/TensorFlowNET.Core/APIs/tf.train.cs

| @@ -44,6 +44,9 @@ namespace Tensorflow | |||||

| public Optimizer AdamOptimizer(float learning_rate, TF_DataType dtype, string name = "Adam") | public Optimizer AdamOptimizer(float learning_rate, TF_DataType dtype, string name = "Adam") | ||||

| => new AdamOptimizer(learning_rate, name: name, dtype: dtype); | => new AdamOptimizer(learning_rate, name: name, dtype: dtype); | ||||

| public Optimizer AdamOptimizer(IVariableV1 learning_rate, string name = "Adam") | |||||

| => new AdamOptimizer(learning_rate.AsTensor(), name: name); | |||||

| public Optimizer AdamOptimizer(Tensor learning_rate, string name = "Adam") | public Optimizer AdamOptimizer(Tensor learning_rate, string name = "Adam") | ||||

| => new AdamOptimizer(learning_rate, name: name); | => new AdamOptimizer(learning_rate, name: name); | ||||

+ 3

- 0

src/TensorFlowNET.Core/Data/DatasetManager.cs

| @@ -25,6 +25,9 @@ namespace Tensorflow | |||||

| public IDatasetV2 from_tensor_slices(Tensor features, Tensor labels) | public IDatasetV2 from_tensor_slices(Tensor features, Tensor labels) | ||||

| => new TensorSliceDataset(features, labels); | => new TensorSliceDataset(features, labels); | ||||

| public IDatasetV2 from_tensor_slices(string[] array) | |||||

| => new TensorSliceDataset(array); | |||||

| public IDatasetV2 from_tensor_slices(NDArray array) | public IDatasetV2 from_tensor_slices(NDArray array) | ||||

| => new TensorSliceDataset(array); | => new TensorSliceDataset(array); | ||||

+ 1

- 1

src/TensorFlowNET.Core/Data/DatasetV2.cs

| @@ -52,7 +52,7 @@ namespace Tensorflow | |||||

| public IDatasetV2 map(Func<Tensor, Tensor> map_func, | public IDatasetV2 map(Func<Tensor, Tensor> map_func, | ||||

| bool use_inter_op_parallelism = true, | bool use_inter_op_parallelism = true, | ||||

| bool preserve_cardinality = false, | |||||

| bool preserve_cardinality = true, | |||||

| bool use_legacy_function = false) | bool use_legacy_function = false) | ||||

| => new MapDataset(this, | => new MapDataset(this, | ||||

| map_func, | map_func, | ||||

+ 6

- 4

src/TensorFlowNET.Core/Data/MapDataset.cs

| @@ -1,6 +1,10 @@ | |||||

| using System; | using System; | ||||

| using System.Collections.Generic; | using System.Collections.Generic; | ||||

| using System.Linq; | |||||

| using System.Text; | using System.Text; | ||||

| using Tensorflow.Functions; | |||||

| using Tensorflow.Graphs; | |||||

| using static Tensorflow.Binding; | |||||

| namespace Tensorflow | namespace Tensorflow | ||||

| { | { | ||||

| @@ -15,12 +19,10 @@ namespace Tensorflow | |||||

| bool preserve_cardinality = false, | bool preserve_cardinality = false, | ||||

| bool use_legacy_function = false) : base(input_dataset) | bool use_legacy_function = false) : base(input_dataset) | ||||

| { | { | ||||

| foreach(var input in input_dataset) | |||||

| { | |||||

| var data = map_func(input.Item1); | |||||

| } | |||||

| var func = new ConcreteFunction(map_func, input_dataset.element_spec[0].dtype); | |||||

| variant_tensor = ops.map_dataset(input_dataset.variant_tensor, | variant_tensor = ops.map_dataset(input_dataset.variant_tensor, | ||||

| func, | |||||

| output_types, | output_types, | ||||

| output_shapes); | output_shapes); | ||||

| } | } | ||||

+ 10

- 0

src/TensorFlowNET.Core/Data/TensorSliceDataset.cs

| @@ -11,6 +11,16 @@ namespace Tensorflow.Data | |||||

| { | { | ||||

| public class TensorSliceDataset : DatasetSource | public class TensorSliceDataset : DatasetSource | ||||

| { | { | ||||

| public TensorSliceDataset(string[] array) | |||||

| { | |||||

| var element = tf.constant(array); | |||||

| _tensors = new[] { element }; | |||||

| var batched_spec = new[] { element.ToTensorSpec() }; | |||||

| structure = batched_spec.Select(x => x._unbatch()).ToArray(); | |||||

| variant_tensor = ops.tensor_slice_dataset(_tensors, output_shapes); | |||||

| } | |||||

| public TensorSliceDataset(NDArray array) | public TensorSliceDataset(NDArray array) | ||||

| { | { | ||||

| var element = tf.constant(array); | var element = tf.constant(array); | ||||

+ 5

- 1

src/TensorFlowNET.Core/Eager/EagerRunner.TFE_FastPathExecute.cs

| @@ -6,6 +6,7 @@ using static Tensorflow.Binding; | |||||

| using Tensorflow.Util; | using Tensorflow.Util; | ||||

| using System.Runtime.InteropServices; | using System.Runtime.InteropServices; | ||||

| using Tensorflow.Contexts; | using Tensorflow.Contexts; | ||||

| using Tensorflow.Functions; | |||||

| namespace Tensorflow.Eager | namespace Tensorflow.Eager | ||||

| { | { | ||||

| @@ -385,7 +386,10 @@ namespace Tensorflow.Eager | |||||

| status.Check(true); | status.Check(true); | ||||

| break; | break; | ||||

| case TF_AttrType.TF_ATTR_FUNC: | case TF_AttrType.TF_ATTR_FUNC: | ||||

| c_api.TFE_OpSetAttrFunctionName(op, key, value.ToString(), value.ToString().Length); | |||||

| if (value is ConcreteFunction func) | |||||

| c_api.TFE_OpSetAttrFunctionName(op, key, func.Name, func.Name.Length); | |||||

| else | |||||

| throw new NotImplementedException("TF_AttrType.TF_ATTR_FUNC"); | |||||

| break; | break; | ||||

| default: | default: | ||||

| throw new NotImplementedException($"SetOpAttrScalar for {type}"); | throw new NotImplementedException($"SetOpAttrScalar for {type}"); | ||||

+ 1

- 1

src/TensorFlowNET.Core/Eager/EagerTensor.Creation.cs

| @@ -70,8 +70,8 @@ namespace Tensorflow.Eager | |||||

| protected override void DisposeUnmanagedResources(IntPtr handle) | protected override void DisposeUnmanagedResources(IntPtr handle) | ||||

| { | { | ||||

| base.DisposeUnmanagedResources(handle); | |||||

| //print($"deleting DeleteTensorHandle {Id} {_handle.ToString("x16")}"); | //print($"deleting DeleteTensorHandle {Id} {_handle.ToString("x16")}"); | ||||

| c_api.TF_DeleteTensor(_handle); | |||||

| } | } | ||||

| } | } | ||||

| } | } | ||||

+ 6

- 0

src/TensorFlowNET.Core/Eager/EagerTensor.cs

| @@ -19,6 +19,12 @@ namespace Tensorflow.Eager | |||||

| public override int rank => c_api.TFE_TensorHandleNumDims(EagerTensorHandle, tf.Status.Handle); | public override int rank => c_api.TFE_TensorHandleNumDims(EagerTensorHandle, tf.Status.Handle); | ||||

| public override void set_shape(TensorShape shape) | |||||

| { | |||||

| if (!shape.is_compatible_with(this.shape)) | |||||

| throw new ValueError($"Tensor's shape is not compatible."); | |||||

| } | |||||

| public static int GetRank(IntPtr handle) | public static int GetRank(IntPtr handle) | ||||

| { | { | ||||

| var tfe_tensor_handle = c_api.TFE_EagerTensorHandle(handle); | var tfe_tensor_handle = c_api.TFE_EagerTensorHandle(handle); | ||||

+ 31

- 0

src/TensorFlowNET.Core/Eager/c_api.eager.cs

| @@ -78,6 +78,37 @@ namespace Tensorflow | |||||

| [DllImport(TensorFlowLibName)] | [DllImport(TensorFlowLibName)] | ||||

| public static extern SafeContextHandle TFE_NewContext(SafeContextOptionsHandle opts, SafeStatusHandle status); | public static extern SafeContextHandle TFE_NewContext(SafeContextOptionsHandle opts, SafeStatusHandle status); | ||||

| /// <summary> | |||||

| /// Adds a function (created from TF_GraphToFunction or | |||||

| /// TF_FunctionImportFunctionDef) to the context, allowing it to be executed with | |||||

| /// TFE_Execute by creating an op with the same name as the function. | |||||

| /// </summary> | |||||

| /// <param name="ctx"></param> | |||||

| /// <param name="function"></param> | |||||

| /// <param name="status"></param> | |||||

| [DllImport(TensorFlowLibName)] | |||||

| public static extern void TFE_ContextAddFunction(SafeContextHandle ctx, IntPtr function, SafeStatusHandle status); | |||||

| /// <summary> | |||||

| /// Removes a function from the context. Once removed, you can no longer | |||||

| /// TFE_Execute it or TFE_Execute any TFE_Op which has it as an attribute or any | |||||

| /// other function which calls it as an attribute. | |||||

| /// </summary> | |||||

| /// <param name="ctx"></param> | |||||

| /// <param name="name"></param> | |||||

| /// <param name="status"></param> | |||||

| [DllImport(TensorFlowLibName)] | |||||

| public static extern void TFE_ContextRemoveFunction(SafeContextHandle ctx, string name, SafeStatusHandle status); | |||||

| /// <summary> | |||||

| /// Checks whether a function is registered under `name`. | |||||

| /// </summary> | |||||

| /// <param name="ctx"></param> | |||||

| /// <param name="name"></param> | |||||

| /// <returns></returns> | |||||

| [DllImport(TensorFlowLibName)] | |||||

| public static extern bool TFE_ContextHasFunction(SafeContextHandle ctx, string name); | |||||

| [DllImport(TensorFlowLibName)] | [DllImport(TensorFlowLibName)] | ||||

| public static extern void TFE_ContextStartStep(SafeContextHandle ctx); | public static extern void TFE_ContextStartStep(SafeContextHandle ctx); | ||||

+ 58

- 0

src/TensorFlowNET.Core/Functions/ConcreteFunction.cs

| @@ -0,0 +1,58 @@ | |||||

| using System; | |||||

| using System.Collections.Generic; | |||||

| using System.Linq; | |||||

| using System.Text; | |||||

| using Tensorflow.Graphs; | |||||

| using static Tensorflow.Binding; | |||||

| namespace Tensorflow.Functions | |||||

| { | |||||

| /// <summary> | |||||

| /// | |||||

| /// </summary> | |||||

| public class ConcreteFunction : IDisposable | |||||

| { | |||||

| public string Name => _handle == IntPtr.Zero ? string.Empty : c_api.StringPiece(c_api.TF_FunctionName(_handle)); | |||||

| IntPtr _handle; | |||||

| public ConcreteFunction(Func<Tensor, Tensor> func, TF_DataType dtype) | |||||

| { | |||||

| string func_name = $"autograph_{Guid.NewGuid()}_{func.Method.Name}"; | |||||

| tf.compat.v1.disable_eager_execution(); | |||||

| // IntPtr func_handle; | |||||

| using (var graph = new FuncGraph(func_name)) | |||||

| { | |||||

| graph.as_default(); | |||||

| var input = tf.placeholder(dtype); | |||||

| var output = func(input); | |||||

| var opers = graph._nodes_by_name.Values.Select(x => x as Operation).ToArray(); | |||||

| _handle = graph.ToGraph(opers, | |||||

| new Operation[] { input }, | |||||

| new Operation[] { output }, | |||||

| null); | |||||

| } | |||||

| tf.enable_eager_execution(); | |||||

| } | |||||

| public Tensor Execute(Tensor arg) | |||||

| { | |||||

| var result = tf.Runner.TFE_Execute(tf.Context, | |||||

| tf.Context.DeviceName, | |||||

| Name, | |||||

| new[] { arg }, | |||||

| null, | |||||

| 1); | |||||

| return result[0]; | |||||

| } | |||||

| public void Dispose() | |||||

| { | |||||

| c_api.TFE_ContextRemoveFunction(tf.Context.Handle, Name, tf.Status.Handle); | |||||

| c_api.TF_DeleteFunction(_handle); | |||||

| } | |||||

| } | |||||

| } | |||||
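ConcreteFunction registers its traced body with the eager context under a generated name, calls it by that name in `Execute`, and unregisters it in `Dispose`. The sketch below illustrates that lifecycle in plain C# so it runs without the native library. The `library` dictionary is a hypothetical stand-in for the eager context's function table (`TFE_ContextAddFunction`, `TFE_ContextHasFunction`, `TFE_ContextRemoveFunction`). The local functions `Register` and `Execute` are also illustrative and are not part of TensorFlow.NET.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the eager context's function library
// (TFE_ContextAddFunction / TFE_ContextHasFunction / TFE_ContextRemoveFunction).
var library = new Dictionary<string, Func<int, int>>();

// Constructor step: register the body under a unique name (same format as the diff).
string Register(Func<int, int> func)
{
    string name = $"autograph_{Guid.NewGuid()}_{func.Method.Name}";
    library.Add(name, func);
    return name;
}

// Execute step: the function is looked up and invoked by name, not by delegate.
int Execute(string name, int arg) =>
    library.TryGetValue(name, out var body)
        ? body(arg)
        : throw new InvalidOperationException($"'{name}' is not registered");

string square = Register(x => x * x);
try
{
    Console.WriteLine(Execute(square, 3));          // 9
    Console.WriteLine(library.ContainsKey(square)); // True
}
finally
{
    // Dispose step: unregister so the library keeps no stale entries.
    library.Remove(square);
}
Console.WriteLine(library.ContainsKey(square));     // False
```

In the diff, the `try`/`finally` pair corresponds to wrapping a `ConcreteFunction` in a `using` statement, which calls `Dispose` and removes the function from the context.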

src/TensorFlowNET.Core/Functions/c_api.function.cs (+4, -1)

| @@ -21,6 +21,9 @@ namespace Tensorflow | |||||

| { | { | ||||

| public partial class c_api | public partial class c_api | ||||

| { | { | ||||

| [DllImport(TensorFlowLibName)] | |||||

| public static extern void TF_DeleteFunction(IntPtr handle); | |||||

| /// <summary> | /// <summary> | ||||

| /// Write out a serialized representation of `func` (as a FunctionDef protocol | /// Write out a serialized representation of `func` (as a FunctionDef protocol | ||||

| /// message) to `output_func_def` (allocated by TF_NewBuffer()). | /// message) to `output_func_def` (allocated by TF_NewBuffer()). | ||||

| @@ -39,7 +42,7 @@ namespace Tensorflow | |||||

| int num_opers, IntPtr[] opers, | int num_opers, IntPtr[] opers, | ||||

| int ninputs, TF_Output[] inputs, | int ninputs, TF_Output[] inputs, | ||||

| int noutputs, TF_Output[] outputs, | int noutputs, TF_Output[] outputs, | ||||

| IntPtr output_names, | |||||

| string[] output_names, | |||||

| IntPtr opts, | IntPtr opts, | ||||

| string description, | string description, | ||||

| SafeStatusHandle status); | SafeStatusHandle status); | ||||

src/TensorFlowNET.Core/Gradients/gradients_util.cs (+1, -0)

| @@ -311,6 +311,7 @@ namespace Tensorflow | |||||

| while (queue.Count > 0) | while (queue.Count > 0) | ||||

| { | { | ||||

| var op = queue.Dequeue(); | var op = queue.Dequeue(); | ||||

| if (reached_ops.Contains(op)) | if (reached_ops.Contains(op)) | ||||

| { | { | ||||

| between_ops.Add(op); | between_ops.Add(op); | ||||

src/TensorFlowNET.Core/Graphs/AutoGraph.cs (+45, -0)

| @@ -0,0 +1,45 @@ | |||||

| using System; | |||||

| using System.Collections.Generic; | |||||

| using System.Linq; | |||||

| using System.Linq.Expressions; | |||||

| using System.Text; | |||||

| using static Tensorflow.Binding; | |||||

| namespace Tensorflow.Graphs | |||||

| { | |||||

| public class AutoGraph | |||||

| { | |||||

| public Func<Tensor, Tensor, Tensor> to_graph(Func<Tensor, Tensor, Tensor> func) | |||||

| { | |||||

| string func_name = $"autograph_{Guid.NewGuid()}_{func.Method.Name}"; | |||||

| tf.compat.v1.disable_eager_execution(); | |||||

| // IntPtr func_handle; | |||||

| using(var graph = new FuncGraph(func_name)) | |||||

| { | |||||

| graph.as_default(); | |||||

| var input1 = tf.placeholder(tf.int32); | |||||

| var input2 = tf.placeholder(tf.int32); | |||||

| var output = func(input1, input2); | |||||

| var opers = graph._nodes_by_name.Values.Select(x => x as Operation).ToArray(); | |||||

| var func_handle = graph.ToGraph(opers, | |||||

| new Operation[] { input1, input2 }, | |||||

| new Operation[] { output }, | |||||

| null); | |||||

| } | |||||

| tf.enable_eager_execution(); | |||||

| return (Tensor a, Tensor b) => | |||||

| { | |||||

| var result = tf.Runner.TFE_Execute(tf.Context, | |||||

| tf.Context.DeviceName, | |||||

| func_name, | |||||

| new[] { a, b }, | |||||

| null, | |||||

| 1); | |||||

| return result[0]; | |||||

| }; | |||||

| } | |||||

| } | |||||

| } | |||||
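`AutoGraph.to_graph` builds each function name from `Guid.NewGuid()` and the delegate's method name. As a result, calling `to_graph` twice on the same delegate registers two separately named functions; the method does not reuse an existing one. The sketch below reproduces only the naming step in plain C#. `MakeName` is a hypothetical helper that copies the format string from the diff.

```csharp
using System;

Func<int, int, int> add = (a, b) => a + b;

string first = MakeName(add);
string second = MakeName(add);

Console.WriteLine(first == second);                   // False: each call gets a fresh GUID
Console.WriteLine(first.EndsWith(add.Method.Name));   // True

// Same format string as AutoGraph.to_graph in the diff (hypothetical helper).
static string MakeName(Delegate func) => $"autograph_{Guid.NewGuid()}_{func.Method.Name}";
```

For a lambda, `Method.Name` is a compiler-generated identifier, so the GUID is what makes each name unique.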

src/TensorFlowNET.Core/Graphs/AutoGraphAttribute.cs (+80, -0)

| @@ -0,0 +1,80 @@ | |||||

| using MethodBoundaryAspect.Fody.Attributes; | |||||

| using System; | |||||

| using System.Collections.Generic; | |||||

| using System.Linq; | |||||

| using System.Text; | |||||

| using Tensorflow.Eager; | |||||

| using static Tensorflow.Binding; | |||||

| namespace Tensorflow.Graphs | |||||

| { | |||||

| [AllowChangingInputArguments] | |||||

| public sealed class AutoGraphAttribute : OnMethodBoundaryAspect | |||||

| { | |||||

| FuncGraph graph; | |||||

| Tensor[] originalInputs; | |||||

| string func_name; | |||||

| static Dictionary<string, Func<Tensor[], Tensor>> functions = new Dictionary<string, Func<Tensor[], Tensor>>(); | |||||

| public override void OnEntry(MethodExecutionArgs args) | |||||

| { | |||||

| func_name = $"autograph_{args.Instance}.{args.Method.Name}"; | |||||

| if (functions.ContainsKey(func_name)) | |||||

| { | |||||

| args.ReturnValue = functions[func_name](args.Arguments.Select(x => x as Tensor).ToArray()); | |||||

| args.FlowBehavior = FlowBehavior.Return; | |||||

| return; | |||||

| } | |||||

| tf.compat.v1.disable_eager_execution(); | |||||

| // make function as an Operation by autograph | |||||

| graph = new FuncGraph(func_name); | |||||

| graph.as_default(); | |||||

| originalInputs = new Tensor[args.Arguments.Length]; | |||||

| // convert args to placeholder | |||||

| for (var i = 0; i < args.Arguments.Length; i++) | |||||

| { | |||||

| if (args.Arguments[i] is EagerTensor tensor) | |||||

| { | |||||

| originalInputs[i] = tensor; | |||||

| args.Arguments[i] = tf.placeholder(tensor.dtype, shape: tensor.TensorShape); | |||||

| } | |||||

| } | |||||

| } | |||||

| public override void OnExit(MethodExecutionArgs args) | |||||

| { | |||||

| var output = (Tensor)args.ReturnValue; | |||||

| var inputs = args.Arguments.Select(x => x as Tensor).ToArray(); | |||||

| var opers = graph._nodes_by_name.Values.Select(x => x as Operation).ToArray(); | |||||

| graph.ToGraph(opers, | |||||

| inputs.Select(x => x.op).ToArray(), | |||||

| new Operation[] { output.op }, | |||||

| null); | |||||

| graph.Dispose(); | |||||

| tf.enable_eager_execution(); | |||||

| Func<Tensor[], Tensor> function = (x) => | |||||

| { | |||||

| var result = tf.Runner.TFE_Execute(tf.Context, | |||||

| tf.Context.DeviceName, | |||||

| func_name, | |||||

| x, | |||||

| null, | |||||

| 1); | |||||

| return result[0]; | |||||

| }; | |||||

| // cache function. | |||||

| functions[func_name] = function; | |||||

| // run function | |||||

| args.ReturnValue = function(originalInputs); | |||||

| } | |||||

| } | |||||

| } | |||||
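AutoGraphAttribute traces the decorated method on its first call. `OnEntry` swaps eager inputs for placeholders, and `OnExit` builds and registers the graph and caches a delegate in a static dictionary. On later calls with the same key, `OnEntry` returns the cached result directly through `FlowBehavior.Return`. The sketch below models only that caching behaviour in plain C# and is not the Fody aspect itself. `functions`, `traceCount`, and `CallTraced` are hypothetical names, and the "trace" step is represented by a counter.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for AutoGraphAttribute's static `functions` cache.
var functions = new Dictionary<string, Func<int, int>>();
int traceCount = 0;

// First call for a key "traces" and caches; later calls short-circuit,
// similar to OnEntry setting FlowBehavior.Return.
int CallTraced(object instance, string methodName, Func<int, int> body, int arg)
{
    // Same key shape as the diff: the instance is formatted via ToString().
    string key = $"autograph_{instance}.{methodName}";
    if (!functions.TryGetValue(key, out var cached))
    {
        traceCount++;            // stand-in for building and registering a FuncGraph
        cached = body;
        functions[key] = cached;
    }
    return cached(arg);
}

var a = new object();
var b = new object();
Console.WriteLine(CallTraced(a, "Square", x => x * x, 4)); // 16 (traced)
Console.WriteLine(CallTraced(a, "Square", x => x * x, 5)); // 25 (cached)
Console.WriteLine(CallTraced(b, "Square", x => x * x, 6)); // 36 (cached: same key as a)
Console.WriteLine(traceCount);                             // 1
```

Because the default `object.ToString()` returns the type name, distinct instances of a class that does not override it produce the same key. They therefore share one cached function, and the same is true of the key built in `OnEntry`.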

src/TensorFlowNET.Core/Graphs/FuncGraph.cs (+61, -0)

| @@ -0,0 +1,61 @@ | |||||

| using System; | |||||

| using System.Collections.Generic; | |||||

| using System.Linq; | |||||

| using System.Runtime.InteropServices; | |||||

| using System.Text; | |||||

| using Tensorflow.Functions; | |||||

| using static Tensorflow.Binding; | |||||

| namespace Tensorflow.Graphs | |||||

| { | |||||

| /// <summary> | |||||

| /// Graph representing a function body. | |||||

| /// </summary> | |||||

| public class FuncGraph : Graph | |||||

| { | |||||

| List<Operation> inputs; | |||||

| List<Operation> outputs; | |||||

| Graph outer_graph; | |||||

| string func_name; | |||||

| IntPtr func_handle; | |||||

| public string FuncName => c_api.StringPiece(c_api.TF_FunctionName(func_handle)); | |||||

| /// <summary> | |||||

| /// Construct a new FuncGraph. | |||||

| /// </summary> | |||||

| public FuncGraph(string name) : base() | |||||

| { | |||||

| outer_graph = ops.get_default_graph(); | |||||

| func_name = name; | |||||

| } | |||||

| public IntPtr ToGraph(Operation[] opers, | |||||

| Operation[] inputs, Operation[] outputs, | |||||

| string[] output_names) | |||||

| { | |||||

| using var status = new Status(); | |||||

| func_handle = c_api.TF_GraphToFunction(_handle, | |||||

| func_name, | |||||

| false, | |||||

| opers.Length, | |||||

| opers.Select(x => (IntPtr)x).ToArray(), | |||||

| inputs.Length, | |||||

| inputs.Select(x => new TF_Output(x, 0)).ToArray(), | |||||

| outputs.Length, | |||||

| outputs.Select(x => new TF_Output(x, 0)).ToArray(), | |||||

| output_names == null || output_names.Length == 0 ? null : output_names, | |||||

| IntPtr.Zero, | |||||

| null, | |||||

| status.Handle); | |||||

| status.Check(true); | |||||

| c_api.TF_GraphCopyFunction(outer_graph, func_handle, IntPtr.Zero, status.Handle); | |||||

| status.Check(true); | |||||

| c_api.TFE_ContextAddFunction(tf.Context.Handle, func_handle, status.Handle); | |||||

| status.Check(true); | |||||

| return func_handle; | |||||

| } | |||||

| } | |||||

| } | |||||

src/TensorFlowNET.Core/Graphs/c_api.graph.cs (+1, -1)

| @@ -321,6 +321,6 @@ namespace Tensorflow | |||||

| /// <param name="status">TF_Status*</param> | /// <param name="status">TF_Status*</param> | ||||

| [DllImport(TensorFlowLibName)] | [DllImport(TensorFlowLibName)] | ||||

| public static extern void UpdateEdge(IntPtr graph, TF_Output new_src, TF_Input dst, SafeStatusHandle status); | |||||

| public static extern void TF_UpdateEdge(IntPtr graph, TF_Output new_src, TF_Input dst, SafeStatusHandle status); | |||||

| } | } | ||||

| } | } | ||||

+ 1

- 1