100 changed files with 12472 additions and 299 deletions

-

+9 -0.codacy.yaml

-

+1 -0.dockerignore

-

+125 -81.gitignore

-

+75 -0.pyup.yml

-

+14 -0.readthedocs.yml

-

+119 -0.travis.yml

-

+585 -0CHANGELOG.md

-

+199 -0CONTRIBUTING.md

-

+0 -208LICENSE

-

+211 -0LICENSE.rst

-

+35 -0Makefile

-

+171 -10README.md

-

+201 -0README.rst

-

+4 -0docker/DockerLint.bat

-

+35 -0docker/Dockerfile

-

+14 -0docker/docs/Dockerfile

-

+15 -0docker/docs/sources.list.ustc

-

+68 -0docker/pypi_list.py

-

+37 -0docker/version_prefix.py

-

+225 -0docs/Makefile

-

+8 -0docs/_static/fix_rtd.css

-

+469 -0docs/conf.py

-

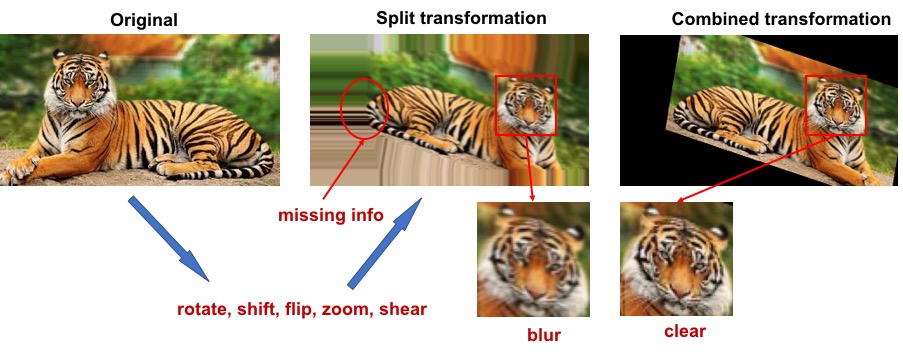

BINdocs/images/affine_transform_comparison.jpg

-

BINdocs/images/affine_transform_why.jpg

-

+100 -0docs/index.rst

-

+281 -0docs/make.bat

-

+73 -0docs/modules/activation.rst

-

+20 -0docs/modules/array_ops.rst

-

+6 -0docs/modules/cli.rst

-

+100 -0docs/modules/cost.rst

-

+260 -0docs/modules/db.rst

-

+23 -0docs/modules/distributed.rst

-

+295 -0docs/modules/files.rst

-

+51 -0docs/modules/initializers.rst

-

+36 -0docs/modules/iterate.rst

-

+701 -0docs/modules/layers.rst

-

+59 -0docs/modules/models.rst

-

+148 -0docs/modules/nlp.rst

-

+19 -0docs/modules/optimizers.rst

-

+641 -0docs/modules/prepro.rst

-

+33 -0docs/modules/rein.rst

-

+73 -0docs/modules/utils.rst

-

+76 -0docs/modules/visualize.rst

-

+184 -0docs/user/contributing.rst

-

+121 -0docs/user/examples.rst

-

+79 -0docs/user/faq.rst

-

+76 -0docs/user/get_involved.rst

-

+217 -0docs/user/get_start_advance.rst

-

+249 -0docs/user/get_start_model.rst

-

+210 -0docs/user/installation.rst

-

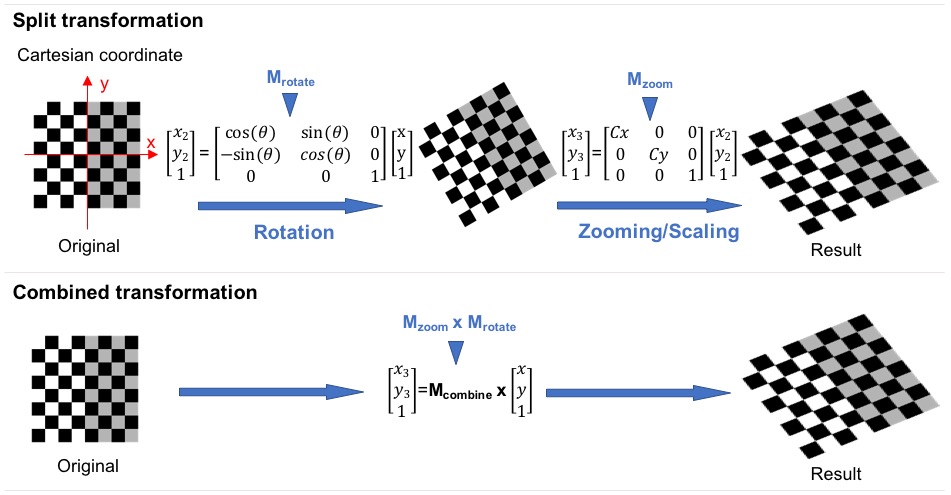

BINdocs/user/my_figs/basic_seq2seq.png

-

BINdocs/user/my_figs/img_tensorlayer.png

-

BINdocs/user/my_figs/img_tlayer.png

-

BINdocs/user/my_figs/img_tlayer_big.png

-

BINdocs/user/my_figs/img_tunelayer.png

-

BINdocs/user/my_figs/karpathy_rnn.jpeg

-

BINdocs/user/my_figs/mnist.jpeg

-

BINdocs/user/my_figs/pong_game.jpeg

-

BINdocs/user/my_figs/seq2seq.png

-

BINdocs/user/my_figs/tl_black_logo.png

-

BINdocs/user/my_figs/tl_transparent_logo.png

-

BINdocs/user/my_figs/tl_white_logo.png

-

BINdocs/user/my_figs/tsne.png

-

BINdocs/user/my_figs/word2vec_basic.pdf

-

+43 -0examples/basic_tutorials/tutorial_LayerList.py

-

+166 -0examples/basic_tutorials/tutorial_cifar10_cnn_dynamic_MS_backend.py

-

+186 -0examples/basic_tutorials/tutorial_cifar10_cnn_dynamic_TF_backend.py

-

+100 -0examples/basic_tutorials/tutorial_mnist_mlp_dynamci_dragon.py

-

+92 -0examples/basic_tutorials/tutorial_mnist_mlp_dynamic_MS_backend.py

-

+91 -0examples/basic_tutorials/tutorial_mnist_mlp_dynamic_TF_backend.py

-

+117 -0examples/basic_tutorials/tutorial_mnist_mlp_mindspore.py

-

+68 -0examples/basic_tutorials/tutorial_mnist_simple.py

-

+211 -0examples/basic_tutorials/tutorial_nested_usage_of_Layer.py

-

+57 -0examples/basic_tutorials/tutorial_paddle_tensorlayer_mlp.py

-

+0 -0examples/model_zoo/__init__.py

-

+287 -0examples/model_zoo/common.py

-

+1003 -0examples/model_zoo/imagenet_classes.py

-

+80 -0examples/model_zoo/model/coco.names

-

+541 -0examples/model_zoo/model/weights_2.txt

-

+541 -0examples/model_zoo/model/weights_3.txt

-

+541 -0examples/model_zoo/model/yolov4_weights3_config.txt

-

+541 -0examples/model_zoo/model/yolov4_weights_config.txt

-

+32 -0examples/model_zoo/pretrained_resnet50.py

-

+29 -0examples/model_zoo/pretrained_vgg16.py

-

+28 -0examples/model_zoo/pretrained_yolov4.py

-

+225 -0examples/model_zoo/resnet.py

-

+347 -0examples/model_zoo/vgg.py

-

+376 -0examples/model_zoo/yolo.py

-

BINimg/TL_gardener.png

-

BINimg/TL_gardener.psd

-

BINimg/awesome-mentioned.png

-

BINimg/github_mascot.png

-

BINimg/img_tensorflow.png

-

BINimg/img_tensorlayer.png

-

BINimg/img_tlayer1.png

-

BINimg/join_slack.png

-

BINimg/join_slack.psd

-

+19 -0img/medium/Readme.md

-

BINimg/medium/medium_header.png

-

BINimg/medium/medium_header.psd

+ 9

- 0

.codacy.yaml


| @@ -0,0 +1,9 @@ | |||

| # https://support.codacy.com/hc/en-us/articles/115002130625-Codacy-Configuration-File | |||

| --- | |||

| engines: | |||

| bandit: | |||

| enabled: false # FIXME: make it work | |||

| exclude_paths: | |||

| - scripts/* | |||

| - setup.py | |||

| - docker/**/* | |||

+ 1

- 0

.dockerignore


| @@ -0,0 +1 @@ | |||

| .git | |||

+ 125

- 81

.gitignore


| @@ -1,87 +1,131 @@ | |||

| # ---> Android | |||

| # Built application files | |||

| *.apk | |||

| *.aar | |||

| *.ap_ | |||

| *.aab | |||

| # Files for the ART/Dalvik VM | |||

| *.dex | |||

| # Java class files | |||

| *.class | |||

| # Generated files | |||

| bin/ | |||

| gen/ | |||

| out/ | |||

| # Uncomment the following line in case you need and you don't have the release build type files in your app | |||

| # release/ | |||

| # Gradle files | |||

| .gradle/ | |||

| # Byte-compiled / optimized / DLL files | |||

| __pycache__/ | |||

| *.py[cod] | |||

| *$py.class | |||

| # C extensions | |||

| *.so | |||

| # Distribution / packaging | |||

| .Python | |||

| build/ | |||

| develop-eggs/ | |||

| dist/ | |||

| downloads/ | |||

| eggs/ | |||

| .eggs/ | |||

| lib/ | |||

| lib64/ | |||

| parts/ | |||

| sdist/ | |||

| var/ | |||

| wheels/ | |||

| *.egg-info/ | |||

| .installed.cfg | |||

| *.egg | |||

| MANIFEST | |||

| *~ | |||

| # PyInstaller | |||

| # Usually these files are written by a python script from a template | |||

| # before PyInstaller builds the exe, so as to inject date/other infos into it. | |||

| *.manifest | |||

| *.spec | |||

| # Installer logs | |||

| pip-log.txt | |||

| pip-delete-this-directory.txt | |||

| # Unit test / coverage reports | |||

| htmlcov/ | |||

| .tox/ | |||

| .coverage | |||

| .coverage.* | |||

| .cache | |||

| nosetests.xml | |||

| coverage.xml | |||

| *.cover | |||

| .hypothesis/ | |||

| .pytest_cache/ | |||

| # Translations | |||

| *.mo | |||

| *.pot | |||

| # Django stuff: | |||

| *.log | |||

| local_settings.py | |||

| db.sqlite3 | |||

| # Local configuration file (sdk path, etc) | |||

| local.properties | |||

| # Flask stuff: | |||

| instance/ | |||

| .webassets-cache | |||

| # Proguard folder generated by Eclipse | |||

| proguard/ | |||

| # Scrapy stuff: | |||

| .scrapy | |||

| # Log Files | |||

| *.log | |||

| # Sphinx documentation | |||

| docs/_build/ | |||

| docs/test_build/ | |||

| docs/build_test/ | |||

| # PyBuilder | |||

| target/ | |||

| # Jupyter Notebook | |||

| .ipynb_checkpoints | |||

| # pyenv | |||

| .python-version | |||

| # celery beat schedule file | |||

| celerybeat-schedule | |||

| # SageMath parsed files | |||

| *.sage.py | |||

| # Environments | |||

| .env | |||

| .venv | |||

| env/ | |||

| venv/ | |||

| ENV/ | |||

| env.bak/ | |||

| venv.bak/ | |||

| venv_/ | |||

| venv2/ | |||

| venv3/ | |||

| venv_doc/ | |||

| venv_py2/ | |||

| # Spyder project settings | |||

| .spyderproject | |||

| .spyproject | |||

| # Rope project settings | |||

| .ropeproject | |||

| # mkdocs documentation | |||

| /site | |||

| # mypy | |||

| .mypy_cache/ | |||

| # IDE Specific directories | |||

| .DS_Store | |||

| .idea | |||

| .vscode/ | |||

| # TensorLayer Directories | |||

| checkpoints | |||

| data/ | |||

| lib_win/ | |||

| # Android Studio Navigation editor temp files | |||

| .navigation/ | |||

| # Android Studio captures folder | |||

| captures/ | |||

| # IntelliJ | |||

| *.iml | |||

| .idea/workspace.xml | |||

| .idea/tasks.xml | |||

| .idea/gradle.xml | |||

| .idea/assetWizardSettings.xml | |||

| .idea/dictionaries | |||

| .idea/libraries | |||

| # Android Studio 3 in .gitignore file. | |||

| .idea/caches | |||

| .idea/modules.xml | |||

| # Comment next line if keeping position of elements in Navigation Editor is relevant for you | |||

| .idea/navEditor.xml | |||

| # Keystore files | |||

| # Uncomment the following lines if you do not want to check your keystore files in. | |||

| #*.jks | |||

| #*.keystore | |||

| # External native build folder generated in Android Studio 2.2 and later | |||

| .externalNativeBuild | |||

| .cxx/ | |||

| # Google Services (e.g. APIs or Firebase) | |||

| # google-services.json | |||

| # Freeline | |||

| freeline.py | |||

| freeline/ | |||

| freeline_project_description.json | |||

| # fastlane | |||

| fastlane/report.xml | |||

| fastlane/Preview.html | |||

| fastlane/screenshots | |||

| fastlane/test_output | |||

| fastlane/readme.md | |||

| # Version control | |||

| vcs.xml | |||

| # lint | |||

| lint/intermediates/ | |||

| lint/generated/ | |||

| lint/outputs/ | |||

| lint/tmp/ | |||

| # lint/reports/ | |||

| # Custom Scripts | |||

| update_tl.bat | |||

| update_tl.py | |||

| # Data Files and ByteCode files | |||

| *.gz | |||

| *.npz | |||
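The glob-style entries in this `.gitignore` (for example `*.py[cod]`) can be approximated with Python's `fnmatch` module. The following is a minimal sketch, not Git's actual matching logic: Git applies extra rules for directories, `/` anchoring and `**` that `fnmatch` does not implement. The helper name `matches_any` and the sample file names are made up for illustration.

```python
from fnmatch import fnmatchcase

# A few file-level patterns copied from the .gitignore above.
PATTERNS = ["*.py[cod]", "*$py.class", "*.gz", "*.npz"]


def matches_any(name, patterns=PATTERNS):
    """Return True if the bare file name matches at least one glob pattern.

    Approximation only: directory patterns such as `build/` and anchored
    patterns such as `/site` need Git's own rules and are not handled here.
    """
    return any(fnmatchcase(name, pattern) for pattern in patterns)


if __name__ == "__main__":
    # Hypothetical file names, chosen to exercise each pattern.
    for sample in ["model.pyc", "model.py", "Foo$py.class", "weights.npz", "mnist.tar.gz"]:
        print(sample, matches_any(sample))
```

The character class `[cod]` requires exactly one of `c`, `o` or `d` after `.py`, so compiled files such as `model.pyc` are ignored while the `model.py` source is still tracked.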

+ 75

- 0

.pyup.yml


| @@ -0,0 +1,75 @@ | |||

| ############################################################################ | |||

| # see https://pyup.io/docs/configuration/ for all available options # | |||

| ############################################################################ | |||

| # configure updates globally | |||

| # default: all | |||

| # allowed: all, insecure, False | |||

| update: all | |||

| # configure dependency pinning globally | |||

| # default: True | |||

| # allowed: True, False | |||

| pin: False | |||

| # set the default branch | |||

| # default: empty, the default branch on GitHub | |||

| branch: master | |||

| # update schedule | |||

| # default: empty | |||

| # allowed: "every day", "every week", .. | |||

| schedule: "every day" | |||

| # search for requirement files | |||

| # default: True | |||

| # allowed: True, False | |||

| search: False | |||

| # Specify requirement files by hand, default is empty | |||

| # default: empty | |||

| # allowed: list | |||

| requirements: | |||

| # Requirements for the library | |||

| - requirements/requirements.txt | |||

| # Requirements for the development | |||

| - requirements/requirements_tf_cpu.txt | |||

| # Requirements for the development | |||

| - requirements/requirements_tf_gpu.txt | |||

| # Not necessary, but recommended libraries | |||

| - requirements/requirements_extra.txt | |||

| # Requirements for contrib loggers | |||

| - requirements_contrib_loggers.txt | |||

| # Requirements for the db | |||

| - requirements/requirements_db.txt | |||

| # Requirements for the development | |||

| - requirements/requirements_dev.txt | |||

| # Requirements for building docs | |||

| - requirements/requirements_doc.txt | |||

| # Requirements for running unittests | |||

| - requirements/requirements_test.txt | |||

| # add a label to pull requests, default is not set | |||

| # requires private repo permissions, even on public repos | |||

| # default: empty | |||

| #label_prs: update | |||

| # configure the branch prefix the bot is using | |||

| # default: pyup- | |||

| branch_prefix: pyup- | |||

| # set a global prefix for PRs | |||

| # default: empty | |||

| pr_prefix: "PyUP - Dependency Update" | |||

| # allow to close stale PRs | |||

| # default: True | |||

| close_prs: True | |||

+ 14

- 0

.readthedocs.yml


| @@ -0,0 +1,14 @@ | |||

| # https://docs.readthedocs.io/en/latest/yaml-config.html | |||

| build: | |||

| image: latest # For python 3.6 | |||

| formats: | |||

| - epub | |||

| python: | |||

| version: 3.6 | |||

| requirements_file: | |||

| requirements/requirements_doc.txt | |||

+ 119

- 0

.travis.yml


| @@ -0,0 +1,119 @@ | |||

| # https://docs.travis-ci.com/user/languages/python/ | |||

| language: python | |||

| # https://docs.travis-ci.com/user/caching/#pip-cache | |||

| cache: | |||

| directories: | |||

| - $HOME/.cache/pip/wheels | |||

| addons: | |||

| apt: | |||

| update: false | |||

| branches: | |||

| only: | |||

| - master | |||

| - TensorLayer-2.x | |||

| - /^\d+\.\d+(\.\d+)?(\S*)?$/ | |||

| python: | |||

| - "3.6" | |||

| - "3.5" | |||

| # - "2.7" # TensorLayer 2.0 does not support python2 now | |||

| env: | |||

| # Backward compatibility is ensured for releases less than 1 year old. | |||

| # https://pypi.org/project/tensorflow/#history | |||

| matrix: | |||

| - _TF_VERSION=2.0.0-rc1 | |||

| # - _TF_VERSION=1.12.0 # Remove on Oct 22, 2019 | |||

| # - _TF_VERSION=1.11.0 # Remove on Sep 28, 2019 | |||

| # - _TF_VERSION=1.10.1 # Remove on Aug 24, 2019 | |||

| # - _TF_VERSION=1.9.0 # Remove on Jul 10, 2019 | |||

| # - _TF_VERSION=1.8.0 # Remove on Apr 28, 2019 | |||

| # - _TF_VERSION=1.7.1 # Remove on May 08, 2019 | |||

| # - _TF_VERSION=1.7.0 # Remove on Mar 29, 2019 | |||

| # - _TF_VERSION=1.6.0 # Remove on Mar 01, 2019 | |||

| global: | |||

| - PYPI_USER='jonathandekhtiar' | |||

| # See https://docs.travis-ci.com/user/encryption-keys/ for more details about secure keys. | |||

| ### == PYPI_PASSWORD === ### | |||

| ## To update: travis encrypt PYPI_PASSWORD=################################ | |||

| - secure: "fGIRDjfzzP9DhdDshgh/+bWTZ5Y0jTD4aR+gsT1TyAyc6N4f3RRlx70xZZwYMdQ+XC3no/q4na8UzhhuSM0hCCM1EaQ78WF1c6+FBScf4vYGoYgyJ1am+4gu54JXt+4f0bd+s6jyYBafJALUJp5fqHoxCUXqzjrOqGBBU2+JbL71Aaj8yhQuK0VPPABexsQPQM312Gvzg7hy9dh63J0Q02PqINn+CTcwq3gLH9Oua58zWQ7TaT0cdy/hzAc6Yxy3ajo2W5NU+nKROaaG9W57sa7K/v1dshDFFFST2DdGxm9i7vvfPsq0OWM6qWLsec/4mXJWsmai2ygZEv+IhaABb10c7spd2nl7oHFj2UGmldtO5W0zLb1KkCPWDPilFt3lvHM+OS/YaibquL5/5+yGj0LsRJrVyWoMBA8idcQeH4dvTAfySeFpO42VNwW5ez9JaEOh7bBp7naAA8c/fbNJJ5YEW4MEmOZ9dwFTohNNDiN+oITSrcXBS+jukbfTOmtCeYNUker+4G2YwII9cxHXbZeIMrTq9AqTfOVTAYCFaFHKbpSc1+HCyF7n5ZfNC00kBaw93XUnLRzSNKe5Ir791momYL8HecMN3OAI77bz26/pHSfzJnLntq9qx2nLBTnqDuSq5/pHvdZ8hyt+hTDxvF7HJIVMhbnkjoLPxmn4k/I=" | |||

| ### === GITHUB_PERSONAL_TOKEN === ### | |||

| ## To update: travis encrypt GITHUB_PERSONAL_TOKEN=################################ | |||

| - secure: "kMxg4WfTwhnYMD7WjYk71vgw7XlShPpANauKzfTL6oawDrpQRkBUai4uQwiL3kXVBuVv9rKKKZxxnyAm05iB5wGasPDhlFA1DPF0IbyU3pwQKlw2Xo5qtHdgxBnbms6JJ9z5b+hHCVg+LXYYeUw5qG01Osg5Ue6j1g2juQQHCod00FNuo3fe8ah/Y10Rem6CigH8ofosCrTvN2w1GaetJwVehRYf8JkPC6vQ+Yk8IIjHn2CaVJALbhuchVblxwH0NXXRen915BVBwWRQtsrvEVMXKu7A8sMHmvPz1u3rhXQfjpF2KeVOfy1ZnyiHcLE2HgAPuAGh4kxZAAA8ovmcaCbv8m64bm72BrQApSbt6OEtR9L1UeUwdEgi54FH1XFOHQ9dA6CpiGCyk3wAJZqO0/PkNYVLfb4gPLvnvBRwYBaPgUPvVNhidFu/oODENZmcf8g9ChtuC1GT70EYlVwhgDGqUY7/USZCEvIPe81UToqtIKgcgA+Is51XindumJVMiqTsjgdqeC/wZcw+y37TAjIvvXbtYxeqIKv9zh1JuZppqUhnf+OhI+HHFaY4iu7lQTs3C0WmoLskZAp9srwRtifnVFFkdYzngmPaSjWyko2qiS0cTdFJQB/ljqmnJdksacbv5OOa0Q4qZef/hW774nVx105FlkAIk70D2b5l2pA=" | |||

| ### === HYPERDASH_APIKEY === ### | |||

| ## To update: travis encrypt HYPERDASH_APIKEY=################################ | |||

| - secure: "ez9Ck1VpqWOd2PPu+CMWzd8R4aAIXbjKCk96PCKwWu8VXoHjaPkiy8Nn0LUzSlUg3nKdZmu2JSndwDMy3+lMLG7zE2WlGNY7MF5KM3GrvFpP3cxJQ6OuPcZcEH4j5KtBtNTrNqa8SWglqhc9mr66a92SD8Ydc4aMj6L9nbQvrsvVzIMmMy6weVlpBF35nweYCM8LxlsnqyPLleHPZo3o/k+hsTqQQbiMGjC78tqrGr56u7AjL2+D/m33+dfCGzFvMJFcpLQ5ldbcVU54i5e6V3xJ48P30QOGZaqG3fcpfZsyJEIWjykt6XFA8GfJjaVVbxdlr7zP7Vd9iWBuemnMEX3F9Cy/4x7LmX9PJfsVPC6FQnanDvsZSNO5hpmKe8BTpttJJvxgscOczV4jnI69OzqhSQeyChwtkqhIg1E/53XIO+uLJAAZsCkAco7tjGGXTKyv8ZlpSJwSqsLcmgpmQbfodCoMLcYenTxqKZv78e2B4tOPGQyS2bkSxAqhvAIam7RCq/yEvz5n2/mBFEGwP6OQFIdC7ypO2LyrOlLT7HJjCeYMeKSm+GOD3LW9oIy9QJZpG6N/zAAjnk9C2mYtWRQIBo4qdHjRvyDReevDexI8j0AXySblxREmQ7ZaT6KEDXXZSu5goTlaGm0g2HwAkKu9xYFV/bRtp6+i1mP7CQg=" | |||

| matrix: | |||

| include: | |||

| - python: '3.6' | |||

| env: | |||

| - _DOC_AND_YAPF_TEST=True | |||

| install: | |||

| - | | |||

| if [[ -v _DOC_AND_YAPF_TEST ]]; then | |||

| pip install tensorflow==2.0.0-rc1 | |||

| pip install yapf | |||

| pip install -e .[doc] | |||

| else | |||

| pip install tensorflow==$_TF_VERSION | |||

| pip install -e .[all_cpu_dev] | |||

| fi | |||

| script: | |||

| # unit tests | |||

| # https://docs.pytest.org/en/latest/ | |||

| - rm setup.cfg | |||

| - | | |||

| if [[ -v _DOC_AND_YAPF_TEST ]]; then | |||

| mv setup.travis_doc.cfg setup.cfg | |||

| else | |||

| mv setup.travis.cfg setup.cfg | |||

| fi | |||

| - pytest | |||

| before_deploy: | |||

| - python setup.py sdist | |||

| - python setup.py bdist_wheel | |||

| - python setup.py bdist_wheel --universal | |||

| - python setup.py egg_info | |||

| deploy: | |||

| # Documentation: https://docs.travis-ci.com/user/deployment/pypi/ | |||

| - provider: pypi | |||

| user: '$PYPI_USER' | |||

| password: '$PYPI_PASSWORD' | |||

| skip_cleanup: true | |||

| on: | |||

| tags: true | |||

| python: '3.6' | |||

| condition: '$_TF_VERSION = 2.0.0-rc1' | |||

| # condition: '$_TF_VERSION = 1.11.0' | |||

| # Documentation: https://docs.travis-ci.com/user/deployment/releases/ | |||

| - provider: releases | |||

| file: | |||

| - dist/* | |||

| - tensorlayer.egg-info/PKG-INFO | |||

| file_glob: true | |||

| skip_cleanup: true | |||

| api_key: '$GITHUB_PERSONAL_TOKEN' | |||

| on: | |||

| tags: true | |||

| python: '3.6' | |||

| condition: '$_TF_VERSION = 2.0.0-rc1' | |||

| # condition: '$_TF_VERSION = 1.11.0' | |||
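The `branches.only` list in this `.travis.yml` builds `master`, `TensorLayer-2.x`, and any name matching the version regex `/^\d+\.\d+(\.\d+)?(\S*)?$/`. The sketch below shows which names that pattern would accept. It is a rough illustration under two assumptions: it uses Python's `re` module rather than the regex engine Travis itself runs, and the helper name `is_built` and the sample names are hypothetical.

```python
import re

# Version pattern copied from the `branches.only` section above.
VERSION_PATTERN = re.compile(r"^\d+\.\d+(\.\d+)?(\S*)?$")

# Literal branch names listed next to the pattern.
LITERAL_BRANCHES = ("master", "TensorLayer-2.x")


def is_built(name):
    """Return True if a branch or tag name would be selected by the safelist."""
    return name in LITERAL_BRANCHES or VERSION_PATTERN.match(name) is not None


if __name__ == "__main__":
    # Hypothetical names, chosen to show accepted and rejected cases.
    for sample in ["master", "2.2.0", "1.11", "2.0.0-rc1", "v2.2.0", "feature/rnn"]:
        print(sample, is_built(sample))
```

Because the pattern must start with a digit, a tag such as `v2.2.0` would not match, while a bare `2.2.0` or a suffixed `2.0.0-rc1` would.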

+ 585

- 0

CHANGELOG.md


| @@ -0,0 +1,585 @@ | |||

| # Changelog | |||

| All notable changes to this project will be documented in this file. | |||

| The format is based on [Keep a Changelog](https://keepachangelog.com/) | |||

| and this project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0.html). | |||

| <!-- | |||

| ============== Guiding Principles ============== | |||

| * Changelogs are for humans, not machines. | |||

| * There should be an entry for every single version. | |||

| * The same types of changes should be grouped. | |||

| * Versions and sections should be linkable. | |||

| * The latest version comes first. | |||

| * The release date of each version is displayed. | |||

| * Mention whether you follow Semantic Versioning. | |||

| ============== Types of changes (keep the order) ============== | |||

| * `Added` for new features. | |||

| * `Changed` for changes in existing functionality. | |||

| * `Deprecated` for soon-to-be removed features. | |||

| * `Removed` for now removed features. | |||

| * `Fixed` for any bug fixes. | |||

| * `Security` in case of vulnerabilities. | |||

| * `Dependencies Update` for changes to dependency versions. | |||

| * `Contributors` to thank the contributors that worked on this PR. | |||

| ============== How To Update The Changelog for a New Release ============== | |||

| ** Always Keep The Unreleased On Top ** | |||

| To release a new version, please update the changelog as follows: | |||

| 1. Rename the `Unreleased` Section to the Section Number | |||

| 2. Recreate an `Unreleased` Section on top | |||

| 3. Update the links at the very bottom | |||

| ======================= START: TEMPLATE TO KEEP IN CASE OF NEED =================== | |||

| ** DO NOT MODIFY THIS SECTION ! ** | |||

| ## [Unreleased] | |||

| ### Added | |||

| ### Changed | |||

| ### Dependencies Update | |||

| ### Deprecated | |||

| ### Fixed | |||

| ### Removed | |||

| ### Security | |||

| ### Contributors | |||

| ** DO NOT MODIFY THIS SECTION ! ** | |||

| ======================= END: TEMPLATE TO KEEP IN CASE OF NEED =================== | |||

| --> | |||

| <!-- YOU CAN EDIT FROM HERE --> | |||

| ## [Unreleased] | |||

| ### Added | |||

| ### Changed | |||

| ### Dependencies Update | |||

| ### Deprecated | |||

| ### Fixed | |||

| - Fix README. (#PR 1044) | |||

| - Fix package info. (#PR 1046) | |||

| ### Removed | |||

| ### Security | |||

| ### Contributors | |||

| - @luomai (PR #1044, 1046) | |||

| ## [2.2.0] - 2019-09-13 | |||

| TensorLayer 2.2.0 is a maintenance release. | |||

| It contains numerous API improvements and bug fixes. | |||

| This release is compatible with TensorFlow 2 RC1. | |||

| ### Added | |||

| - Support nested layer customization (#PR 1015) | |||

| - Support string dtype in InputLayer (#PR 1017) | |||

| - Support Dynamic RNN in RNN (#PR 1023) | |||

| - Add ResNet50 static model (#PR 1030) | |||

| - Add performance test code in static model (#PR 1041) | |||

| ### Changed | |||

| - `SpatialTransform2dAffine` auto `in_channels` | |||

| - support TensorFlow 2.0.0-rc1 | |||

| - Update model weights property, now returns its copy (#PR 1010) | |||

| ### Fixed | |||

| - RNN updates: remove warnings, fix the seq_len=0 case, add unit tests (#PR 1033) | |||

| - BN updates: fix BatchNorm1d for 2D data, refactored (#PR 1040) | |||

| ### Dependencies Update | |||

| ### Deprecated | |||

| ### Fixed | |||

| - Fix `tf.models.Model._construct_graph` for list of outputs, e.g. STN case (PR #1010) | |||

| - Enable better `in_channels` exception raise. (PR #1015) | |||

| - Set allow_pickle=True in np.load() (#PR 1021) | |||

| - Remove `private_method` decorator (#PR 1025) | |||

| - Copy original model's `trainable_weights` and `nontrainable_weights` when initializing `ModelLayer` (#PR 1026) | |||

| - Copy original model's `trainable_weights` and `nontrainable_weights` when initializing `LayerList` (#PR 1029) | |||

| - Remove redundant parts in `model.all_layers` (#PR 1029) | |||

| - Replace `tf.image.resize_image_with_crop_or_pad` with `tf.image.resize_with_crop_or_pad` (#PR 1032) | |||

| - Fix a bug in `ResNet50` static model (#PR 1041) | |||

| ### Removed | |||

| ### Security | |||

| ### Contributors | |||

| - @zsdonghao | |||

| - @luomai | |||

| - @ChrisWu1997: #1010 #1015 #1025 #1030 #1040 | |||

| - @warshallrho: #1017 #1021 #1026 #1029 #1032 #1041 | |||

| - @ArnoldLIULJ: #1023 | |||

| - @JingqingZ: #1023 | |||

| ## [2.1.0] | |||

| ### Changed | |||

| - Add version_info in model.config. (PR #992) | |||

| - Replace tf.nn.func with tf.nn.func.\_\_name\_\_ in model config. (PR #994) | |||

| - Add Reinforcement learning tutorials. (PR #995) | |||

| - Add RNN layers with simple rnn cell, GRU cell, LSTM cell. (PR #998) | |||

| - Update Seq2seq (#998) | |||

| - Add Seq2seqLuongAttention model (#998) | |||

| ### Fixed | |||

| ### Contributors | |||

| - @warshallrho: #992 #994 | |||

| - @quantumiracle: #995 | |||

| - @Tokarev-TT-33: #995 | |||

| - @initial-h: #995 | |||

| - @Officium: #995 | |||

| - @ArnoldLIULJ: #998 | |||

| - @JingqingZ: #998 | |||

| ## [2.0.2] - 2019-6-5 | |||

| ### Changed | |||

| - change the format of network config, change related code and files; change layer act (PR #980) | |||

| ### Fixed | |||

| - Fix the problem that dynamic models cannot track PRelu weight gradients (PR #982) | |||

| - Raise .weights warning (commit) | |||

| ### Contributors | |||

| - @warshallrho: #980 | |||

| - @1FengL: #982 | |||

| ## [2.0.1] - 2019-5-17 | |||

| A maintenance release. | |||

| ### Changed | |||

| - remove `tl.layers.initialize_global_variables(sess)` (PR #931) | |||

| - support `trainable_weights` (PR #966) | |||

| ### Added | |||

| - Layer | |||

| - `InstanceNorm`, `InstanceNorm1d`, `InstanceNorm2d`, `InstanceNorm3d` (PR #963) | |||

| * Reinforcement learning tutorials. (PR #995) | |||

| ### Changed | |||

| - remove `tl.layers.initialize_global_variables(sess)` (PR #931) | |||

| - update `tutorial_generate_text.py`, `tutorial_ptb_lstm.py`. remove `tutorial_ptb_lstm_state_is_tuple.py` (PR #958) | |||

| - change `tl.layers.core`, `tl.models.core` (PR #966) | |||

| - change `weights` into `all_weights`, `trainable_weights`, `nontrainable_weights` | |||

| ### Dependencies Update | |||

| - nltk>=3.3,<3.4 => nltk>=3.3,<3.5 (PR #892) | |||

| - pytest>=3.6,<3.11 => pytest>=3.6,<4.1 (PR #889) | |||

| - yapf>=0.22,<0.25 => yapf==0.25.0 (PR #896) | |||

| - imageio==2.5.0 progressbar2==3.39.3 scikit-learn==0.21.0 scikit-image==0.15.0 scipy==1.2.1 wrapt==1.11.1 pymongo==3.8.0 sphinx==2.0.1 wrapt==1.11.1 opencv-python==4.1.0.25 requests==2.21.0 tqdm==4.31.1 lxml==4.3.3 pycodestyle==2.5.0 sphinx==2.0.1 yapf==0.27.0(PR #967) | |||

| ### Fixed | |||

| - fix docs of models @zsdonghao #957 | |||

| - In `BatchNorm`, keep dimensions of mean and variance to suit `channels first` (PR #963) | |||

| ### Contributors | |||

| - @warshallrho: #PR966 | |||

| - @zsdonghao: #931 | |||

| - @yd-yin: #963 | |||

| - @Tokarev-TT-33: # 995 | |||

| - @initial-h: # 995 | |||

| - @quantumiracle: #995 | |||

| - @Officium: #995 | |||

| - @1FengL: #958 | |||

| - @dvklopfenstein: #971 | |||

| ## [2.0.0] - 2019-05-04 | |||

| Too many PRs went into this update to list here; please check [here](https://github.com/tensorlayer/tensorlayer/releases/tag/2.0.0) for more details. | |||

| ### Changed | |||

| * update for TensorLayer 2.0.0 alpha version (PR #952) | |||

| * support TensorFlow 2.0.0-alpha | |||

| * support both static and dynamic model building | |||

| ### Dependencies Update | |||

| - tensorflow>=1.6,<1.13 => tensorflow>=2.0.0-alpha (PR #952) | |||

| - h5py>=2.9 (PR #952) | |||

| - cloudpickle>=0.8.1 (PR #952) | |||

| - remove matplotlib | |||

| ### Contributors | |||

| - @zsdonghao | |||

| - @JingqingZ | |||

| - @ChrisWu1997 | |||

| - @warshallrho | |||

| ## [1.11.1] - 2018-11-15 | |||

| ### Changed | |||

| * guide for pose estimation - flipping (PR #884) | |||

| * cv2 transform support 2 modes (PR #885) | |||

| ### Dependencies Update | |||

| - pytest>=3.6,<3.9 => pytest>=3.6,<3.10 (PR #874) | |||

| - requests>=2.19,<2.20 => requests>=2.19,<2.21 (PR #874) | |||

| - tqdm>=4.23,<4.28 => tqdm>=4.23,<4.29 (PR #878) | |||

| - pytest>=3.6,<3.10 => pytest>=3.6,<3.11 (PR #886) | |||

| - pytest-xdist>=1.22,<1.24 => pytest-xdist>=1.22,<1.25 (PR #883) | |||

| - tensorflow>=1.6,<1.12 => tensorflow>=1.6,<1.13 (PR #886) | |||

| ### Contributors | |||

| - @zsdonghao: #884 #885 | |||

| ## [1.11.0] - 2018-10-18 | |||

| ### Added | |||

| - Layer: | |||

| - Release `GroupNormLayer` (PR #850) | |||

| - Image affine transformation APIs | |||

| - `affine_rotation_matrix` (PR #857) | |||

| - `affine_horizontal_flip_matrix` (PR #857) | |||

| - `affine_vertical_flip_matrix` (PR #857) | |||

| - `affine_shift_matrix` (PR #857) | |||

| - `affine_shear_matrix` (PR #857) | |||

| - `affine_zoom_matrix` (PR #857) | |||

| - `affine_transform_cv2` (PR #857) | |||

| - `affine_transform_keypoints` (PR #857) | |||

| - Affine transformation tutorial | |||

| - `examples/data_process/tutorial_fast_affine_transform.py` (PR #857) | |||

| ### Changed | |||

| - BatchNormLayer: support `data_format` | |||

| ### Dependencies Update | |||

| - matplotlib>=2.2,<2.3 => matplotlib>=2.2,<3.1 (PR #845) | |||

| - pydocstyle>=2.1,<2.2 => pydocstyle>=2.1,<3.1 (PR #866) | |||

| - scikit-learn>=0.19,<0.20 => scikit-learn>=0.19,<0.21 (PR #851) | |||

| - sphinx>=1.7,<1.8 => sphinx>=1.7,<1.9 (PR #842) | |||

| - tensorflow>=1.6,<1.11 => tensorflow>=1.6,<1.12 (PR #853) | |||

| - tqdm>=4.23,<4.26 => tqdm>=4.23,<4.28 (PR #862 & #868) | |||

| - yapf>=0.22,<0.24 => yapf>=0.22,<0.25 (PR #829) | |||

| ### Fixed | |||

| - Correct offset calculation in `tl.prepro.transform_matrix_offset_center` (PR #855) | |||

| ### Contributors | |||

| - @2wins: #850 #855 | |||

| - @DEKHTIARJonathan: #853 | |||

| - @zsdonghao: #857 | |||

| - @luomai: #857 | |||

| ## [1.10.1] - 2018-09-07 | |||

| ### Added | |||

| - unittest `tests\test_timeout.py` has been added to ensure the network creation process does not freeze. | |||

| ### Changed | |||

| - remove 'tensorboard' param, replaced by 'tensorboard_dir' in `tensorlayer/utils.py` with customizable tensorboard directory (PR #819) | |||

| ### Removed | |||

| - TL Graph API removed because of memory leak issues; it will be fixed and reintegrated in TL 2.0 (PR #818) | |||

| ### Fixed | |||

| - Issue #817 fixed: TL 1.10.0 - Memory Leaks and very slow network creation. | |||

| ### Dependencies Update | |||

| - autopep8>=1.3,<1.4 => autopep8>=1.3,<1.5 (PR #815) | |||

| - imageio>=2.3,<2.4 => imageio>=2.3,<2.5 (PR #823) | |||

| - pytest>=3.6,<3.8 => pytest>=3.6,<3.9 (PR #823) | |||

| - pytest-cov>=2.5,<2.6 => pytest-cov>=2.5,<2.7 (PR #820) | |||

| ### Contributors | |||

| - @DEKHTIARJonathan: #815 #818 #820 #823 | |||

| - @ndiy: #819 | |||

| - @zsdonghao: #818 | |||

| ## [1.10.0] - 2018-09-02 | |||

| ### Added | |||

| - API: | |||

| - Add `tl.model.vgg19` (PR #698) | |||

| - Add `tl.logging.contrib.hyperdash` (PR #739) | |||

| - Add `tl.distributed.trainer` (PR #700) | |||

| - Add `prefetch_buffer_size` to the `tl.distributed.trainer` (PR #766) | |||

| - Add `tl.db.TensorHub` (PR #751) | |||

| - Add `tl.files.save_graph` (PR #751) | |||

| - Add `tl.files.load_graph_` (PR #751) | |||

| - Add `tl.files.save_graph_and_params` (PR #751) | |||

| - Add `tl.files.load_graph_and_params` (PR #751) | |||

| - Add `tl.prepro.keypoint_random_xxx` (PR #787) | |||

| - Documentation: | |||

| - Add binary, ternary and dorefa links (PR #711) | |||

| - Update input scale of VGG16 and VGG19 to 0~1 (PR #736) | |||

| - Update database (PR #751) | |||

| - Layer: | |||

| - Release SwitchNormLayer (PR #737) | |||

| - Release QuanConv2d, QuanConv2dWithBN, QuanDenseLayer, QuanDenseLayerWithBN (PR#735) | |||

| - Update Core Layer to support graph (PR #751) | |||

| - All Pooling layers support `data_format` (PR #809) | |||

| - Setup: | |||

| - Creation of installation flags `all_dev`, `all_cpu_dev`, and `all_gpu_dev` (PR #739) | |||

| - Examples: | |||

| - change folder structure (PR #802) | |||

| - `tutorial_models_vgg19` has been introduced to show how to use `tl.model.vgg19` (PR #698). | |||

| - fix bug of `tutorial_bipedalwalker_a3c_continuous_action.py` (PR #734, Issue #732) | |||

| - the input scale of `tutorial_models_vgg16` and `tutorial_models_vgg19` has been changed from [0,255] to [0,1] (PR #710) | |||

| - `tutorial_mnist_distributed_trainer.py` and `tutorial_cifar10_distributed_trainer.py` are added to explain the uses of Distributed Trainer (PR #700) | |||

| - add `tutorial_quanconv_cifar10.py` and `tutorial_quanconv_mnist.py` (PR #735) | |||

| - add `tutorial_work_with_onnx.py`(PR #775) | |||

| - Applications: | |||

| - [Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization](https://arxiv.org/abs/1703.06868) (PR #799) | |||

| ### Changed | |||

| - function minibatches changed to avoid wasting samples.(PR #762) | |||

| - the input scale of both vgg16 and vgg19 has been changed from [0,255] to [0,1] (PR #710) | |||

| - Dockerfiles merged and refactored into one file (PR #747) | |||

| - LazyImports moved as close to the **top level** imports as possible (PR #739) | |||

| - some new test functions have been added in `test_layers_convolution.py`, `test_layers_normalization.py`, `test_layers_core.py` (PR #735) | |||

| - documentation now uses mock imports reducing the number of dependencies to compile the documentation (PR #785) | |||

| - fixed and enforced pydocstyle D210, D200, D301, D207, D403, D204, D412, D402, D300, D208 (PR #784) | |||

| ### Deprecated | |||

| - `tl.logging.warn` has been deprecated in favor of `tl.logging.warning` (PR #739) | |||

| ### Removed | |||

| - `conv_layers()` has been removed in both vgg16 and vgg19(PR #710) | |||

| - graph API (PR #818) | |||

| ### Fixed | |||

| - import error caused by matplotlib on OSX (PR #705) | |||

| - missing import in tl.prepro (PR #712) | |||

| - Dockerfiles import error fixed - issue #733 (PR #747) | |||

| - Fix a typo in `absolute_difference_error` in file: `tensorlayer/cost.py` - Issue #753 (PR #759) | |||

| - Fix the bug of scaling the learning rate of trainer (PR #776) | |||

| - log error instead of info when npz file not found. (PR #812) | |||

| ### Dependencies Update | |||

| - numpy>=1.14,<1.15 => numpy>=1.14,<1.16 (PR #754) | |||

| - pymongo>=3.6,<3.7 => pymongo>=3.6,<3.8 (PR #750) | |||

| - pytest>=3.6,<3.7 => pytest>=3.6,<3.8 (PR #798) | |||

| - pytest-xdist>=1.22,<1.23 => pytest-xdist>=1.22,<1.24 (PR #805 and #806) | |||

| - tensorflow>=1.8,<1.9 => tensorflow>=1.6,<1.11 (PR #739 and PR #798) | |||

| - tqdm>=4.23,<4.25 => tqdm>=4.23,<4.26 (PR #798) | |||

| - yapf>=0.21,<0.22 => yapf>=0.22,<0.24 (PR #798 #808) | |||

| ### Contributors | |||

| - @DEKHTIARJonathan: #739 #747 #750 #754 | |||

| - @lgarithm: #705 #700 | |||

| - @OwenLiuzZ: #698 #710 #775 #776 | |||

| - @zsdonghao: #711 #712 #734 #736 #737 #700 #751 #809 #818 | |||

| - @luomai: #700 #751 #766 #802 | |||

| - @XJTUWYD: #735 | |||

| - @mutewall: #735 | |||

| - @thangvubk: #759 | |||

| - @JunbinWang: #796 | |||

| - @boldjoel: #787 | |||

| ## [1.9.1] - 2018-07-30 | |||

| ### Fixed | |||

| - Issue with tensorflow 1.10.0 fixed | |||

| ## [1.9.0] - 2018-06-16 | |||

| ### Added | |||

| - API: | |||

| - `tl.alphas` and `tl.alphas_like` added following the tf.ones/zeros and tf.zeros_like/ones_like (PR #580) | |||

| - `tl.lazy_imports.LazyImport` to import heavy libraries only when necessary (PR #667) | |||

| - `tl.act.leaky_relu6` and `tl.layers.PRelu6Layer` have been added (PR #686) | |||

| - `tl.act.leaky_twice_relu6` and `tl.layers.PTRelu6Layer` have been added (PR #686) | |||

| - CI Tool: | |||

| - [Stale Probot](https://github.com/probot/stale) added to clean stale issues (PR #573) | |||

| - [Changelog Probot](https://github.com/mikz/probot-changelog) Configuration added (PR #637) | |||

| - Travis Builds now handling a matrix of TF Version from TF==1.6.0 to TF==1.8.0 (PR #644) | |||

| - CircleCI added to build and upload Docker Containers for each PR merged and tag release (PR #648) | |||

| - Decorator: | |||

| - `tl.decorators` API created including `deprecated_alias` and `private_method` (PR #660) | |||

| - `tl.decorators` API enriched with `protected_method` (PR #675) | |||

| - `tl.decorators` API enriched with `deprecated` directly raising warning and modifying documentation (PR #691) | |||

| - Docker: | |||

| - Containers for each release and for each PR merged on master built (PR #648) | |||

| - Containers built in the following configurations (PR #648): | |||

| - py2 + cpu | |||

| - py2 + gpu | |||

| - py3 + cpu | |||

| - py3 + gpu | |||

| - Documentation: | |||

| - Clean README.md (PR #677) | |||

| - Release semantic version added on index page (PR #633) | |||

| - Optimizers page added (PR #636) | |||

| - `AMSGrad` added on Optimizers page (PR #636) | |||

| - Layer: | |||

| - ElementwiseLambdaLayer added to use custom function to connect multiple layer inputs (PR #579) | |||

| - AtrousDeConv2dLayer added (PR #662) | |||

| - Fix bugs of using `tf.layers` in CNN (PR #686) | |||

| - Optimizer: | |||

| - AMSGrad Optimizer added based on `On the Convergence of Adam and Beyond (ICLR 2018)` (PR #636) | |||

| - Setup: | |||

| - Creation of installation flags `all`, `all_cpu`, and `all_gpu` (PR #660) | |||

| - Test: | |||

| - `test_utils_predict.py` added to reproduce and fix issue #288 (PR #566) | |||

| - `Layer_DeformableConvolution_Test` added to reproduce issue #572 with deformable convolution (PR #573) | |||

| - `Array_Op_Alphas_Test` and `Array_Op_Alphas_Like_Test` added to test `tensorlayer/array_ops.py` file (PR #580) | |||

| - `test_optimizer_amsgrad.py` added to test `AMSGrad` optimizer (PR #636) | |||

| - `test_logging.py` added to ensure robustness of the logging API (PR #645) | |||

| - `test_decorators.py` added (PR #660) | |||

| - `test_activations.py` added (PR #686) | |||

| - Tutorials: | |||

| - `tutorial_tfslim` has been introduced to show how to use `SlimNetsLayer` (PR #560). | |||

| - add the following to all tutorials (PR #697): | |||

| ```python | |||

| tf.logging.set_verbosity(tf.logging.DEBUG) | |||

| tl.logging.set_verbosity(tl.logging.DEBUG) | |||

| ``` | |||

| ### Changed | |||

| - Tensorflow CPU & GPU dependencies moved to separated requirement files in order to allow PyUP.io to parse them (PR #573) | |||

| - The document of LambdaLayer for linking it with ElementwiseLambdaLayer (PR #587) | |||

| - RTD links point to stable documentation instead of latest used for development (PR #633) | |||

| - TF versions older than 1.6.0 are officially unsupported and raise an exception (PR #644) | |||

| - README.md Badges Updated with Support Python and Tensorflow Versions (PR #644) | |||

| - TL logging API has been made consistent with the TF logging API and thread-safe (PR #645) | |||

| - Relative Imports changed for absolute imports (PR #657) | |||

| - `tl.files` refactored into a directory with numerous files (PR #657) | |||

| - `tl.files.voc_dataset` fixed because the original Pascal VOC website was down (PR #657) | |||

| - extra requirements hidden inside the library added in the project requirements (PR #657) | |||

| - requirements files refactored in `requirements/` directory (PR #657) | |||

| - README.md and other markdown files have been refactored and cleaned. (PR #639) | |||

| - Ternary Convolution Layer added in unittest (PR #658) | |||

| - Convolution Layers unittests have been cleaned & refactored (PR #658) | |||

| - All the tests are now using a DEBUG level verbosity when run individually (PR #660) | |||

| - `tf.identity` as activation is **ignored**, thus reducing the size of the graph by removing useless operation (PR #667) | |||

| - argument dictionaries are now checked and saved within the `Layer` Base Class (PR #667) | |||

| - `Layer` Base Class now presenting methods to update faultlessly `all_layers`, `all_params`, and `all_drop` (PR #675) | |||

| - Input Layers have been removed from `tl.layers.core` and added to `tl.layers.inputs` (PR #675) | |||

| - Input Layers are now considered as true layers in the graph (they represent a placeholder), unittests have been updated (PR #675) | |||

| - Layer API is simplified, with automatic feeding `prev_layer` into `self.inputs` (PR #675) | |||

| - Complete Documentation Refactoring and Reorganization (namely Layer APIs) (PR #691) | |||

| ### Deprecated | |||

| - `tl.layers.TimeDistributedLayer` argument `args` is deprecated in favor of `layer_args` (PR #667) | |||

| - `tl.act.leaky_relu` has been deprecated in favor of `tf.nn.leaky_relu` (PR #686) | |||

| ### Removed | |||

| - `assert()` calls removed and replaced by `raise AssertionError()` (PR #667) | |||

| - `tl.identity` is removed, not used anymore and deprecated for a long time (PR #667) | |||

| - All Code specific to `TF.__version__ < "1.6"` have been removed (PR #675) | |||
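The changelog does not state why `assert()` calls were replaced. One general Python fact that may be relevant is that `assert` statements are stripped when the interpreter runs with `-O`, whereas an explicit `raise` always executes. A minimal sketch of the replacement pattern, using a hypothetical `check_positive` helper that is not taken from the library:

```python
def check_positive(n_units):
    """Validate an argument with an explicit raise instead of ``assert``."""
    # Equivalent to `assert n_units > 0, "..."`, but still runs under `python -O`.
    if n_units <= 0:
        raise AssertionError("n_units must be positive, got {}".format(n_units))
    return n_units
```

Code that already catches `AssertionError` sees the same exception type either way, so callers should not need to change.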

| ### Fixed | |||

| - Issue #498 - Deprecation Warning Fix in `tl.layers.RNNLayer` with `inspect` (PR #574) | |||

| - Issue #498 - Deprecation Warning Fix in `tl.files` with truth value of an empty array is ambiguous (PR #575) | |||

| - Issue #565 related to `tl.utils.predict` fixed - `np.hstack` problem in which the results for multiple batches are stacked along `axis=1` (PR #566) | |||

| - Issue #572 with `tl.layers.DeformableConv2d` fixed (PR #573) | |||

| - Issue #664 with `tl.layers.ConvLSTMLayer` fixed (PR #676) | |||

| - Typo of the document of ElementwiseLambdaLayer (PR #588) | |||

| - Error in `tl.layers.TernaryConv2d` fixed - self.inputs not defined (PR #658) | |||

| - Deprecation warning fixed in `tl.layers.binary._compute_threshold()` (PR #658) | |||

| - All references to `tf.logging` replaced by `tl.logging` (PR #661) | |||

| - Duplicated code removed when bias was used (PR #667) | |||

| - `tensorlayer.third_party.roi_pooling.roi_pooling.roi_pooling_ops` is now lazy loaded to prevent systematic error raised (PR #675) | |||

| - Documentation not building in RTD due to old version of theme in docs directory fixed (PR #703) | |||

| - Tutorial: | |||

| - `tutorial_word2vec_basic.py` saving issue #476 fixed (PR #635) | |||

| - All tutorials tested and errors have been fixed (PR #635) | |||

| ### Dependencies Update | |||

| - Update pytest from 3.5.1 to 3.6.0 (PR #647) | |||

| - Update progressbar2 from 3.37.1 to 3.38.0 (PR #651) | |||

| - Update scikit-image from 0.13.1 to 0.14.0 (PR #656) | |||

| - Update keras from 2.1.6 to 2.2.0 (PR #684) | |||

| - Update requests from 2.18.4 to 2.19.0 (PR #695) | |||

| ### Contributors | |||

| - @lgarithm: #563 | |||

| - @DEKHTIARJonathan: #573 #574 #575 #580 #633 #635 #636 #639 #644 #645 #648 #657 #667 #658 #659 #660 #661 #666 #667 #672 #675 #683 #686 #687 #690 #691 #692 #703 | |||

| - @2wins: #560 #566 #662 | |||

| - @One-sixth: #579 | |||

| - @zsdonghao: #587 #588 #639 #685 #697 | |||

| - @luomai: #639 #677 | |||

| - @dengyueyun666: #676 | |||

| ## [1.8.5] - 2018-05-09 | |||

| ### Added | |||

| - Github Templates added (by @DEKHTIARJonathan) | |||

| - New issues Template | |||

| - New PR Template | |||

| - Travis Deploy Automation on new Tag (by @DEKHTIARJonathan) | |||

| - Deploy to PyPI and create a new version. | |||

| - Deploy to Github Releases and upload the wheel files | |||

| - PyUP.io has been added to ensure we are compatible with the latest libraries (by @DEKHTIARJonathan) | |||

| - `deconv2d` now handling dilation_rate (by @zsdonghao) | |||

| - Documentation unittest added (by @DEKHTIARJonathan) | |||

| - `test_layers_core` has been added to ensure that `LayersConfig` is abstract. | |||

| ### Changed | |||

| - All Tests Refactored - Now using unittests and run with PyTest (by @DEKHTIARJonathan) | |||

| - Documentation updated (by @zsdonghao) | |||

| - Package Setup Refactored (by @DEKHTIARJonathan) | |||

| - Dataset Download now using library progressbar2 (by @DEKHTIARJonathan) | |||

| - `deconv2d` function transformed into Class (by @zsdonghao) | |||

| - `conv1d` function transformed into Class (by @zsdonghao) | |||

| - super resolution functions transformed into Class (by @zsdonghao) | |||

| - YAPF coding style improved and enforced (by @DEKHTIARJonathan) | |||

| ### Fixed | |||

| - Backward Compatibility Restored with deprecation warnings (by @DEKHTIARJonathan) | |||

| - Tensorflow Deprecation Fix (Issue #498): | |||

| - AverageEmbeddingInputlayer (by @zsdonghao) | |||

| - load_mpii_pose_dataset (by @zsdonghao) | |||

| - maxPool2D initializer issue #551 (by @zsdonghao) | |||

| - `LayersConfig` class has been enforced as abstract | |||

| - Pooling Layer Issue #557 fixed (by @zsdonghao) | |||

| ### Dependencies Update | |||

| - scipy>=1.0,<1.1 => scipy>=1.1,<1.2 | |||

| ### Contributors | |||

| @zsdonghao @luomai @DEKHTIARJonathan | |||

| [Unreleased]: https://github.com/tensorlayer/tensorlayer/compare/2.0....master | |||

| [2.2.0]: https://github.com/tensorlayer/tensorlayer/compare/2.2.0...2.2.0 | |||

| [2.1.0]: https://github.com/tensorlayer/tensorlayer/compare/2.1.0...2.1.0 | |||

| [2.0.2]: https://github.com/tensorlayer/tensorlayer/compare/2.0.2...2.0.2 | |||

| [2.0.1]: https://github.com/tensorlayer/tensorlayer/compare/2.0.1...2.0.1 | |||

| [2.0.0]: https://github.com/tensorlayer/tensorlayer/compare/2.0.0...2.0.0 | |||

| [1.11.1]: https://github.com/tensorlayer/tensorlayer/compare/1.11.0...1.11.0 | |||

| [1.11.0]: https://github.com/tensorlayer/tensorlayer/compare/1.10.1...1.11.0 | |||

| [1.10.1]: https://github.com/tensorlayer/tensorlayer/compare/1.10.0...1.10.1 | |||

| [1.10.0]: https://github.com/tensorlayer/tensorlayer/compare/1.9.1...1.10.0 | |||

| [1.9.1]: https://github.com/tensorlayer/tensorlayer/compare/1.9.0...1.9.1 | |||

| [1.9.0]: https://github.com/tensorlayer/tensorlayer/compare/1.8.5...1.9.0 | |||

| [1.8.5]: https://github.com/tensorlayer/tensorlayer/compare/1.8.4...1.8.5 | |||

+ 199

- 0

CONTRIBUTING.md

| @@ -0,0 +1,199 @@ | |||

| # TensorLayer Contributor Guideline | |||

| ## Welcome to contribute! | |||

| You are more than welcome to contribute to TensorLayer! If you have an improvement, please send us your [pull requests](https://help.github.com/en/articles/about-pull-requests). You may implement your improvement on your [fork](https://help.github.com/en/articles/working-with-forks). | |||

| ## Checklist | |||

| * Continuous integration | |||

| * Build from sources | |||

| * Unittest | |||

| * Documentation | |||

| * General intro to TensorLayer2 | |||

| * How to contribute a new `Layer` | |||

| * How to contribute a new `Model` | |||

| * How to contribute a new example/tutorial | |||

| ## Continuous integration | |||

| We appreciate contributions | |||

| either by adding / improving examples or extending / fixing the core library. | |||

| To make your contributions, you would need to follow the [pep8](https://www.python.org/dev/peps/pep-0008/) coding style and [numpydoc](https://numpydoc.readthedocs.io/en/latest/) document style. | |||

| We rely on Continuous Integration (CI) for checking push commits. | |||

| The following tools are used to ensure that your commits can pass through the CI test: | |||

| * [yapf](https://github.com/google/yapf) (format code), compulsory | |||

| * [isort](https://github.com/timothycrosley/isort) (sort imports), optional | |||

| * [autoflake](https://github.com/myint/autoflake) (remove unused imports), optional | |||

| You can simply run | |||

| ```bash | |||

| make format | |||

| ``` | |||

| to apply those tools before submitting your PR. | |||

| ## Build from sources | |||

| ```bash | |||

| # First clone the repository and change the current directory to the newly cloned repository | |||

| git clone https://github.com/zsdonghao/tensorlayer2.git | |||

| cd tensorlayer2 | |||

| # Install virtualenv if necessary | |||

| pip install virtualenv | |||

| # Then create a virtualenv called `venv` | |||

| virtualenv venv | |||

| # Activate the virtualenv | |||

| ## Linux: | |||

| source venv/bin/activate | |||

| ## Windows: | |||

| venv\Scripts\activate.bat | |||

| # ============= IF TENSORFLOW IS NOT ALREADY INSTALLED ============= # | |||

| # basic installation | |||

| pip install . | |||

| # advanced: for a machine **without** an NVIDIA GPU | |||

| pip install -e ".[all_cpu_dev]" | |||

| # advanced: for a machine **with** an NVIDIA GPU | |||

| pip install -e ".[all_gpu_dev]" | |||

| ``` | |||

| ## Unittest | |||

| Launching the unittest for the whole repo: | |||

| ```bash | |||

| # install pytest | |||

| pip install pytest | |||

| # run pytest | |||

| pytest | |||

| ``` | |||

| Running your unittest code on your implemented module only: | |||

| ```bash | |||

| # install coverage | |||

| pip install coverage | |||

| cd /path/to/your/unittest/code | |||

| # For example: cd tests/layers/ | |||

| # run unittest | |||

| coverage run --source myproject.module -m unittest discover | |||

| # For example: coverage run --source tensorlayer.layers -m unittest discover | |||

| # generate html report | |||

| coverage html | |||

| ``` | |||
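For reference, a test module that both `pytest` and `unittest discover` can pick up might look like the sketch below. The function `scale` and the class `ScaleTest` are hypothetical placeholders for your own module and tests. The file is assumed to be named something like `test_scale.py`, since `unittest discover` matches `test*.py` by default.

```python
import unittest


def scale(values, factor):
    # Hypothetical function under test; replace with an import of your module.
    return [v * factor for v in values]


class ScaleTest(unittest.TestCase):

    def test_scales_each_value(self):
        self.assertEqual(scale([1, 2, 3], 2), [2, 4, 6])

    def test_empty_input(self):
        self.assertEqual(scale([], 5), [])
```

Keeping each test method focused on one behaviour makes a failing test name point directly at what broke.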

| ## Documentation | |||

| Even though you follow [numpydoc](https://numpydoc.readthedocs.io/en/latest/) document style when writing your code, | |||

| this does not ensure those lines appear on TensorLayer online documentation. | |||

| You also need to modify the corresponding RST files in `docs/modules`. | |||

| For example, to add a newly implemented pooling layer to the documentation, modify `docs/modules/layers.rst`. First, insert the layer name under Layer list: | |||

| ```rst | |||

| Layer list | |||

| ---------- | |||

| .. autosummary:: | |||

| NewPoolingLayer | |||

| ``` | |||

| Second, find pooling layer part and add: | |||

| ```rst | |||

| .. ----------------------------------------------------------- | |||

| .. Pooling Layers | |||

| .. ----------------------------------------------------------- | |||

| Pooling Layers | |||

| ------------------------ | |||

| New Pooling Layer | |||

| ^^^^^^^^^^^^^^^^^^^^^^^^^^ | |||

| .. autoclass:: NewPoolingLayer | |||

| ``` | |||

| Finally, test with local documentation: | |||

| ```bash | |||

| cd ./docs | |||

| make clean | |||

| make html | |||

| # then view generated local documentation by ./html/index.html | |||

| ``` | |||
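As a reminder of what the numpydoc style mentioned in this section looks like in source code, here is a small sketch. The function `clip_values` is a hypothetical example, not part of TensorLayer. It only shows the section layout (`Parameters`, `Returns`, `Examples`) that the numpydoc extension renders.

```python
def clip_values(values, low=0.0, high=1.0):
    """Clip every element of a sequence to the range [low, high].

    Parameters
    ----------
    values : list of float
        The numbers to clip.
    low : float
        Lower bound, inclusive.
    high : float
        Upper bound, inclusive.

    Returns
    -------
    list of float
        A new list with each element limited to ``[low, high]``.

    Examples
    --------
    >>> clip_values([-1.0, 0.5, 2.0])
    [0.0, 0.5, 1.0]

    """
    return [min(max(v, low), high) for v in values]
```

Each section header is underlined with dashes of the same length, and the parameter entries use the `name : type` form.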

| ## General intro to TensorLayer2 | |||

| * TensorLayer2 is built on [TensorFlow2](https://www.tensorflow.org/alpha), so TensorLayer2 is purely eager, no sessions, no globals. | |||

| * TensorLayer2 supports APIs to build static models and dynamic models. Therefore, all `Layers` should be compatible with the two modes. | |||

| ```python | |||

| import tensorflow as tf | |||

| import tensorlayer as tl | |||

| # An example of a static model | |||

| # A static model has inputs and outputs with fixed shape. | |||

| inputs = tl.layers.Input([32, 784]) | |||

| dense1 = tl.layers.Dense(n_units=800, act=tf.nn.relu, in_channels=784, name='dense1')(inputs) | |||

| dense2 = tl.layers.Dense(n_units=10, act=tf.nn.relu, in_channels=800, name='dense2')(dense1) | |||

| model = tl.models.Model(inputs=inputs, outputs=dense2) | |||

| # An example of a dynamic model | |||

| # A dynamic model has more flexibility. The inputs and outputs may be different in different runs. | |||

| class CustomizeModel(tl.models.Model): | |||

|     def __init__(self): | |||

|         super(CustomizeModel, self).__init__() | |||

|         self.dense1 = tl.layers.Dense(n_units=800, act=tf.nn.relu, in_channels=784, name='dense1') | |||

|         self.dense2 = tl.layers.Dense(n_units=10, act=tf.nn.relu, in_channels=800, name='dense2') | |||

|     # a dynamic model allows more flexibility by customising forwarding. | |||

|     def forward(self, x, bar=None): | |||

|         d1 = self.dense1(x) | |||

|         if bar: | |||

|             return d1 | |||

|         else: | |||

|             d2 = self.dense2(d1) | |||

|             return d1, d2 | |||

| model = CustomizeModel() | |||

| ``` | |||

| * More examples can be found in [examples](examples/) and [tests/layers](tests/layers/). Note that not all of them are completed. | |||

| ## How to contribute a new `Layer` | |||

| * A `NewLayer` should be derived from the base class [`Layer`](tensorlayer/layers/core.py). | |||

| * Member methods to be overridden: | |||

| - `__init__(self, args1, args2, inputs_shape=None, name=None)`: The constructor of the `NewLayer`, which should | |||

| - Call `super(NewLayer, self).__init__(name)` to construct the base. | |||

| - Define member variables based on the args1, args2 (or even more). | |||

| - If the `inputs_shape` is provided, call `self.build(inputs_shape)` and set `self._built=True`. Note that sometimes only `in_channels` should be enough to build the layer like [`Dense`](tensorlayer/layers/dense/base_dense.py). | |||

| - Logging by `logging.info(...)`. | |||

| - `__repr__(self)`: Return a printable representation of the `NewLayer`. | |||

| - `build(self, inputs_shape)`: Build the `NewLayer` by defining weights. | |||

| - `forward(self, inputs, **kwargs)`: Forward feeding the `NewLayer`. Note that the forward feeding of some `Layers` may be different during training and testing like [`Dropout`](tensorlayer/layers/dropout.py). | |||

| * Unittest: | |||

| - Unittest should be done before a pull request. Unittest code can be written in [tests/](tests/) | |||

| * Documents: | |||

| - Please write a description for each class and method in RST format. The description may include the functionality, arguments, references, examples of the `NewLayer`. | |||

| * Examples: [`Dense`](tensorlayer/layers/dense/base_dense.py), [`Dropout`](tensorlayer/layers/dropout.py), [`Conv`](tensorlayer/layers/convolution/simplified_conv.py). | |||
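The constructor, `build`, and `forward` contract described above can be sketched without TensorFlow as follows. This is an illustration only. The `Layer` class here is a simplified stand-in written for this example, not the real base class in `tensorlayer/layers/core.py`. `ScaleLayer` is a hypothetical layer, and the lazy build inside `__call__` is an assumption about how an unbuilt layer might be handled.

```python
class Layer(object):
    """Stand-in base class; it holds only what this sketch needs."""

    def __init__(self, name=None):
        self.name = name
        self._built = False

    def __call__(self, inputs, **kwargs):
        # Assumption: build lazily from the first input if no shape was given.
        if not self._built:
            self.build([len(inputs)])
            self._built = True
        return self.forward(inputs, **kwargs)


class ScaleLayer(Layer):
    """Hypothetical layer that multiplies each input feature by a weight."""

    def __init__(self, init_value=1.0, inputs_shape=None, name=None):
        super(ScaleLayer, self).__init__(name)
        self.init_value = init_value
        self.weights = None
        # Build immediately when the input shape is already known.
        if inputs_shape is not None:
            self.build(inputs_shape)
            self._built = True

    def __repr__(self):
        return "ScaleLayer(init_value={}, name={!r})".format(self.init_value, self.name)

    def build(self, inputs_shape):
        # One weight per feature in the last dimension.
        self.weights = [self.init_value] * inputs_shape[-1]

    def forward(self, inputs, **kwargs):
        return [x * w for x, w in zip(inputs, self.weights)]
```

A real layer would create TensorFlow variables in `build` and operate on tensors in `forward`. The plain lists here only keep the example runnable on its own.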

| ## How to contribute a new `Model` | |||

| * A `NewModel` should be derived from the base class [`Model`](tensorlayer/models/core.py) (if dynamic) or an instance of [`Model`](tensorlayer/models/core.py) (if static). | |||

| * A static `NewModel` should have fixed inputs and outputs. Please check the example [`VGG_Static`](tensorlayer/models/vgg.py) | |||

| * A dynamic `NewModel` has more flexibility. Please check the example [`VGG16`](tensorlayer/models/vgg16.py) | |||

| ## How to contribute a new example/tutorial | |||

| * A new example/tutorial should implement a complete deep learning workflow, which includes (but is not limited to): | |||

| - `Models` construction based on `Layers`. | |||

| - Data processing and loading. | |||

| - Training and testing. | |||

| - Forward feeding by calling the models. | |||

| - Loss function. | |||

| - Back propagation by `tf.GradientTape()`. | |||

| - Model saving and restoring. | |||

| * Examples: [MNIST](examples/basic_tutorials/tutorial_mnist_mlp_static.py), [CIFAR10](examples/basic_tutorials/tutorial_cifar10_cnn_static.py), [FastText](examples/text_classification/tutorial_imdb_fasttext.py) | |||

+ 0

- 208

LICENSE

| @@ -1,208 +0,0 @@ | |||

| Apache License | |||

| Version 2.0, January 2004 | |||

| http://www.apache.org/licenses/ TERMS AND CONDITIONS FOR USE, REPRODUCTION, | |||

| AND DISTRIBUTION | |||

| 1. Definitions. | |||

| "License" shall mean the terms and conditions for use, reproduction, and distribution | |||

| as defined by Sections 1 through 9 of this document. | |||

| "Licensor" shall mean the copyright owner or entity authorized by the copyright | |||

| owner that is granting the License. | |||

| "Legal Entity" shall mean the union of the acting entity and all other entities | |||

| that control, are controlled by, or are under common control with that entity. | |||

| For the purposes of this definition, "control" means (i) the power, direct | |||

| or indirect, to cause the direction or management of such entity, whether | |||

| by contract or otherwise, or (ii) ownership of fifty percent (50%) or more | |||

| of the outstanding shares, or (iii) beneficial ownership of such entity. | |||

| "You" (or "Your") shall mean an individual or Legal Entity exercising permissions | |||

| granted by this License. | |||

| "Source" form shall mean the preferred form for making modifications, including | |||

| but not limited to software source code, documentation source, and configuration | |||

| files. | |||

| "Object" form shall mean any form resulting from mechanical transformation | |||

| or translation of a Source form, including but not limited to compiled object | |||

| code, generated documentation, and conversions to other media types. | |||

| "Work" shall mean the work of authorship, whether in Source or Object form, | |||

| made available under the License, as indicated by a copyright notice that | |||

| is included in or attached to the work (an example is provided in the Appendix | |||

| below). | |||

| "Derivative Works" shall mean any work, whether in Source or Object form, | |||

| that is based on (or derived from) the Work and for which the editorial revisions, | |||

| annotations, elaborations, or other modifications represent, as a whole, an | |||

| original work of authorship. For the purposes of this License, Derivative | |||

| Works shall not include works that remain separable from, or merely link (or | |||

| bind by name) to the interfaces of, the Work and Derivative Works thereof. | |||

| "Contribution" shall mean any work of authorship, including the original version | |||

| of the Work and any modifications or additions to that Work or Derivative | |||

| Works thereof, that is intentionally submitted to Licensor for inclusion in | |||

| the Work by the copyright owner or by an individual or Legal Entity authorized | |||

| to submit on behalf of the copyright owner. For the purposes of this definition, | |||

| "submitted" means any form of electronic, verbal, or written communication | |||

| sent to the Licensor or its representatives, including but not limited to | |||

| communication on electronic mailing lists, source code control systems, and | |||

| issue tracking systems that are managed by, or on behalf of, the Licensor | |||

| for the purpose of discussing and improving the Work, but excluding communication | |||

| that is conspicuously marked or otherwise designated in writing by the copyright | |||

| owner as "Not a Contribution." | |||

| "Contributor" shall mean Licensor and any individual or Legal Entity on behalf | |||

| of whom a Contribution has been received by Licensor and subsequently incorporated | |||

| within the Work. | |||

| 2. Grant of Copyright License. Subject to the terms and conditions of this | |||

| License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, | |||

| no-charge, royalty-free, irrevocable copyright license to reproduce, prepare | |||

| Derivative Works of, publicly display, publicly perform, sublicense, and distribute | |||

| the Work and such Derivative Works in Source or Object form. | |||

| 3. Grant of Patent License. Subject to the terms and conditions of this License, | |||

| each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, | |||

| no-charge, royalty-free, irrevocable (except as stated in this section) patent | |||

| license to make, have made, use, offer to sell, sell, import, and otherwise | |||

| transfer the Work, where such license applies only to those patent claims | |||

| licensable by such Contributor that are necessarily infringed by their Contribution(s) | |||

| alone or by combination of their Contribution(s) with the Work to which such | |||

| Contribution(s) was submitted. If You institute patent litigation against | |||

| any entity (including a cross-claim or counterclaim in a lawsuit) alleging | |||

| that the Work or a Contribution incorporated within the Work constitutes direct | |||

| or contributory patent infringement, then any patent licenses granted to You | |||

| under this License for that Work shall terminate as of the date such litigation | |||

| is filed. | |||

| 4. Redistribution. You may reproduce and distribute copies of the Work or | |||

| Derivative Works thereof in any medium, with or without modifications, and | |||

| in Source or Object form, provided that You meet the following conditions: | |||

| (a) You must give any other recipients of the Work or Derivative Works a copy | |||

| of this License; and | |||

| (b) You must cause any modified files to carry prominent notices stating that | |||

| You changed the files; and | |||

| (c) You must retain, in the Source form of any Derivative Works that You distribute, | |||

| all copyright, patent, trademark, and attribution notices from the Source | |||

| form of the Work, excluding those notices that do not pertain to any part | |||

| of the Derivative Works; and | |||

| (d) If the Work includes a "NOTICE" text file as part of its distribution, | |||

| then any Derivative Works that You distribute must include a readable copy | |||

| of the attribution notices contained within such NOTICE file, excluding those | |||

| notices that do not pertain to any part of the Derivative Works, in at least | |||

| one of the following places: within a NOTICE text file distributed as part | |||

| of the Derivative Works; within the Source form or documentation, if provided | |||

| along with the Derivative Works; or, within a display generated by the Derivative | |||

| Works, if and wherever such third-party notices normally appear. The contents | |||

| of the NOTICE file are for informational purposes only and do not modify the | |||

| License. You may add Your own attribution notices within Derivative Works | |||

| that You distribute, alongside or as an addendum to the NOTICE text from the | |||

| Work, provided that such additional attribution notices cannot be construed | |||

| as modifying the License. | |||

| You may add Your own copyright statement to Your modifications and may provide | |||

| additional or different license terms and conditions for use, reproduction, | |||

| or distribution of Your modifications, or for any such Derivative Works as | |||

| a whole, provided Your use, reproduction, and distribution of the Work otherwise | |||

| complies with the conditions stated in this License. | |||

| 5. Submission of Contributions. Unless You explicitly state otherwise, any | |||

| Contribution intentionally submitted for inclusion in the Work by You to the | |||

| Licensor shall be under the terms and conditions of this License, without | |||

| any additional terms or conditions. Notwithstanding the above, nothing herein | |||

| shall supersede or modify the terms of any separate license agreement you | |||

| may have executed with Licensor regarding such Contributions. | |||

| 6. Trademarks. This License does not grant permission to use the trade names, | |||

| trademarks, service marks, or product names of the Licensor, except as required | |||

| for reasonable and customary use in describing the origin of the Work and | |||

| reproducing the content of the NOTICE file. | |||

| 7. Disclaimer of Warranty. Unless required by applicable law or agreed to | |||

| in writing, Licensor provides the Work (and each Contributor provides its | |||

| Contributions) on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY | |||

| KIND, either express or implied, including, without limitation, any warranties | |||

| or conditions of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR | |||

| A PARTICULAR PURPOSE. You are solely responsible for determining the appropriateness | |||

| of using or redistributing the Work and assume any risks associated with Your | |||

| exercise of permissions under this License. | |||

| 8. Limitation of Liability. In no event and under no legal theory, whether | |||

| in tort (including negligence), contract, or otherwise, unless required by | |||

| applicable law (such as deliberate and grossly negligent acts) or agreed to | |||

| in writing, shall any Contributor be liable to You for damages, including | |||

| any direct, indirect, special, incidental, or consequential damages of any | |||

| character arising as a result of this License or out of the use or inability | |||

| to use the Work (including but not limited to damages for loss of goodwill, | |||

| work stoppage, computer failure or malfunction, or any and all other commercial | |||

| damages or losses), even if such Contributor has been advised of the possibility | |||

| of such damages. | |||

| 9. Accepting Warranty or Additional Liability. While redistributing the Work | |||

| or Derivative Works thereof, You may choose to offer, and charge a fee for, | |||

| acceptance of support, warranty, indemnity, or other liability obligations | |||

| and/or rights consistent with this License. However, in accepting such obligations, | |||

| You may act only on Your own behalf and on Your sole responsibility, not on | |||

| behalf of any other Contributor, and only if You agree to indemnify, defend, | |||

| and hold each Contributor harmless for any liability incurred by, or claims | |||

| asserted against, such Contributor by reason of your accepting any such warranty | |||

| or additional liability. END OF TERMS AND CONDITIONS | |||

| APPENDIX: How to apply the Apache License to your work. | |||

| To apply the Apache License to your work, attach the following boilerplate | |||

| notice, with the fields enclosed by brackets "[]" replaced with your own identifying | |||

| information. (Don't include the brackets!) The text should be enclosed in | |||

| the appropriate comment syntax for the file format. We also recommend that | |||

| a file or class name and description of purpose be included on the same "printed | |||

| page" as the copyright notice for easier identification within third-party | |||

| archives. | |||

| Copyright [yyyy] [name of copyright owner] | |||

| Licensed under the Apache License, Version 2.0 (the "License"); | |||

| you may not use this file except in compliance with the License. | |||

| You may obtain a copy of the License at | |||

| http://www.apache.org/licenses/LICENSE-2.0 | |||

| Unless required by applicable law or agreed to in writing, software | |||

| distributed under the License is distributed on an "AS IS" BASIS, | |||

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. | |||

| See the License for the specific language governing permissions and | |||

| limitations under the License. | |||

+ 211

- 0

LICENSE.rst

| @@ -0,0 +1,211 @@ | |||

| License | |||

| ======= | |||

| Copyright (c) 2016~2018 The TensorLayer contributors. All rights reserved. | |||

| Apache License | |||

| Version 2.0, January 2004 | |||

| http://www.apache.org/licenses/ | |||

| TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION | |||

| 1. Definitions. | |||

| "License" shall mean the terms and conditions for use, reproduction, | |||

| and distribution as defined by Sections 1 through 9 of this document. | |||

| "Licensor" shall mean the copyright owner or entity authorized by | |||

| the copyright owner that is granting the License. | |||

| "Legal Entity" shall mean the union of the acting entity and all | |||

| other entities that control, are controlled by, or are under common | |||

| control with that entity. For the purposes of this definition, | |||

| "control" means (i) the power, direct or indirect, to cause the | |||

| direction or management of such entity, whether by contract or | |||

| otherwise, or (ii) ownership of fifty percent (50%) or more of the | |||

| outstanding shares, or (iii) beneficial ownership of such entity. | |||

| "You" (or "Your") shall mean an individual or Legal Entity | |||

| exercising permissions granted by this License. | |||

| "Source" form shall mean the preferred form for making modifications, | |||

| including but not limited to software source code, documentation | |||

| source, and configuration files. | |||

| "Object" form shall mean any form resulting from mechanical | |||

| transformation or translation of a Source form, including but | |||

| not limited to compiled object code, generated documentation, | |||

| and conversions to other media types. | |||

| "Work" shall mean the work of authorship, whether in Source or | |||

| Object form, made available under the License, as indicated by a | |||

| copyright notice that is included in or attached to the work | |||

| (an example is provided in the Appendix below). | |||

| "Derivative Works" shall mean any work, whether in Source or Object | |||

| form, that is based on (or derived from) the Work and for which the | |||

| editorial revisions, annotations, elaborations, or other modifications | |||

| represent, as a whole, an original work of authorship. For the purposes | |||

| of this License, Derivative Works shall not include works that remain | |||

| separable from, or merely link (or bind by name) to the interfaces of, | |||

| the Work and Derivative Works thereof. | |||

| "Contribution" shall mean any work of authorship, including | |||

| the original version of the Work and any modifications or additions | |||

| to that Work or Derivative Works thereof, that is intentionally | |||

| submitted to Licensor for inclusion in the Work by the copyright owner | |||

| or by an individual or Legal Entity authorized to submit on behalf of | |||

| the copyright owner. For the purposes of this definition, "submitted" | |||

| means any form of electronic, verbal, or written communication sent | |||

| to the Licensor or its representatives, including but not limited to | |||

| communication on electronic mailing lists, source code control systems, | |||

| and issue tracking systems that are managed by, or on behalf of, the | |||

| Licensor for the purpose of discussing and improving the Work, but | |||

| excluding communication that is conspicuously marked or otherwise | |||

| designated in writing by the copyright owner as "Not a Contribution." | |||

| "Contributor" shall mean Licensor and any individual or Legal Entity | |||

| on behalf of whom a Contribution has been received by Licensor and | |||

| subsequently incorporated within the Work. | |||

| 2. Grant of Copyright License. Subject to the terms and conditions of | |||

| this License, each Contributor hereby grants to You a perpetual, | |||

| worldwide, non-exclusive, no-charge, royalty-free, irrevocable | |||

| copyright license to reproduce, prepare Derivative Works of, | |||

| publicly display, publicly perform, sublicense, and distribute the | |||

| Work and such Derivative Works in Source or Object form. | |||

| 3. Grant of Patent License. Subject to the terms and conditions of | |||

| this License, each Contributor hereby grants to You a perpetual, | |||

| worldwide, non-exclusive, no-charge, royalty-free, irrevocable | |||

| (except as stated in this section) patent license to make, have made, | |||

| use, offer to sell, sell, import, and otherwise transfer the Work, | |||

| where such license applies only to those patent claims licensable | |||

| by such Contributor that are necessarily infringed by their | |||

| Contribution(s) alone or by combination of their Contribution(s) | |||

| with the Work to which such Contribution(s) was submitted. If You | |||

| institute patent litigation against any entity (including a | |||

| cross-claim or counterclaim in a lawsuit) alleging that the Work | |||

| or a Contribution incorporated within the Work constitutes direct | |||

| or contributory patent infringement, then any patent licenses | |||

| granted to You under this License for that Work shall terminate | |||

| as of the date such litigation is filed. | |||

| 4. Redistribution. You may reproduce and distribute copies of the | |||

| Work or Derivative Works thereof in any medium, with or without | |||

| modifications, and in Source or Object form, provided that You | |||

| meet the following conditions: | |||

| (a) You must give any other recipients of the Work or | |||

| Derivative Works a copy of this License; and | |||

| (b) You must cause any modified files to carry prominent notices | |||

| stating that You changed the files; and | |||

| (c) You must retain, in the Source form of any Derivative Works | |||

| that You distribute, all copyright, patent, trademark, and | |||

| attribution notices from the Source form of the Work, | |||

| excluding those notices that do not pertain to any part of | |||

| the Derivative Works; and | |||

| (d) If the Work includes a "NOTICE" text file as part of its | |||

| distribution, then any Derivative Works that You distribute must | |||

| include a readable copy of the attribution notices contained | |||

| within such NOTICE file, excluding those notices that do not | |||

| pertain to any part of the Derivative Works, in at least one | |||

| of the following places: within a NOTICE text file distributed | |||

| as part of the Derivative Works; within the Source form or | |||

| documentation, if provided along with the Derivative Works; or, | |||

| within a display generated by the Derivative Works, if and | |||

| wherever such third-party notices normally appear. The contents | |||

| of the NOTICE file are for informational purposes only and | |||

| do not modify the License. You may add Your own attribution | |||

| notices within Derivative Works that You distribute, alongside | |||

| or as an addendum to the NOTICE text from the Work, provided | |||

| that such additional attribution notices cannot be construed | |||

| as modifying the License. | |||

| You may add Your own copyright statement to Your modifications and | |||

| may provide additional or different license terms and conditions | |||

| for use, reproduction, or distribution of Your modifications, or | |||

| for any such Derivative Works as a whole, provided Your use, | |||

| reproduction, and distribution of the Work otherwise complies with | |||

| the conditions stated in this License. | |||

| 5. Submission of Contributions. Unless You explicitly state otherwise, | |||

| any Contribution intentionally submitted for inclusion in the Work | |||

| by You to the Licensor shall be under the terms and conditions of | |||

| this License, without any additional terms or conditions. | |||

| Notwithstanding the above, nothing herein shall supersede or modify | |||

| the terms of any separate license agreement you may have executed | |||

| with Licensor regarding such Contributions. | |||

| 6. Trademarks. This License does not grant permission to use the trade | |||