3 changed files with 24 additions and 8 deletions


+16 -3 README.md

BIN doc/result.png

+8 -5 main.py

README.md (+16 -3)

@@ -2,21 +2,28 @@

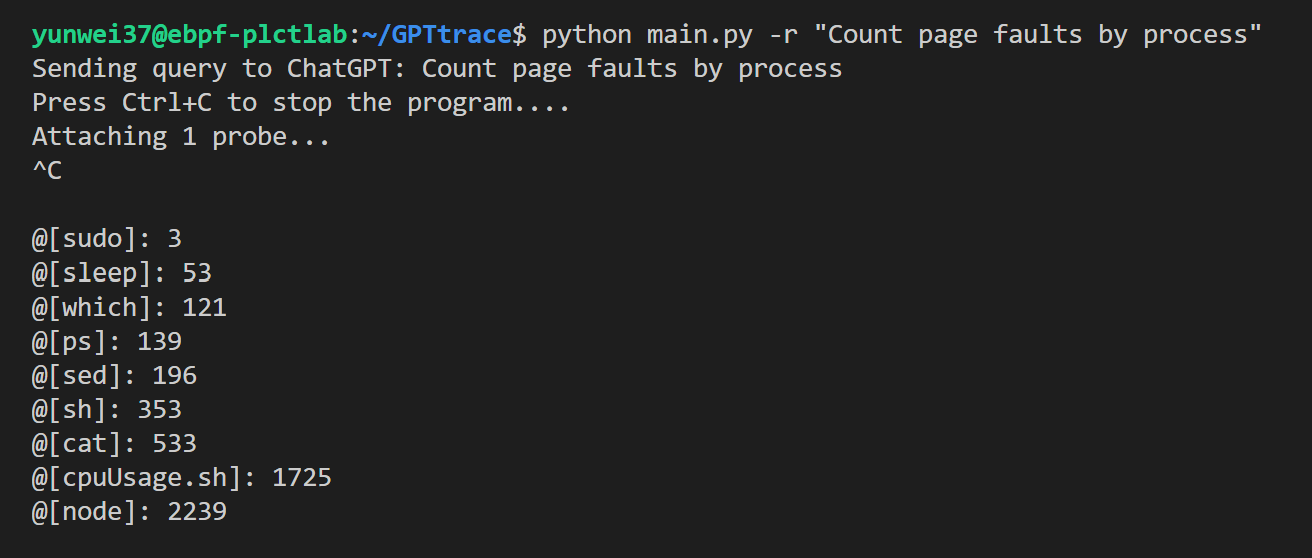

| Before | After |
|---|---|
| Generate eBPF programs and tracing with ChatGPT and natural language | Generate eBPF programs and tracing with ChatGPT and natural language |
| For example: | |
| | ## Introduction |
| | - Tracing with natural language |
|  |  |
| | - Generate eBPF programs with natural language |
| ## Usage | ## Usage |
| ```console | ```console |
| python main.py | |
| usage: GPTtrace [-h] [-e \| -r TEXT] [-u UUID] [-t ACCESS_TOKEN] | |
| | $ python main.py |
| | usage: GPTtrace [-h] [-e \| -v \| -r TEXT] [-u UUID] [-t ACCESS_TOKEN] |
| Use ChatGPT to write eBPF programs (bpftrace, etc.) | Use ChatGPT to write eBPF programs (bpftrace, etc.) |
| optional arguments: | optional arguments: |
| -h, --help show this help message and exit | -h, --help show this help message and exit |
| -e, --explain Let ChatGPT explain what's eBPF | -e, --explain Let ChatGPT explain what's eBPF |
| | -v, --verbose Print the receive |
| -r TEXT, --run TEXT Generate commands using your input with ChatGPT, and run it | -r TEXT, --run TEXT Generate commands using your input with ChatGPT, and run it |
| -u UUID, --uuid UUID Conversion UUID to use, or passed through environment variable `GPTTRACE_CONV_UUID` | -u UUID, --uuid UUID Conversion UUID to use, or passed through environment variable `GPTTRACE_CONV_UUID` |
| -t ACCESS_TOKEN, --access-token ACCESS_TOKEN | -t ACCESS_TOKEN, --access-token ACCESS_TOKEN |

@@ -36,3 +43,9 @@ optional arguments:

| Before | After |
|---|---|
| - Count LLC cache misses by process name and PID (uses PMCs): | - Count LLC cache misses by process name and PID (uses PMCs): |
| - Profile user-level stacks at 99 Hertz, for PID 189: | - Profile user-level stacks at 99 Hertz, for PID 189: |
| - Files opened, for processes in the root cgroup-v2 | - Files opened, for processes in the root cgroup-v2 |
| | ## 🔗 Links |
| | - Detailed documents and tutorials about how we train ChatGPT to write eBPF programs: https://github.com/eunomia-bpf/bpf-developer-tutorial (an eBPF developer tutorial based on CO-RE (write once, run everywhere) libbpf: learn eBPF step by step through 20 small tools, and try to teach ChatGPT to write eBPF programs) |
| | - bpftrace: https://github.com/iovisor/bpftrace |
| | - ChatGPT: https://chat.openai.com/ |

doc/result.png (binary)

main.py (+8 -5)

@@ -19,6 +19,8 @@ def main():

| Before | After |
|---|---|
| group = parser.add_mutually_exclusive_group() | group = parser.add_mutually_exclusive_group() |
| group.add_argument( | group.add_argument( |
| "-e", "--explain", help="Let ChatGPT explain what's eBPF", action="store_true") | "-e", "--explain", help="Let ChatGPT explain what's eBPF", action="store_true") |
| | group.add_argument( |
| | "-v", "--verbose", help="Print the receive ", action="store_true") |
| group.add_argument( | group.add_argument( |
| "-r", "--run", help="Generate commands using your input with ChatGPT, and run it", action="store", metavar="TEXT") | "-r", "--run", help="Generate commands using your input with ChatGPT, and run it", action="store", metavar="TEXT") |
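Review note on this hunk: `-v` is registered on the same mutually exclusive group as `-e` and `-r`, so argparse rejects `-v -r TEXT`, and `args.verbose` cannot be true inside the `--run` branch. A minimal sketch of one possible alternative follows, assuming the intent is for `--verbose` to modify `--run`. The `build_parser` helper is hypothetical, help strings are omitted, and the parser is a stripped-down stand-in rather than the project's full parser.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical stand-in for the parser built in main()."""
    parser = argparse.ArgumentParser(prog="GPTtrace")
    # -e and -r select what the tool does, so they stay mutually exclusive.
    group = parser.add_mutually_exclusive_group()
    group.add_argument("-e", "--explain", action="store_true")
    group.add_argument("-r", "--run", action="store", metavar="TEXT")
    # Assumption about intent: -v is a modifier, so it is registered on the
    # parser itself and can be combined with either mode.
    parser.add_argument("-v", "--verbose", action="store_true")
    return parser


args = build_parser().parse_args(["-v", "-r", "count syscalls by process"])
print(args.verbose, args.run)
```

With this layout `-e -r TEXT` is still rejected, while `-v -r TEXT` parses as expected.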

@@ -40,13 +42,15 @@ def main():

| Before | After |
|---|---|
| return | return |
| chatbot = Chatbot(config={"access_token": access_token}) | chatbot = Chatbot(config={"access_token": access_token}) |
| if args.explain: | if args.explain: |
| generate_result(chatbot, "解释一下什么是 eBPF", conv_uuid, True) | |
| | generate_result(chatbot, "Explain what's eBPF", conv_uuid, True) |
| elif args.run is not None: | elif args.run is not None: |
| desc: str = args.run | desc: str = args.run |
| ret_val = generate_result(chatbot, desc, conv_uuid, True) | |
| | print("Sending query to ChatGPT: " + desc) |
| | ret_val = generate_result(chatbot, construct_prompt(desc), conv_uuid, args.verbose) |
| # print(ret_val) | # print(ret_val) |
| parsed = make_executable_command(ret_val) | parsed = make_executable_command(ret_val) |
| print(f"Command to run: {parsed}") | |
| | # print(f"Command to run: {parsed}") |
| | print("Press Ctrl+C to stop the program....") |
| os.system("sudo " + parsed) | os.system("sudo " + parsed) |
| def construct_prompt(text: str) -> str: | def construct_prompt(text: str) -> str: |
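Taken together with the change to `generate_result`, this hunk moves the `construct_prompt` call out of `generate_result` and into the `--run` branch, so the `--explain` query is now sent without the prompt wrapper. A minimal sketch of that split follows. It assumes a stand-in `fake_ask` callable in place of `Chatbot.ask` and a hypothetical prompt template, since the real body of `construct_prompt` is not shown in this diff.

```python
from typing import Callable, List


def construct_prompt(text: str) -> str:
    # Hypothetical template: the real prompt wording is not shown in this diff.
    return f"Write a bpftrace command for this request: {text}"


def generate_result(ask: Callable[[str], str], text: str) -> str:
    # After the change, the text is forwarded to the model unchanged.
    return ask(text)


sent: List[str] = []


def fake_ask(prompt: str) -> str:
    # Stand-in for Chatbot.ask (an assumption): records the prompt it receives.
    sent.append(prompt)
    return "ok"


generate_result(fake_ask, "Explain what's eBPF")               # --explain path
generate_result(fake_ask, construct_prompt("count syscalls"))  # --run path
print(sent)
```

Keeping the wrapping at the call site lets each mode decide whether the prompt template applies.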

@@ -74,10 +78,9 @@ def make_executable_command(command: str) -> str:

| Before | After |
|---|---|
| def generate_result(bot: Chatbot, text: str, session: str = None, print_out: bool = False) -> str: | def generate_result(bot: Chatbot, text: str, session: str = None, print_out: bool = False) -> str: |
| from io import StringIO | from io import StringIO |
| prev_text = "" | prev_text = "" |
| query_text = construct_prompt(text) | |
| buf = StringIO() | buf = StringIO() |
| for data in bot.ask( | for data in bot.ask( |
| query_text, conversation_id=session | |
| | text, conversation_id=session |
| ): | ): |
| message = data["message"][len(prev_text):] | message = data["message"][len(prev_text):] |
| if print_out: | if print_out: |