commit

45915b2ba1

100 changed files with 582826 additions and 0 deletions

Unified View

Diff Options

-

+6 -0.idea/vcs.xml

-

+229 -0.idea/workspace.xml

-

+91483 -0Models+K-Means/data/SIR_dataset_processed.json

-

+9232 -0Models+K-Means/data/SIR_test_set.json

-

+73115 -0Models+K-Means/data/SIR_train_set.json

-

+9141 -0Models+K-Means/data/SIR_validation_set.json

-

+46 -0Models+K-Means/data/label_word_ids.json

-

+37 -0Models+K-Means/data/label_word_ids_CVSS2.json

-

+9742 -0Models+K-Means/dataset_more/test.csv

-

+63856 -0Models+K-Means/dataset_more/train.csv

-

+51 -0Models+K-Means/main/CVSSDataset.py

-

+38 -0Models+K-Means/main/CVSS_Calculator.py

-

+530 -0Models+K-Means/main/K-Means/K-Means_cluster.ipynb

-

+690 -0Models+K-Means/main/K-Means/KMeans+vectorString.ipynb

-

+7038 -0Models+K-Means/main/K-Means/PCA+KMeans_cluster.csv

-

+7038 -0Models+K-Means/main/K-Means/PCA+KMeans聚类.csv

-

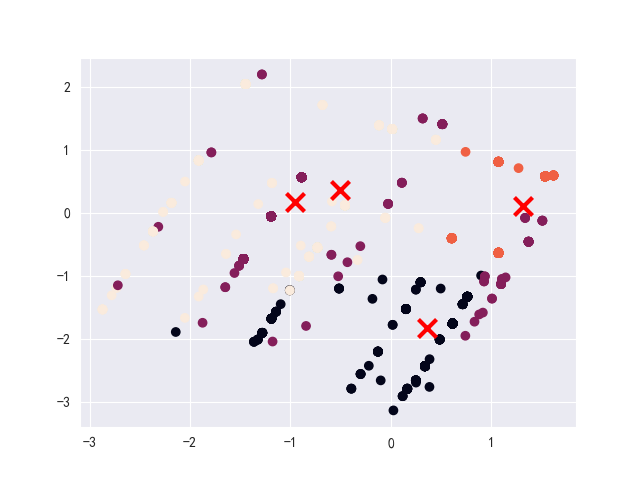

BINModels+K-Means/main/K-Means/cluster.png

-

+17227 -0Models+K-Means/main/K-Means/cluster.svg

-

+5966 -0Models+K-Means/main/K-Means/cluster1.svg

-

+999 -0Models+K-Means/main/K-Means/hard.ipynb

-

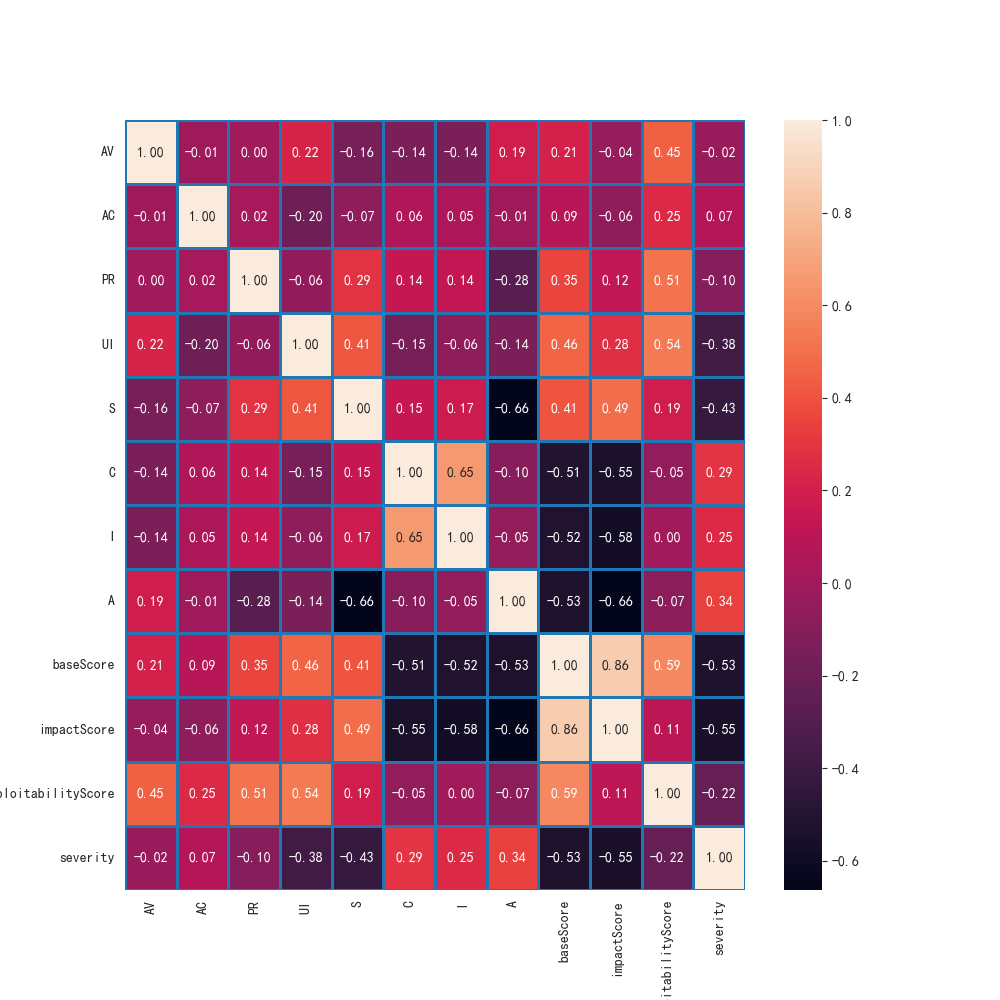

BINModels+K-Means/main/K-Means/heatmap.png

-

+3387 -0Models+K-Means/main/K-Means/heatmap.svg

-

BINModels+K-Means/main/__pycache__/CVSSDataset.cpython-39.pyc

-

BINModels+K-Means/main/__pycache__/lemmatization.cpython-39.pyc

-

BINModels+K-Means/main/__pycache__/remove_stop_words.cpython-39.pyc

-

BINModels+K-Means/main/__pycache__/stemmatization.cpython-39.pyc

-

+687 -0Models+K-Means/main/csv_process.ipynb

-

+533 -0Models+K-Means/main/decisionTree/test_decisionTree.ipynb

-

+67 -0Models+K-Means/main/json2csv.py

-

+66 -0Models+K-Means/main/jsonToCsv.py

-

+56 -0Models+K-Means/main/lemmatization.py

-

BINModels+K-Means/main/output/cluster.png

-

BINModels+K-Means/main/output/heatmap.png

-

+0 -0Models+K-Means/main/output/output1.csv

-

+6331 -0Models+K-Means/main/output/output1_bert_nvd.csv

-

+6331 -0Models+K-Means/main/output/output1_distilbert_nvd.csv

-

+15962 -0Models+K-Means/main/output/output1_last.csv

-

+6331 -0Models+K-Means/main/output/output_albert.csv

-

+6331 -0Models+K-Means/main/output/roberta.csv

-

+20 -0Models+K-Means/main/remove_stop_words.py

-

BINModels+K-Means/main/requirements.txt

-

+20 -0Models+K-Means/main/stemmatization.py

-

+11 -0Models+K-Means/main/tagRemove.py

-

+291 -0Models+K-Means/main/test.py

-

+19 -0Models+K-Means/main/test.sh

-

+2 -0Models+K-Means/main/test_sh.py

-

+269 -0Models+K-Means/main/train.py

-

+14 -0Models+K-Means/main/train.sh

-

BINModels+K-Means/nltk_data/corpora/stopwords.zip

-

+32 -0Models+K-Means/nltk_data/corpora/stopwords/README

-

+754 -0Models+K-Means/nltk_data/corpora/stopwords/arabic

-

+165 -0Models+K-Means/nltk_data/corpora/stopwords/azerbaijani

-

+326 -0Models+K-Means/nltk_data/corpora/stopwords/basque

-

+398 -0Models+K-Means/nltk_data/corpora/stopwords/bengali

-

+278 -0Models+K-Means/nltk_data/corpora/stopwords/catalan

-

+841 -0Models+K-Means/nltk_data/corpora/stopwords/chinese

-

+94 -0Models+K-Means/nltk_data/corpora/stopwords/danish

-

+101 -0Models+K-Means/nltk_data/corpora/stopwords/dutch

-

+179 -0Models+K-Means/nltk_data/corpora/stopwords/english

-

+235 -0Models+K-Means/nltk_data/corpora/stopwords/finnish

-

+157 -0Models+K-Means/nltk_data/corpora/stopwords/french

-

+232 -0Models+K-Means/nltk_data/corpora/stopwords/german

-

+265 -0Models+K-Means/nltk_data/corpora/stopwords/greek

-

+221 -0Models+K-Means/nltk_data/corpora/stopwords/hebrew

-

+1036 -0Models+K-Means/nltk_data/corpora/stopwords/hinglish

-

+199 -0Models+K-Means/nltk_data/corpora/stopwords/hungarian

-

+758 -0Models+K-Means/nltk_data/corpora/stopwords/indonesian

-

+279 -0Models+K-Means/nltk_data/corpora/stopwords/italian

-

+380 -0Models+K-Means/nltk_data/corpora/stopwords/kazakh

-

+255 -0Models+K-Means/nltk_data/corpora/stopwords/nepali

-

+176 -0Models+K-Means/nltk_data/corpora/stopwords/norwegian

-

+207 -0Models+K-Means/nltk_data/corpora/stopwords/portuguese

-

+356 -0Models+K-Means/nltk_data/corpora/stopwords/romanian

-

+151 -0Models+K-Means/nltk_data/corpora/stopwords/russian

-

+1784 -0Models+K-Means/nltk_data/corpora/stopwords/slovene

-

+313 -0Models+K-Means/nltk_data/corpora/stopwords/spanish

-

+114 -0Models+K-Means/nltk_data/corpora/stopwords/swedish

-

+163 -0Models+K-Means/nltk_data/corpora/stopwords/tajik

-

+53 -0Models+K-Means/nltk_data/corpora/stopwords/turkish

-

BINModels+K-Means/nltk_data/tokenizers/punkt_tab.zip

-

+98 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/README

-

+118 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/czech/abbrev_types.txt

-

+96 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/czech/collocations.tab

-

+52789 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/czech/ortho_context.tab

-

+54 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/czech/sent_starters.txt

-

+211 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/danish/abbrev_types.txt

-

+101 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/danish/collocations.tab

-

+53913 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/danish/ortho_context.tab

-

+64 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/danish/sent_starters.txt

-

+99 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/dutch/abbrev_types.txt

-

+37 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/dutch/collocations.tab

-

+32208 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/dutch/ortho_context.tab

-

+54 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/dutch/sent_starters.txt

-

+156 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/english/abbrev_types.txt

-

+37 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/english/collocations.tab

-

+20366 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/english/ortho_context.tab

-

+39 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/english/sent_starters.txt

-

+48 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/estonian/abbrev_types.txt

-

+100 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/estonian/collocations.tab

-

+68544 -0Models+K-Means/nltk_data/tokenizers/punkt_tab/estonian/ortho_context.tab

+ 6

- 0

.idea/vcs.xml

View File

| @@ -0,0 +1,6 @@ | |||||

| <?xml version="1.0" encoding="UTF-8"?> | |||||

| <project version="4"> | |||||

| <component name="VcsDirectoryMappings"> | |||||

| <mapping directory="$PROJECT_DIR$" vcs="Git" /> | |||||

| </component> | |||||

| </project> | |||||

+ 229

- 0

.idea/workspace.xml

View File

| @@ -0,0 +1,229 @@ | |||||

| <?xml version="1.0" encoding="UTF-8"?> | |||||

| <project version="4"> | |||||

| <component name="AutoImportSettings"> | |||||

| <option name="autoReloadType" value="SELECTIVE" /> | |||||

| </component> | |||||

| <component name="ChangeListManager"> | |||||

| <list default="true" id="7cb15b16-868b-48e5-9f8c-d95327a0b928" name="更改" comment="" /> | |||||

| <option name="SHOW_DIALOG" value="false" /> | |||||

| <option name="HIGHLIGHT_CONFLICTS" value="true" /> | |||||

| <option name="HIGHLIGHT_NON_ACTIVE_CHANGELIST" value="false" /> | |||||

| <option name="LAST_RESOLUTION" value="IGNORE" /> | |||||

| </component> | |||||

| <component name="FileTemplateManagerImpl"> | |||||

| <option name="RECENT_TEMPLATES"> | |||||

| <list> | |||||

| <option value="HTML File" /> | |||||

| <option value="Python Script" /> | |||||

| </list> | |||||

| </option> | |||||

| </component> | |||||

| <component name="Git.Settings"> | |||||

| <option name="RECENT_GIT_ROOT_PATH" value="$PROJECT_DIR$" /> | |||||

| </component> | |||||

| <component name="MarkdownSettingsMigration"> | |||||

| <option name="stateVersion" value="1" /> | |||||

| </component> | |||||

| <component name="ProjectColorInfo">{ | |||||

| "associatedIndex": 2 | |||||

| }</component> | |||||

| <component name="ProjectId" id="2wQLGPO7Ae3L3IOpkLnnyaEAHnt" /> | |||||

| <component name="ProjectLevelVcsManager" settingsEditedManually="true" /> | |||||

| <component name="ProjectViewState"> | |||||

| <option name="hideEmptyMiddlePackages" value="true" /> | |||||

| <option name="showLibraryContents" value="true" /> | |||||

| </component> | |||||

| <component name="PropertiesComponent">{ | |||||

| "keyToString": { | |||||

| "DefaultHtmlFileTemplate": "HTML File", | |||||

| "RunOnceActivity.OpenProjectViewOnStart": "true", | |||||

| "RunOnceActivity.ShowReadmeOnStart": "true", | |||||

| "WebServerToolWindowFactoryState": "false", | |||||

| "ignore.virus.scanning.warn.message": "true", | |||||

| "last_opened_file_path": "C:/Users/lw/Desktop/seeingData/static/img", | |||||

| "node.js.detected.package.eslint": "true", | |||||

| "node.js.detected.package.tslint": "true", | |||||

| "node.js.selected.package.eslint": "(autodetect)", | |||||

| "node.js.selected.package.tslint": "(autodetect)", | |||||

| "vue.rearranger.settings.migration": "true" | |||||

| } | |||||

| }</component> | |||||

| <component name="RecentsManager"> | |||||

| <key name="CopyFile.RECENT_KEYS"> | |||||

| <recent name="C:\Users\lw\Desktop\seeingData\static\img" /> | |||||

| <recent name="C:\Users\lw\Desktop\seeingData" /> | |||||

| <recent name="C:\Users\lw\Desktop\seeingData\static" /> | |||||

| <recent name="C:\Users\lw\Desktop\seeingData\templates" /> | |||||

| <recent name="C:\Users\lw\Desktop\seeingData\Data" /> | |||||

| </key> | |||||

| <key name="MoveFile.RECENT_KEYS"> | |||||

| <recent name="C:\Users\lw\Desktop\seeingData\static" /> | |||||

| </key> | |||||

| </component> | |||||

| <component name="RunManager" selected="Python.app"> | |||||

| <configuration name="main.html" type="JavascriptDebugType" temporary="true" nameIsGenerated="true" uri="http://localhost:63342/seeingData/templates/main.html" useBuiltInWebServerPort="true"> | |||||

| <method v="2" /> | |||||

| </configuration> | |||||

| <configuration name="app" type="PythonConfigurationType" factoryName="Python" temporary="true" nameIsGenerated="true"> | |||||

| <module name="seeingData" /> | |||||

| <option name="INTERPRETER_OPTIONS" value="" /> | |||||

| <option name="PARENT_ENVS" value="true" /> | |||||

| <envs> | |||||

| <env name="PYTHONUNBUFFERED" value="1" /> | |||||

| </envs> | |||||

| <option name="SDK_HOME" value="" /> | |||||

| <option name="WORKING_DIRECTORY" value="$PROJECT_DIR$" /> | |||||

| <option name="IS_MODULE_SDK" value="true" /> | |||||

| <option name="ADD_CONTENT_ROOTS" value="true" /> | |||||

| <option name="ADD_SOURCE_ROOTS" value="true" /> | |||||

| <EXTENSION ID="PythonCoverageRunConfigurationExtension" runner="coverage.py" /> | |||||

| <option name="SCRIPT_NAME" value="$PROJECT_DIR$/app.py" /> | |||||

| <option name="PARAMETERS" value="" /> | |||||

| <option name="SHOW_COMMAND_LINE" value="false" /> | |||||

| <option name="EMULATE_TERMINAL" value="false" /> | |||||

| <option name="MODULE_MODE" value="false" /> | |||||

| <option name="REDIRECT_INPUT" value="false" /> | |||||

| <option name="INPUT_FILE" value="" /> | |||||

| <method v="2" /> | |||||

| </configuration> | |||||

| <configuration name="cal (1)" type="PythonConfigurationType" factoryName="Python" temporary="true" nameIsGenerated="true"> | |||||

| <module name="seeingData" /> | |||||

| <option name="INTERPRETER_OPTIONS" value="" /> | |||||

| <option name="PARENT_ENVS" value="true" /> | |||||

| <envs> | |||||

| <env name="PYTHONUNBUFFERED" value="1" /> | |||||

| </envs> | |||||

| <option name="SDK_HOME" value="" /> | |||||

| <option name="WORKING_DIRECTORY" value="$PROJECT_DIR$/calculate" /> | |||||

| <option name="IS_MODULE_SDK" value="true" /> | |||||

| <option name="ADD_CONTENT_ROOTS" value="true" /> | |||||

| <option name="ADD_SOURCE_ROOTS" value="true" /> | |||||

| <EXTENSION ID="PythonCoverageRunConfigurationExtension" runner="coverage.py" /> | |||||

| <option name="SCRIPT_NAME" value="$PROJECT_DIR$/calculate/cal.py" /> | |||||

| <option name="PARAMETERS" value="" /> | |||||

| <option name="SHOW_COMMAND_LINE" value="false" /> | |||||

| <option name="EMULATE_TERMINAL" value="false" /> | |||||

| <option name="MODULE_MODE" value="false" /> | |||||

| <option name="REDIRECT_INPUT" value="false" /> | |||||

| <option name="INPUT_FILE" value="" /> | |||||

| <method v="2" /> | |||||

| </configuration> | |||||

| <configuration name="exploitabilityMSE" type="PythonConfigurationType" factoryName="Python" temporary="true" nameIsGenerated="true"> | |||||

| <module name="seeingData" /> | |||||

| <option name="INTERPRETER_OPTIONS" value="" /> | |||||

| <option name="PARENT_ENVS" value="true" /> | |||||

| <envs> | |||||

| <env name="PYTHONUNBUFFERED" value="1" /> | |||||

| </envs> | |||||

| <option name="SDK_HOME" value="" /> | |||||

| <option name="WORKING_DIRECTORY" value="$PROJECT_DIR$/calculate" /> | |||||

| <option name="IS_MODULE_SDK" value="true" /> | |||||

| <option name="ADD_CONTENT_ROOTS" value="true" /> | |||||

| <option name="ADD_SOURCE_ROOTS" value="true" /> | |||||

| <EXTENSION ID="PythonCoverageRunConfigurationExtension" runner="coverage.py" /> | |||||

| <option name="SCRIPT_NAME" value="$PROJECT_DIR$/calculate/exploitabilityMSE.py" /> | |||||

| <option name="PARAMETERS" value="" /> | |||||

| <option name="SHOW_COMMAND_LINE" value="false" /> | |||||

| <option name="EMULATE_TERMINAL" value="false" /> | |||||

| <option name="MODULE_MODE" value="false" /> | |||||

| <option name="REDIRECT_INPUT" value="false" /> | |||||

| <option name="INPUT_FILE" value="" /> | |||||

| <method v="2" /> | |||||

| </configuration> | |||||

| <configuration name="impactMse" type="PythonConfigurationType" factoryName="Python" temporary="true" nameIsGenerated="true"> | |||||

| <module name="seeingData" /> | |||||

| <option name="INTERPRETER_OPTIONS" value="" /> | |||||

| <option name="PARENT_ENVS" value="true" /> | |||||

| <envs> | |||||

| <env name="PYTHONUNBUFFERED" value="1" /> | |||||

| </envs> | |||||

| <option name="SDK_HOME" value="" /> | |||||

| <option name="WORKING_DIRECTORY" value="$PROJECT_DIR$/calculate" /> | |||||

| <option name="IS_MODULE_SDK" value="true" /> | |||||

| <option name="ADD_CONTENT_ROOTS" value="true" /> | |||||

| <option name="ADD_SOURCE_ROOTS" value="true" /> | |||||

| <EXTENSION ID="PythonCoverageRunConfigurationExtension" runner="coverage.py" /> | |||||

| <option name="SCRIPT_NAME" value="$PROJECT_DIR$/calculate/impactMse.py" /> | |||||

| <option name="PARAMETERS" value="" /> | |||||

| <option name="SHOW_COMMAND_LINE" value="false" /> | |||||

| <option name="EMULATE_TERMINAL" value="false" /> | |||||

| <option name="MODULE_MODE" value="false" /> | |||||

| <option name="REDIRECT_INPUT" value="false" /> | |||||

| <option name="INPUT_FILE" value="" /> | |||||

| <method v="2" /> | |||||

| </configuration> | |||||

| <configuration name="main" type="PythonConfigurationType" factoryName="Python" nameIsGenerated="true"> | |||||

| <module name="seeingData" /> | |||||

| <option name="INTERPRETER_OPTIONS" value="" /> | |||||

| <option name="PARENT_ENVS" value="true" /> | |||||

| <envs> | |||||

| <env name="PYTHONUNBUFFERED" value="1" /> | |||||

| </envs> | |||||

| <option name="SDK_HOME" value="" /> | |||||

| <option name="WORKING_DIRECTORY" value="$PROJECT_DIR$" /> | |||||

| <option name="IS_MODULE_SDK" value="true" /> | |||||

| <option name="ADD_CONTENT_ROOTS" value="true" /> | |||||

| <option name="ADD_SOURCE_ROOTS" value="true" /> | |||||

| <EXTENSION ID="PythonCoverageRunConfigurationExtension" runner="coverage.py" /> | |||||

| <option name="SCRIPT_NAME" value="$PROJECT_DIR$/main.py" /> | |||||

| <option name="PARAMETERS" value="" /> | |||||

| <option name="SHOW_COMMAND_LINE" value="false" /> | |||||

| <option name="EMULATE_TERMINAL" value="false" /> | |||||

| <option name="MODULE_MODE" value="false" /> | |||||

| <option name="REDIRECT_INPUT" value="false" /> | |||||

| <option name="INPUT_FILE" value="" /> | |||||

| <method v="2" /> | |||||

| </configuration> | |||||

| <recent_temporary> | |||||

| <list> | |||||

| <item itemvalue="Python.app" /> | |||||

| <item itemvalue="JavaScript 调试.main.html" /> | |||||

| <item itemvalue="Python.cal (1)" /> | |||||

| <item itemvalue="Python.impactMse" /> | |||||

| <item itemvalue="Python.exploitabilityMSE" /> | |||||

| </list> | |||||

| </recent_temporary> | |||||

| </component> | |||||

| <component name="SpellCheckerSettings" RuntimeDictionaries="0" Folders="0" CustomDictionaries="0" DefaultDictionary="应用程序级" UseSingleDictionary="true" transferred="true" /> | |||||

| <component name="TaskManager"> | |||||

| <task active="true" id="Default" summary="默认任务"> | |||||

| <changelist id="7cb15b16-868b-48e5-9f8c-d95327a0b928" name="更改" comment="" /> | |||||

| <created>1745968754647</created> | |||||

| <option name="number" value="Default" /> | |||||

| <option name="presentableId" value="Default" /> | |||||

| <updated>1745968754647</updated> | |||||

| <workItem from="1745968756808" duration="1718000" /> | |||||

| <workItem from="1746000490114" duration="1219000" /> | |||||

| <workItem from="1746001738667" duration="2829000" /> | |||||

| <workItem from="1746004674142" duration="14725000" /> | |||||

| <workItem from="1746060084499" duration="29737000" /> | |||||

| <workItem from="1746112547099" duration="661000" /> | |||||

| <workItem from="1746113230998" duration="18000" /> | |||||

| <workItem from="1746113446889" duration="943000" /> | |||||

| <workItem from="1746114706813" duration="101000" /> | |||||

| <workItem from="1746126263703" duration="720000" /> | |||||

| <workItem from="1746127669568" duration="1287000" /> | |||||

| <workItem from="1746445549616" duration="1180000" /> | |||||

| <workItem from="1746448252115" duration="616000" /> | |||||

| <workItem from="1746457783724" duration="5000" /> | |||||

| <workItem from="1746460056528" duration="148000" /> | |||||

| <workItem from="1746460254314" duration="24000" /> | |||||

| <workItem from="1746631090475" duration="142000" /> | |||||

| <workItem from="1747312456214" duration="701000" /> | |||||

| </task> | |||||

| <servers /> | |||||

| </component> | |||||

| <component name="TypeScriptGeneratedFilesManager"> | |||||

| <option name="version" value="3" /> | |||||

| </component> | |||||

| <component name="com.intellij.coverage.CoverageDataManagerImpl"> | |||||

| <SUITE FILE_PATH="coverage/seeingData$exploitabilityMSE.coverage" NAME="exploitabilityMSE 覆盖结果" MODIFIED="1746109799847" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$/calculate" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$calbase.coverage" NAME="calbase 覆盖结果" MODIFIED="1746108928046" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$/calculate" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$cal__1_.coverage" NAME="cal (1) 覆盖结果" MODIFIED="1746110303519" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$/calculate" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$main.coverage" NAME="main 覆盖结果" MODIFIED="1745968761559" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$impactMse.coverage" NAME="impactMse 覆盖结果" MODIFIED="1746110275202" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$/calculate" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$accurate.coverage" NAME="accurate 覆盖结果" MODIFIED="1746020524862" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$/calculate" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$baseMse.coverage" NAME="baseMse 覆盖结果" MODIFIED="1746108872502" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$/calculate" /> | |||||

| <SUITE FILE_PATH="coverage/seeingData$app.coverage" NAME="app 覆盖结果" MODIFIED="1746631107684" SOURCE_PROVIDER="com.intellij.coverage.DefaultCoverageFileProvider" RUNNER="coverage.py" COVERAGE_BY_TEST_ENABLED="true" COVERAGE_TRACING_ENABLED="false" WORKING_DIRECTORY="$PROJECT_DIR$" /> | |||||

| </component> | |||||

| </project> | |||||

+ 91483

- 0

Models+K-Means/data/SIR_dataset_processed.json

File diff suppressed because it is too large

View File

+ 9232

- 0

Models+K-Means/data/SIR_test_set.json

File diff suppressed because it is too large

View File

+ 73115

- 0

Models+K-Means/data/SIR_train_set.json

File diff suppressed because it is too large

View File

+ 9141

- 0

Models+K-Means/data/SIR_validation_set.json

File diff suppressed because it is too large

View File

+ 46

- 0

Models+K-Means/data/label_word_ids.json

View File

| @@ -0,0 +1,46 @@ | |||||

| { | |||||

| "AV": { | |||||

| "network": 2897, | |||||

| "adjacent": 5516, | |||||

| "local": 2334, | |||||

| "physical": 3558 | |||||

| }, | |||||

| "AC": { | |||||

| "low": 2659, | |||||

| "high": 2152 | |||||

| }, | |||||

| "PR": { | |||||

| "none": 3904, | |||||

| "low": 2659, | |||||

| "high": 2152 | |||||

| }, | |||||

| "UI": { | |||||

| "none": 3904, | |||||

| "required": 3223 | |||||

| }, | |||||

| "S": { | |||||

| "unchanged": 15704, | |||||

| "changed": 2904 | |||||

| }, | |||||

| "C": { | |||||

| "none": 3904, | |||||

| "low": 2659, | |||||

| "high": 2152 | |||||

| }, | |||||

| "I": { | |||||

| "none": 3904, | |||||

| "low": 2659, | |||||

| "high": 2152 | |||||

| }, | |||||

| "A": { | |||||

| "none": 3904, | |||||

| "low": 2659, | |||||

| "high": 2152 | |||||

| }, | |||||

| "severity": { | |||||

| "low": 2659, | |||||

| "medium": 5396, | |||||

| "high": 2152, | |||||

| "critical": 4187 | |||||

| } | |||||

| } | |||||

+ 37

- 0

Models+K-Means/data/label_word_ids_CVSS2.json

View File

| @@ -0,0 +1,37 @@ | |||||

| { | |||||

| "AV": { | |||||

| "network": 2897, | |||||

| "adjacent": 5516, | |||||

| "local": 2334 | |||||

| }, | |||||

| "AC": { | |||||

| "low": 2659, | |||||

| "medium": 5396, | |||||

| "high": 2152 | |||||

| }, | |||||

| "Au": { | |||||

| "none": 3904, | |||||

| "single": 2309, | |||||

| "multiple": 3674 | |||||

| }, | |||||

| "C": { | |||||

| "none": 3904, | |||||

| "partial": 7704, | |||||

| "complete": 3143 | |||||

| }, | |||||

| "I": { | |||||

| "none": 3904, | |||||

| "partial": 7704, | |||||

| "complete": 3143 | |||||

| }, | |||||

| "A": { | |||||

| "none": 3904, | |||||

| "partial": 7704, | |||||

| "complete": 3143 | |||||

| }, | |||||

| "severity": { | |||||

| "low": 2659, | |||||

| "medium": 5396, | |||||

| "high": 2152 | |||||

| } | |||||

| } | |||||

+ 9742

- 0

Models+K-Means/dataset_more/test.csv

File diff suppressed because it is too large

View File

+ 63856

- 0

Models+K-Means/dataset_more/train.csv

File diff suppressed because it is too large

View File

+ 51

- 0

Models+K-Means/main/CVSSDataset.py

View File

| @@ -0,0 +1,51 @@ | |||||

| from pathlib import Path | |||||

| from sklearn.model_selection import train_test_split | |||||

| import torch | |||||

| import csv | |||||

| class CVSSDataset(torch.utils.data.Dataset): | |||||

| def __init__(self, encodings, labels): | |||||

| self.encodings = encodings | |||||

| self.labels = labels | |||||

| def __getitem__(self, idx): | |||||

| item = {key: torch.tensor(val[idx]) for key, val in self.encodings.items()} | |||||

| item['labels'] = torch.tensor(self.labels[idx]) | |||||

| return item | |||||

| def __len__(self): | |||||

| return len(self.labels) | |||||

| def read_cvss_txt(split_dir, list_classes): | |||||

| split_dir = Path(split_dir) | |||||

| texts = [] | |||||

| labels = [] | |||||

| for label_dir in ["LOW", "HIGH"]: | |||||

| for text_file in (split_dir/label_dir).iterdir(): | |||||

| texts.append(text_file.read_text()) | |||||

| for i in range(len(list_classes)): | |||||

| if list_classes[i] == label_dir: | |||||

| labels.append(i) | |||||

| else: | |||||

| continue | |||||

| return texts, labels | |||||

| def read_cvss_csv(file_name, num_label, list_classes): | |||||

| texts = [] | |||||

| labels = [] | |||||

| csv_file = open(file_name, 'r+',encoding='UTF-8') | |||||

| csv_reader = csv.reader(csv_file, delimiter=',', quotechar='"') | |||||

| for row in csv_reader: | |||||

| texts.append(row[0]) | |||||

| for i in range(len(list_classes)): | |||||

| if list_classes[i] == row[num_label]: | |||||

| labels.append(i) | |||||

| else: | |||||

| continue | |||||

| csv_file.close() | |||||

| return texts, labels | |||||

+ 38

- 0

Models+K-Means/main/CVSS_Calculator.py

View File

| @@ -0,0 +1,38 @@ | |||||

| import math | |||||

| from cvss import CVSS2 | |||||

| from cvss import CVSS3 | |||||

| from cvss import CVSS4 | |||||

| import re | |||||

| import pandas as pd | |||||

| import numpy as np | |||||

| data1 = pd.DataFrame(pd.read_json(r"E:\pythonProject_open\data\SIR_dataset_processed.json")) | |||||

| vecStr = data1["vectorString"] | |||||

| impactScores = data1["impactScore"] | |||||

| exploitabilityScores = data1["exploitabilityScore"] | |||||

| print("----") | |||||

| for i, j, k in zip(vecStr, impactScores, exploitabilityScores) : | |||||

| cvssVer = re.findall(':(.*?)/', i) | |||||

| impactScore = float(j) | |||||

| exploitabilityScore = float(k) | |||||

| if float(cvssVer[0]) == 2: | |||||

| cvss = CVSS2(i) | |||||

| elif 2 <= float(cvssVer[0]) < 4: | |||||

| cvss = CVSS3(i) | |||||

| else: | |||||

| cvss = CVSS4(i) | |||||

| cvss_baseScore = cvss.base_score | |||||

| print(cvss_baseScore) | |||||

| if impactScore <= 0: | |||||

| cvss_baseScore = 0 | |||||

| elif 0 < impactScore + exploitabilityScore < 10: | |||||

| cvss_baseScore = math.ceil((impactScore + exploitabilityScore) * 10) / 10 | |||||

| else: | |||||

| cvss_baseScore = 10 | |||||

| print(f"baseScore:{cvss_baseScore}, impactScore:{impactScore}, exploitabilityScore:{exploitabilityScore}") | |||||

+ 530

- 0

Models+K-Means/main/K-Means/K-Means_cluster.ipynb

File diff suppressed because it is too large

View File

+ 690

- 0

Models+K-Means/main/K-Means/KMeans+vectorString.ipynb

File diff suppressed because it is too large

View File

+ 7038

- 0

Models+K-Means/main/K-Means/PCA+KMeans_cluster.csv

File diff suppressed because it is too large

View File

+ 7038

- 0

Models+K-Means/main/K-Means/PCA+KMeans聚类.csv

File diff suppressed because it is too large

View File

BIN

Models+K-Means/main/K-Means/cluster.png

View File

+ 17227

- 0

Models+K-Means/main/K-Means/cluster.svg

File diff suppressed because it is too large

View File

+ 5966

- 0

Models+K-Means/main/K-Means/cluster1.svg

File diff suppressed because it is too large

View File

+ 999

- 0

Models+K-Means/main/K-Means/hard.ipynb

File diff suppressed because it is too large

View File

BIN

Models+K-Means/main/K-Means/heatmap.png

View File

+ 3387

- 0

Models+K-Means/main/K-Means/heatmap.svg

File diff suppressed because it is too large

View File

BIN

Models+K-Means/main/__pycache__/CVSSDataset.cpython-39.pyc

View File

BIN

Models+K-Means/main/__pycache__/lemmatization.cpython-39.pyc

View File

BIN

Models+K-Means/main/__pycache__/remove_stop_words.cpython-39.pyc

View File

BIN

Models+K-Means/main/__pycache__/stemmatization.cpython-39.pyc

View File

+ 687

- 0

Models+K-Means/main/csv_process.ipynb

View File

| @@ -0,0 +1,687 @@ | |||||

| { | |||||

| "cells": [ | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 1, | |||||

| "id": "676be61a-bd65-4510-8357-94859f596330", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "import pandas as pd\n", | |||||

| "import json" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 2, | |||||

| "id": "94f312ae-87d2-4d5e-ae75-9fc85a2a980c", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "data = pd.read_json('../data/SIR_test_set.json')" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 3, | |||||

| "id": "7d949b04-4929-4921-a818-4e8cbb57826b", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " CVE_ID Issue_Url_old \\\n", | |||||

| "0 CVE-2021-45822 https://github.com/btiteam/xbtit-3.1/issues/7 \n", | |||||

| "1 CVE-2021-45769 https://github.com/mz-automation/libiec61850/i... \n", | |||||

| "2 CVE-2021-45773 https://github.com/mz-automation/lib60870/issu... \n", | |||||

| "3 CVE-2022-25014 https://github.com/gamonoid/icehrm/issues/283 \n", | |||||

| "4 CVE-2022-25013 https://github.com/gamonoid/icehrm/issues/284 \n", | |||||

| ".. ... ... \n", | |||||

| "705 CVE-2022-32417 https://github.com/Snakinya/Vuln/issues/1 \n", | |||||

| "706 CVE-2021-34485 https://github.com/github/advisory-database/is... \n", | |||||

| "707 CVE-2021-44906 https://github.com/minimistjs/minimist/issues/11 \n", | |||||

| "708 CVE-2020-8927 https://github.com/github/advisory-database/is... \n", | |||||

| "709 CVE-2021-31402 https://github.com/cfug/dio/issues/1752 \n", | |||||

| "\n", | |||||

| " Issue_Url_new \\\n", | |||||

| "0 https://github.com/btiteam/xbtit-3.1/issues/7 \n", | |||||

| "1 https://github.com/mz-automation/libiec61850/i... \n", | |||||

| "2 https://github.com/mz-automation/lib60870/issu... \n", | |||||

| "3 https://github.com/gamonoid/icehrm/issues/283 \n", | |||||

| "4 https://github.com/gamonoid/icehrm/issues/284 \n", | |||||

| ".. ... \n", | |||||

| "705 https://github.com/snakinya/vuln/issues/1 \n", | |||||

| "706 https://github.com/github/advisory-database/is... \n", | |||||

| "707 https://github.com/minimistjs/minimist/issues/11 \n", | |||||

| "708 https://github.com/github/advisory-database/is... \n", | |||||

| "709 https://github.com/cfug/dio/issues/1752 \n", | |||||

| "\n", | |||||

| " Repo_new Issue_Created_At \\\n", | |||||

| "0 btiteam/xbtit-3.1 2021-12-22 20:25:58+00:00 \n", | |||||

| "1 mz-automation/libiec61850 2021-12-23 00:53:55+00:00 \n", | |||||

| "2 mz-automation/lib60870 2021-12-23 06:01:26+00:00 \n", | |||||

| "3 gamonoid/icehrm 2021-12-23 08:09:18+00:00 \n", | |||||

| "4 gamonoid/icehrm 2021-12-23 08:13:20+00:00 \n", | |||||

| ".. ... ... \n", | |||||

| "705 Snakinya/Vuln 2022-08-04 10:38:48+00:00 \n", | |||||

| "706 github/advisory-database 2022-10-12 20:44:32+00:00 \n", | |||||

| "707 minimistjs/minimist 2022-10-19 14:23:14+00:00 \n", | |||||

| "708 github/advisory-database 2022-10-31 20:04:11+00:00 \n", | |||||

| "709 cfug/dio 2023-03-21 16:54:52+00:00 \n", | |||||

| "\n", | |||||

| " description \\\n", | |||||

| "0 Stored & Reflected XSS affecting Xbtit NUMBERT... \n", | |||||

| "1 NULL Pointer Dereference in APITAG NULL Pointe... \n", | |||||

| "2 NULL Pointer Dereference in APITAG NULL Pointe... \n", | |||||

| "3 Reflected XSS vulnerability NUMBERTAG in icehr... \n", | |||||

| "4 Reflected XSS vulnerabilities NUMBERTAG in ice... \n", | |||||

| ".. ... \n", | |||||

| "705 pboot cms NUMBERTAG RCE. 漏洞详情: URLTAG 声明 APITA... \n", | |||||

| "706 .NET CVE backfill round NUMBERTAG Hello, Pleas... \n", | |||||

| "707 Backport of NUMBERTAG fixes to NUMBERTAG Thank... \n", | |||||

| "708 Update impacted packages for CVETAG . Hi, This... \n", | |||||

| "709 CVE Dio NUMBERTAG Google OVS Scanner. Package ... \n", | |||||

| "\n", | |||||

| " vectorString severity baseScore \\\n", | |||||

| "0 CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N MEDIUM 6.1 \n", | |||||

| "1 CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:N/A:H HIGH 7.5 \n", | |||||

| "2 CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:N/A:H HIGH 7.5 \n", | |||||

| "3 CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N MEDIUM 6.1 \n", | |||||

| "4 CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N MEDIUM 6.1 \n", | |||||

| ".. ... ... ... \n", | |||||

| "705 CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H CRITICAL 9.8 \n", | |||||

| "706 CVSS:3.1/AV:L/AC:L/PR:L/UI:N/S:U/C:H/I:N/A:N MEDIUM 5.5 \n", | |||||

| "707 CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H CRITICAL 9.8 \n", | |||||

| "708 CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:L/A:L MEDIUM 6.5 \n", | |||||

| "709 CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:N/A:N HIGH 7.5 \n", | |||||

| "\n", | |||||

| " impactScore exploitabilityScore \n", | |||||

| "0 2.7 2.8 \n", | |||||

| "1 3.6 3.9 \n", | |||||

| "2 3.6 3.9 \n", | |||||

| "3 2.7 2.8 \n", | |||||

| "4 2.7 2.8 \n", | |||||

| ".. ... ... \n", | |||||

| "705 5.9 3.9 \n", | |||||

| "706 3.6 1.8 \n", | |||||

| "707 5.9 3.9 \n", | |||||

| "708 2.5 3.9 \n", | |||||

| "709 3.6 3.9 \n", | |||||

| "\n", | |||||

| "[710 rows x 11 columns]\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "train_data_temp = pd.DataFrame()\n", | |||||

| "print(data)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 4, | |||||

| "id": "d4272d23-2c40-416a-aa83-40b09817ea0a", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "train_data_temp['description'] = data['description']" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 5, | |||||

| "id": "101f87d6-38d7-4562-a572-49ab74eec58d", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| "0 False\n", | |||||

| "1 False\n", | |||||

| "2 False\n", | |||||

| "3 False\n", | |||||

| "4 False\n", | |||||

| " ... \n", | |||||

| "705 False\n", | |||||

| "706 False\n", | |||||

| "707 False\n", | |||||

| "708 False\n", | |||||

| "709 False\n", | |||||

| "Name: description, Length: 710, dtype: bool\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "print(train_data_temp['description'].isna())" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 6, | |||||

| "id": "85b0cd35-3862-43fb-b4ab-d88c0ceae6da", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| "Empty DataFrame\n", | |||||

| "Columns: [description]\n", | |||||

| "Index: []\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "# 获取 NaN 值的行索引\n", | |||||

| "nan_rows = train_data_temp[train_data_temp['description'].isna()]\n", | |||||

| "print(nan_rows)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 7, | |||||

| "id": "8eaf202e-b96b-4f79-8b3b-89e4757add04", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "vectorString = data['vectorString']" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 8, | |||||

| "id": "49331613-93f9-4d86-9e43-384c16ff8813", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " AV AC PR UI S C I A\n", | |||||

| "0 N L N R C L L N\n", | |||||

| "1 N L N N U N N H\n", | |||||

| "2 N L N N U N N H\n", | |||||

| "3 N L N R C L L N\n", | |||||

| "4 N L N R C L L N\n", | |||||

| ".. .. .. .. .. .. .. .. ..\n", | |||||

| "705 N L N N U H H H\n", | |||||

| "706 L L L N U H N N\n", | |||||

| "707 N L N N U H H H\n", | |||||

| "708 N L N N U N L L\n", | |||||

| "709 N L N N U H N N\n", | |||||

| "\n", | |||||

| "[710 rows x 8 columns]\n" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "name": "stderr", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| "C:\\Users\\lx\\AppData\\Local\\Temp\\ipykernel_38864\\3052899741.py:14: FutureWarning: DataFrame.applymap has been deprecated. Use DataFrame.map instead.\n", | |||||

| " train_data = train_data.applymap(transform_value)\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "#转换数据\n", | |||||

| "def transform_value(val):\n", | |||||

| " return val.split(':')[1]\n", | |||||

| " \n", | |||||

| "columns = ['AV', 'AC', 'PR', 'UI', 'S', 'C', 'I', 'A']\n", | |||||

| "\n", | |||||

| "temp = []\n", | |||||

| "\n", | |||||

| "for i in range(vectorString.size):\n", | |||||

| " part = vectorString[i].split('/')\n", | |||||

| " list_items = part[1::]\n", | |||||

| " temp.append(list_items)\n", | |||||

| "train_data = pd.DataFrame(temp, columns=columns)\n", | |||||

| "train_data = train_data.applymap(transform_value)\n", | |||||

| "print(train_data)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 9, | |||||

| "id": "79a6f3ee-0517-4a4f-b26a-6f2dabf9d3b0", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "def calculate_cvss_score(params):\n", | |||||

| " # 字典映射分值\n", | |||||

| " AV = {'N': 0.85, 'A': 0.62, 'L': 0.55, 'P': 0.2}\n", | |||||

| " AC = {'L': 0.77, 'H': 0.44}\n", | |||||

| " PR = {'N': 0.85, 'L': 0.68, 'H': 0.5}\n", | |||||

| " UI = {'N': 0.85, 'R': 0.62}\n", | |||||

| " S = {'U': 1, 'C': 1.08}\n", | |||||

| " C = {'N': 0, 'L': 0.22, 'H': 0.56}\n", | |||||

| " I = {'N': 0, 'L': 0.22, 'H': 0.56}\n", | |||||

| " A = {'N': 0, 'L': 0.22, 'H': 0.56}\n", | |||||

| "\n", | |||||

| " # 获取参数值\n", | |||||

| " av = AV[params['AV']]\n", | |||||

| " ac = AC[params['AC']]\n", | |||||

| " pr = PR[params['PR']]\n", | |||||

| " ui = UI[params['UI']]\n", | |||||

| " s = S[params['S']]\n", | |||||

| " c = C[params['C']]\n", | |||||

| " i = I[params['I']]\n", | |||||

| " a = A[params['A']]\n", | |||||

| "\n", | |||||

| " # 计算临时分数\n", | |||||

| " impact = 1 - (1 - c) * (1 - i) * (1 - a)\n", | |||||

| " exploitability = 8.22 * av * ac * pr * ui\n", | |||||

| "\n", | |||||

| " if impact == 0:\n", | |||||

| " base_score = 0\n", | |||||

| " else:\n", | |||||

| " if s == 1: # 未改变\n", | |||||

| " base_score = round(min(1.176 * (exploitability + impact), 10), 1)\n", | |||||

| " else: # 改变\n", | |||||

| " base_score = round(min(1.08 * (exploitability + impact), 10), 1)\n", | |||||

| "\n", | |||||

| " return base_score" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 10, | |||||

| "id": "622cf1dd-082c-4d2a-a880-34d22e96d053", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " AV AC PR UI S C I A score\n", | |||||

| "0 N L N R C L L N 3.5\n", | |||||

| "1 N L N N U N N H 5.2\n", | |||||

| "2 N L N N U N N H 5.2\n", | |||||

| "3 N L N R C L L N 3.5\n", | |||||

| "4 N L N R C L L N 3.5\n", | |||||

| ".. .. .. .. .. .. .. .. .. ...\n", | |||||

| "705 N L N N U H H H 5.6\n", | |||||

| "706 L L L N U H N N 3.0\n", | |||||

| "707 N L N N U H H H 5.6\n", | |||||

| "708 N L N N U N L L 5.0\n", | |||||

| "709 N L N N U H N N 5.2\n", | |||||

| "\n", | |||||

| "[710 rows x 9 columns]\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "# 为每一行创建字典\n", | |||||

| "train_dicts = train_data.apply(lambda row: {col: row[col][0] for col in train_data.columns}, axis=1)\n", | |||||

| "train_score = train_dicts.apply(calculate_cvss_score)\n", | |||||

| "train_data['score'] = train_score\n", | |||||

| "print(train_data)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 11, | |||||

| "id": "f767e3c9-634b-4c0d-9145-eb4c013e1a6e", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "dict = {\n", | |||||

| " 'AV': {\n", | |||||

| " 'N': 'NETWORK',\n", | |||||

| " 'A': 'ADJACENT',\n", | |||||

| " 'L': 'LOCAL',\n", | |||||

| " 'P': 'PHYSICAL'\n", | |||||

| " },\n", | |||||

| " 'AC': {\n", | |||||

| " 'L': 'LOW',\n", | |||||

| " 'H': 'HIGH'\n", | |||||

| " }, \n", | |||||

| " 'PR': {\n", | |||||

| " 'N': 'NONE',\n", | |||||

| " 'L': 'LOW',\n", | |||||

| " 'H': 'HIGH'\n", | |||||

| " }, \n", | |||||

| " 'UI': {\n", | |||||

| " 'N': 'NONE',\n", | |||||

| " 'R': 'REQUIRED'\n", | |||||

| " },\n", | |||||

| " 'S': {\n", | |||||

| " 'U': 'UNCHANGED',\n", | |||||

| " 'C': 'CHANGED'\n", | |||||

| " },\n", | |||||

| " 'C': {\n", | |||||

| " 'N': 'NONE',\n", | |||||

| " 'L': 'LOW',\n", | |||||

| " 'H': 'HIGH'\n", | |||||

| " },\n", | |||||

| " 'I': {\n", | |||||

| " 'N': 'NONE',\n", | |||||

| " 'L': 'LOW',\n", | |||||

| " 'H': 'HIGH'\n", | |||||

| " },\n", | |||||

| " 'A': {\n", | |||||

| " 'N': 'NONE', \n", | |||||

| " 'L': 'LOW',\n", | |||||

| " 'H': 'HIGH'\n", | |||||

| " }\n", | |||||

| "}" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 12, | |||||

| "id": "d42106a3-9eb5-4580-9143-d7ae061b6d4c", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "data": { | |||||

| "text/plain": " AV AC PR UI S C I A score\n0 NETWORK LOW NONE REQUIRED CHANGED LOW LOW NONE 3.5\n1 NETWORK LOW NONE NONE UNCHANGED NONE NONE HIGH 5.2\n2 NETWORK LOW NONE NONE UNCHANGED NONE NONE HIGH 5.2\n3 NETWORK LOW NONE REQUIRED CHANGED LOW LOW NONE 3.5\n4 NETWORK LOW NONE REQUIRED CHANGED LOW LOW NONE 3.5\n.. ... ... ... ... ... ... ... ... ...\n705 NETWORK LOW NONE NONE UNCHANGED HIGH HIGH HIGH 5.6\n706 LOCAL LOW LOW NONE UNCHANGED HIGH NONE NONE 3.0\n707 NETWORK LOW NONE NONE UNCHANGED HIGH HIGH HIGH 5.6\n708 NETWORK LOW NONE NONE UNCHANGED NONE LOW LOW 5.0\n709 NETWORK LOW NONE NONE UNCHANGED HIGH NONE NONE 5.2\n\n[710 rows x 9 columns]", | |||||

| "text/html": "<div>\n<style scoped>\n .dataframe tbody tr th:only-of-type {\n vertical-align: middle;\n }\n\n .dataframe tbody tr th {\n vertical-align: top;\n }\n\n .dataframe thead th {\n text-align: right;\n }\n</style>\n<table border=\"1\" class=\"dataframe\">\n <thead>\n <tr style=\"text-align: right;\">\n <th></th>\n <th>AV</th>\n <th>AC</th>\n <th>PR</th>\n <th>UI</th>\n <th>S</th>\n <th>C</th>\n <th>I</th>\n <th>A</th>\n <th>score</th>\n </tr>\n </thead>\n <tbody>\n <tr>\n <th>0</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>REQUIRED</td>\n <td>CHANGED</td>\n <td>LOW</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>3.5</td>\n </tr>\n <tr>\n <th>1</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>HIGH</td>\n <td>5.2</td>\n </tr>\n <tr>\n <th>2</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>HIGH</td>\n <td>5.2</td>\n </tr>\n <tr>\n <th>3</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>REQUIRED</td>\n <td>CHANGED</td>\n <td>LOW</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>3.5</td>\n </tr>\n <tr>\n <th>4</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>REQUIRED</td>\n <td>CHANGED</td>\n <td>LOW</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>3.5</td>\n </tr>\n <tr>\n <th>...</th>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n <td>...</td>\n </tr>\n <tr>\n <th>705</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>HIGH</td>\n <td>HIGH</td>\n <td>HIGH</td>\n <td>5.6</td>\n </tr>\n <tr>\n <th>706</th>\n <td>LOCAL</td>\n <td>LOW</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>HIGH</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>3.0</td>\n </tr>\n <tr>\n <th>707</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>HIGH</td>\n <td>HIGH</td>\n <td>HIGH</td>\n <td>5.6</td>\n </tr>\n <tr>\n <th>708</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>NONE</td>\n <td>LOW</td>\n <td>LOW</td>\n <td>5.0</td>\n </tr>\n <tr>\n <th>709</th>\n <td>NETWORK</td>\n <td>LOW</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>UNCHANGED</td>\n <td>HIGH</td>\n <td>NONE</td>\n <td>NONE</td>\n <td>5.2</td>\n </tr>\n </tbody>\n</table>\n<p>710 rows × 9 columns</p>\n</div>" | |||||

| }, | |||||

| "execution_count": 12, | |||||

| "metadata": {}, | |||||

| "output_type": "execute_result" | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "# 替换 DataFrame 中的值\n", | |||||

| "train_data.replace(dict, inplace=True)\n", | |||||

| "train_data" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 13, | |||||

| "id": "f07b2d92-8271-46c8-ab90-a19570dd2566", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "train_data.insert(0, 'description', train_data_temp)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 14, | |||||

| "id": "5ca97546-e120-4e80-b7dc-67a00c1bbf45", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " description AV AC PR \\\n", | |||||

| "0 Stored & Reflected XSS affecting Xbtit NUMBERT... NETWORK LOW NONE \n", | |||||

| "1 NULL Pointer Dereference in APITAG NULL Pointe... NETWORK LOW NONE \n", | |||||

| "2 NULL Pointer Dereference in APITAG NULL Pointe... NETWORK LOW NONE \n", | |||||

| "3 Reflected XSS vulnerability NUMBERTAG in icehr... NETWORK LOW NONE \n", | |||||

| "4 Reflected XSS vulnerabilities NUMBERTAG in ice... NETWORK LOW NONE \n", | |||||

| ".. ... ... ... ... \n", | |||||

| "705 pboot cms NUMBERTAG RCE. 漏洞详情: URLTAG 声明 APITA... NETWORK LOW NONE \n", | |||||

| "706 .NET CVE backfill round NUMBERTAG Hello, Pleas... LOCAL LOW LOW \n", | |||||

| "707 Backport of NUMBERTAG fixes to NUMBERTAG Thank... NETWORK LOW NONE \n", | |||||

| "708 Update impacted packages for CVETAG . Hi, This... NETWORK LOW NONE \n", | |||||

| "709 CVE Dio NUMBERTAG Google OVS Scanner. Package ... NETWORK LOW NONE \n", | |||||

| "\n", | |||||

| " UI S C I A score \n", | |||||

| "0 REQUIRED CHANGED LOW LOW NONE 3.5 \n", | |||||

| "1 NONE UNCHANGED NONE NONE HIGH 5.2 \n", | |||||

| "2 NONE UNCHANGED NONE NONE HIGH 5.2 \n", | |||||

| "3 REQUIRED CHANGED LOW LOW NONE 3.5 \n", | |||||

| "4 REQUIRED CHANGED LOW LOW NONE 3.5 \n", | |||||

| ".. ... ... ... ... ... ... \n", | |||||

| "705 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "706 NONE UNCHANGED HIGH NONE NONE 3.0 \n", | |||||

| "707 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "708 NONE UNCHANGED NONE LOW LOW 5.0 \n", | |||||

| "709 NONE UNCHANGED HIGH NONE NONE 5.2 \n", | |||||

| "\n", | |||||

| "[710 rows x 10 columns]\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "print(train_data)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 15, | |||||

| "id": "3e5e14c5-8f88-43d3-945c-1505e11a2490", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " description AV AC PR \\\n", | |||||

| "0 Stored & Reflected XSS affecting Xbtit NUMBERT... NETWORK LOW NONE \n", | |||||

| "10 illegal memcpy during njs_vmcode_typeof in PAT... NETWORK LOW NONE \n", | |||||

| "11 Heap UAF in njs_await_fulfilled. Env CODETAG P... NETWORK LOW NONE \n", | |||||

| "48 Add nonce to the logout link. The logout link ... NETWORK LOW NONE \n", | |||||

| "50 Divide By Zero in H5T__complete_copy () at PAT... NETWORK LOW NONE \n", | |||||

| ".. ... ... ... ... \n", | |||||

| "705 pboot cms NUMBERTAG RCE. 漏洞详情: URLTAG 声明 APITA... NETWORK LOW NONE \n", | |||||

| "706 .NET CVE backfill round NUMBERTAG Hello, Pleas... LOCAL LOW LOW \n", | |||||

| "707 Backport of NUMBERTAG fixes to NUMBERTAG Thank... NETWORK LOW NONE \n", | |||||

| "708 Update impacted packages for CVETAG . Hi, This... NETWORK LOW NONE \n", | |||||

| "709 CVE Dio NUMBERTAG Google OVS Scanner. Package ... NETWORK LOW NONE \n", | |||||

| "\n", | |||||

| " UI S C I A score prefix \n", | |||||

| "0 REQUIRED CHANGED LOW LOW NONE 3.5 Stored & R \n", | |||||

| "10 NONE UNCHANGED HIGH HIGH HIGH 5.6 illegal me \n", | |||||

| "11 NONE UNCHANGED HIGH HIGH HIGH 5.6 Heap UAF i \n", | |||||

| "48 REQUIRED CHANGED NONE HIGH NONE 3.7 Add nonce \n", | |||||

| "50 REQUIRED UNCHANGED NONE NONE HIGH 4.0 Divide By \n", | |||||

| ".. ... ... ... ... ... ... ... \n", | |||||

| "705 NONE UNCHANGED HIGH HIGH HIGH 5.6 pboot cms \n", | |||||

| "706 NONE UNCHANGED HIGH NONE NONE 3.0 .NET CVE b \n", | |||||

| "707 NONE UNCHANGED HIGH HIGH HIGH 5.6 Backport o \n", | |||||

| "708 NONE UNCHANGED NONE LOW LOW 5.0 Update imp \n", | |||||

| "709 NONE UNCHANGED HIGH NONE NONE 5.2 CVE Dio NU \n", | |||||

| "\n", | |||||

| "[264 rows x 11 columns]\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "# 提取前20个字符\n", | |||||

| "train_data['prefix'] = train_data['description'].str[:10]\n", | |||||

| "\n", | |||||

| "# 计算每个前20个字符的出现次数\n", | |||||

| "prefix_counts = train_data['prefix'].value_counts()\n", | |||||

| "\n", | |||||

| "# 只保留那些前20个字符出现次数为1的描述\n", | |||||

| "unique_prefixes = prefix_counts[prefix_counts == 1].index\n", | |||||

| "unique_descriptions = train_data[train_data['prefix'].isin(unique_prefixes)]\n", | |||||

| "print(unique_descriptions)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 16, | |||||

| "id": "d660a495-fdab-41fc-932c-6e593babc88e", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " description AV AC PR \\\n", | |||||

| "0 Stored & Reflected XSS affecting Xbtit NUMBERT... NETWORK LOW NONE \n", | |||||

| "10 illegal memcpy during njs_vmcode_typeof in PAT... NETWORK LOW NONE \n", | |||||

| "11 Heap UAF in njs_await_fulfilled. Env CODETAG P... NETWORK LOW NONE \n", | |||||

| "48 Add nonce to the logout link. The logout link ... NETWORK LOW NONE \n", | |||||

| "50 Divide By Zero in H5T__complete_copy () at PAT... NETWORK LOW NONE \n", | |||||

| ".. ... ... ... ... \n", | |||||

| "698 A NUMBERTAG specific heap buffer overflow with... NETWORK LOW NONE \n", | |||||

| "699 Mitigation for CVETAG . Hi there. It appears a... NETWORK LOW NONE \n", | |||||

| "703 Contact APITAG Product Security Team and ask t... NETWORK LOW NONE \n", | |||||

| "707 Backport of NUMBERTAG fixes to NUMBERTAG Thank... NETWORK LOW NONE \n", | |||||

| "709 CVE Dio NUMBERTAG Google OVS Scanner. Package ... NETWORK LOW NONE \n", | |||||

| "\n", | |||||

| " UI S C I A score prefix \n", | |||||

| "0 REQUIRED CHANGED LOW LOW NONE 3.5 Stored & R \n", | |||||

| "10 NONE UNCHANGED HIGH HIGH HIGH 5.6 illegal me \n", | |||||

| "11 NONE UNCHANGED HIGH HIGH HIGH 5.6 Heap UAF i \n", | |||||

| "48 REQUIRED CHANGED NONE HIGH NONE 3.7 Add nonce \n", | |||||

| "50 REQUIRED UNCHANGED NONE NONE HIGH 4.0 Divide By \n", | |||||

| ".. ... ... ... ... ... ... ... \n", | |||||

| "698 NONE UNCHANGED HIGH HIGH HIGH 5.6 A NUMBERTA \n", | |||||

| "699 NONE UNCHANGED NONE NONE HIGH 5.2 Mitigation \n", | |||||

| "703 NONE UNCHANGED LOW LOW LOW 5.2 Contact AP \n", | |||||

| "707 NONE UNCHANGED HIGH HIGH HIGH 5.6 Backport o \n", | |||||

| "709 NONE UNCHANGED HIGH NONE NONE 5.2 CVE Dio NU \n", | |||||

| "\n", | |||||

| "[197 rows x 11 columns]\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "# 删除描述长度大于1000的行\n", | |||||

| "temp = pd.DataFrame()\n", | |||||

| "filtered_train_data = pd.DataFrame()\n", | |||||

| "temp = unique_descriptions[unique_descriptions['description'].str.len() <= 1000]\n", | |||||

| "filtered_train_data = temp[temp['description'].str.len() > 100]\n", | |||||

| "print(filtered_train_data)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 17, | |||||

| "id": "eaa6e29e-7fc1-45b9-809b-a4d43686362c", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stderr", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| "C:\\Users\\lx\\AppData\\Local\\Temp\\ipykernel_38864\\3885197511.py:1: SettingWithCopyWarning: \n", | |||||

| "A value is trying to be set on a copy of a slice from a DataFrame\n", | |||||

| "\n", | |||||

| "See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n", | |||||

| " filtered_train_data.sort_values(by='prefix', inplace=True)\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "filtered_train_data.sort_values(by='prefix', inplace=True)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 18, | |||||

| "id": "db7adb10-83a9-438f-9681-ac02293cba3e", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " description AV AC PR \\\n", | |||||

| "698 A NUMBERTAG specific heap buffer overflow with... NETWORK LOW NONE \n", | |||||

| "488 A Remote Code Execution (RCE) vulnerability ex... NETWORK LOW HIGH \n", | |||||

| "51 A heap use after free in in H5AC_unpin_entry. ... NETWORK LOW NONE \n", | |||||

| "408 A package should never try to do unrelated thi... NETWORK LOW NONE \n", | |||||

| "236 A security vulnerability which will lead to co... NETWORK LOW NONE \n", | |||||

| ".. ... ... ... ... \n", | |||||

| "480 一个后台存储型xss漏洞. When adding movie names, malicio... NETWORK LOW LOW \n", | |||||

| "639 关于 CVETAG 漏洞,不要在发 issues 了!!!. APITAG 如果你从前端传递... NETWORK LOW NONE \n", | |||||

| "606 后台服务器组中存在XSS漏洞. 进入后台,点击视频 >服务器组 >添加, 在名称框插入pay... NETWORK LOW LOW \n", | |||||

| "509 固定的cookie NUMBERTAG APITAG FILETAG NUMBERTAG H... NETWORK LOW NONE \n", | |||||

| "52 默认的 APITAG 为什么选择 APITAG 呢?. 版本情况 JDK版本: corret... NETWORK LOW NONE \n", | |||||

| "\n", | |||||

| " UI S C I A score \n", | |||||

| "698 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "488 NONE UNCHANGED HIGH HIGH HIGH 3.8 \n", | |||||

| "51 REQUIRED UNCHANGED HIGH HIGH HIGH 4.4 \n", | |||||

| "408 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "236 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| ".. ... ... ... ... ... ... \n", | |||||

| "480 REQUIRED CHANGED LOW LOW NONE 2.9 \n", | |||||

| "639 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "606 REQUIRED CHANGED LOW LOW NONE 2.9 \n", | |||||

| "509 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "52 NONE UNCHANGED HIGH HIGH HIGH 5.6 \n", | |||||

| "\n", | |||||

| "[197 rows x 10 columns]\n" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "name": "stderr", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| "C:\\Users\\lx\\AppData\\Local\\Temp\\ipykernel_38864\\3846312463.py:1: SettingWithCopyWarning: \n", | |||||

| "A value is trying to be set on a copy of a slice from a DataFrame\n", | |||||

| "\n", | |||||

| "See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy\n", | |||||

| " filtered_train_data.drop('prefix', axis=1, inplace=True)\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "filtered_train_data.drop('prefix', axis=1, inplace=True)\n", | |||||

| "print(filtered_train_data)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 19, | |||||

| "id": "9cc98bb5-1ae4-4024-b9cc-d3db88996221", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "data": { | |||||

| "text/plain": "2.0" | |||||

| }, | |||||

| "execution_count": 19, | |||||

| "metadata": {}, | |||||

| "output_type": "execute_result" | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "filtered_train_data['score'].min()" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 20, | |||||

| "id": "0c2e00f4-cf7b-4b15-8901-537abb4524e5", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "filtered_train_data.to_csv(r\"../dataset/filtered_test_dataset.csv\",header=None,index=None)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 20, | |||||

| "id": "080b3051-8eb8-4c4f-81ad-d3a215c2f693", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [] | |||||

| } | |||||

| ], | |||||

| "metadata": { | |||||

| "kernelspec": { | |||||

| "display_name": "Python 3 (ipykernel)", | |||||

| "language": "python", | |||||

| "name": "python3" | |||||

| }, | |||||

| "language_info": { | |||||

| "codemirror_mode": { | |||||

| "name": "ipython", | |||||

| "version": 3 | |||||

| }, | |||||

| "file_extension": ".py", | |||||

| "mimetype": "text/x-python", | |||||

| "name": "python", | |||||

| "nbconvert_exporter": "python", | |||||

| "pygments_lexer": "ipython3", | |||||

| "version": "3.11.4" | |||||

| } | |||||

| }, | |||||

| "nbformat": 4, | |||||

| "nbformat_minor": 5 | |||||

| } | |||||

+ 533

- 0

Models+K-Means/main/decisionTree/test_decisionTree.ipynb

View File

| @@ -0,0 +1,533 @@ | |||||

| { | |||||

| "cells": [ | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 8, | |||||

| "id": "64ff4cb2-6a11-4558-9b58-02d23d391b34", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "import pandas as pd\n", | |||||

| "import json\n", | |||||

| "from sklearn import tree\n", | |||||

| "from sklearn.model_selection import train_test_split as tsplit \n", | |||||

| "from sklearn.metrics import classification_report\n", | |||||

| "from sklearn.metrics import accuracy_score, classification_report, confusion_matrix\n", | |||||

| "from sklearn.preprocessing import OneHotEncoder" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 9, | |||||

| "id": "4afcae4b-305f-4ce6-af54-08edba088e0b", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "def transform_value(val):\n", | |||||

| " return val.split(':')[1]" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 10, | |||||

| "id": "1b1287ad-40c8-4059-ad05-097bad2feac7", | |||||

| "metadata": {}, | |||||

| "outputs": [], | |||||

| "source": [ | |||||

| "def extract_data(s):\n", | |||||

| " data_temp = pd.read_json(s)\n", | |||||

| " columns = ['AV', 'AC', 'PR', 'UI', 'S', 'C', 'I', 'A']\n", | |||||

| " vectorString = data_temp['vectorString']\n", | |||||

| " temp = []\n", | |||||

| " for i in range(vectorString.size):\n", | |||||

| " part = vectorString[i].split('/')\n", | |||||

| " list_items = part[1::]\n", | |||||

| " temp.append(list_items)\n", | |||||

| " data = pd.DataFrame(temp, columns=columns)\n", | |||||

| " data = data.applymap(transform_value)\n", | |||||

| " data['severity'] = data_temp['severity']\n", | |||||

| " return data" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 11, | |||||

| "id": "6962b88e-2523-4bde-8fa6-df96bfbc5221", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " AV AC PR UI S C I A severity\n", | |||||

| "0 N L N R C L L N MEDIUM\n", | |||||

| "1 N L N N U N N H HIGH\n", | |||||

| "2 N L N N U N N H HIGH\n", | |||||

| "3 N L N R C L L N MEDIUM\n", | |||||

| "4 N L N R C L L N MEDIUM\n", | |||||

| ".. .. .. .. .. .. .. .. .. ...\n", | |||||

| "705 N L N N U H H H CRITICAL\n", | |||||

| "706 L L L N U H N N MEDIUM\n", | |||||

| "707 N L N N U H H H CRITICAL\n", | |||||

| "708 N L N N U N L L MEDIUM\n", | |||||

| "709 N L N N U H N N HIGH\n", | |||||

| "\n", | |||||

| "[710 rows x 9 columns]\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "data_train = extract_data('SIR_train_set.json')\n", | |||||

| "data_test = extract_data('SIR_test_set.json')\n", | |||||

| "data_validation = extract_data('SIR_validation_set.json')\n", | |||||

| "data_train\n", | |||||

| "print(data_test)" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 12, | |||||

| "id": "49ccfdf6-99f0-4c5e-9772-03e500e6b6d6", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "data": { | |||||

| "text/html": [ | |||||

| "<div>\n", | |||||

| "<style scoped>\n", | |||||

| " .dataframe tbody tr th:only-of-type {\n", | |||||

| " vertical-align: middle;\n", | |||||

| " }\n", | |||||

| "\n", | |||||

| " .dataframe tbody tr th {\n", | |||||

| " vertical-align: top;\n", | |||||

| " }\n", | |||||

| "\n", | |||||

| " .dataframe thead th {\n", | |||||

| " text-align: right;\n", | |||||

| " }\n", | |||||

| "</style>\n", | |||||

| "<table border=\"1\" class=\"dataframe\">\n", | |||||

| " <thead>\n", | |||||

| " <tr style=\"text-align: right;\">\n", | |||||

| " <th></th>\n", | |||||

| " <th>AV</th>\n", | |||||

| " <th>AC</th>\n", | |||||

| " <th>PR</th>\n", | |||||

| " <th>UI</th>\n", | |||||

| " <th>S</th>\n", | |||||

| " <th>C</th>\n", | |||||

| " <th>I</th>\n", | |||||

| " <th>A</th>\n", | |||||

| " </tr>\n", | |||||

| " </thead>\n", | |||||

| " <tbody>\n", | |||||

| " <tr>\n", | |||||

| " <th>0</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>1</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>2</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>3</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>4</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>R</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>...</th>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " <td>...</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>5619</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>5620</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>R</td>\n", | |||||

| " <td>C</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>5621</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>R</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>5622</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>R</td>\n", | |||||

| " <td>U</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>H</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " </tr>\n", | |||||

| " <tr>\n", | |||||

| " <th>5623</th>\n", | |||||

| " <td>N</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>R</td>\n", | |||||

| " <td>C</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>L</td>\n", | |||||

| " <td>N</td>\n", | |||||

| " </tr>\n", | |||||

| " </tbody>\n", | |||||

| "</table>\n", | |||||

| "<p>5624 rows × 8 columns</p>\n", | |||||

| "</div>" | |||||

| ], | |||||

| "text/plain": [ | |||||

| " AV AC PR UI S C I A\n", | |||||

| "0 N L N N U H N N\n", | |||||

| "1 N L N N U H H H\n", | |||||

| "2 N L N N U H N N\n", | |||||

| "3 N H N N U H H H\n", | |||||

| "4 N L N R U H H H\n", | |||||

| "... .. .. .. .. .. .. .. ..\n", | |||||

| "5619 N L N N U N N H\n", | |||||

| "5620 N L N R C L L N\n", | |||||

| "5621 N L N R U N H N\n", | |||||

| "5622 N L N R U N H N\n", | |||||

| "5623 N L L R C L L N\n", | |||||

| "\n", | |||||

| "[5624 rows x 8 columns]" | |||||

| ] | |||||

| }, | |||||

| "execution_count": 12, | |||||

| "metadata": {}, | |||||

| "output_type": "execute_result" | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "lw = data_train[['AV', 'AC', 'PR', 'UI', 'S', 'C', 'I', 'A']]\n", | |||||

| "lw" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 13, | |||||

| "id": "eef35137-c9f8-49cb-8232-506d564f1fb4", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " AV_A AV_L AV_N AV_P AC_H AC_L PR_H PR_L PR_N UI_N ... S_U \\\n", | |||||

| "0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "1 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "2 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "3 0.0 0.0 1.0 0.0 1.0 0.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "4 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 ... 1.0 \n", | |||||

| "... ... ... ... ... ... ... ... ... ... ... ... ... \n", | |||||

| "5619 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "5620 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 ... 0.0 \n", | |||||

| "5621 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 ... 1.0 \n", | |||||

| "5622 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 ... 1.0 \n", | |||||

| "5623 0.0 0.0 1.0 0.0 0.0 1.0 0.0 1.0 0.0 0.0 ... 0.0 \n", | |||||

| "\n", | |||||

| " C_H C_L C_N I_H I_L I_N A_H A_L A_N \n", | |||||

| "0 1.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 1.0 \n", | |||||

| "1 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 \n", | |||||

| "2 1.0 0.0 0.0 0.0 0.0 1.0 0.0 0.0 1.0 \n", | |||||

| "3 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 \n", | |||||

| "4 1.0 0.0 0.0 1.0 0.0 0.0 1.0 0.0 0.0 \n", | |||||

| "... ... ... ... ... ... ... ... ... ... \n", | |||||

| "5619 0.0 0.0 1.0 0.0 0.0 1.0 1.0 0.0 0.0 \n", | |||||

| "5620 0.0 1.0 0.0 0.0 1.0 0.0 0.0 0.0 1.0 \n", | |||||

| "5621 0.0 0.0 1.0 1.0 0.0 0.0 0.0 0.0 1.0 \n", | |||||

| "5622 0.0 0.0 1.0 1.0 0.0 0.0 0.0 0.0 1.0 \n", | |||||

| "5623 0.0 1.0 0.0 0.0 1.0 0.0 0.0 0.0 1.0 \n", | |||||

| "\n", | |||||

| "[5624 rows x 22 columns]\n" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "name": "stderr", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| "C:\\Users\\lw\\AppData\\Local\\Programs\\Python\\Python311\\Lib\\site-packages\\sklearn\\preprocessing\\_encoders.py:972: FutureWarning: `sparse` was renamed to `sparse_output` in version 1.2 and will be removed in 1.4. `sparse_output` is ignored unless you leave `sparse` to its default value.\n", | |||||

| " warnings.warn(\n" | |||||

| ] | |||||

| } | |||||

| ], | |||||

| "source": [ | |||||

| "def encode(data):\n", | |||||

| " # 初始化 OneHotEncoder\n", | |||||

| " encoder = OneHotEncoder(sparse=False)\n", | |||||

| "\n", | |||||

| " # 转换字符数据为数值\n", | |||||

| " encoded_features = encoder.fit_transform(data[['AV', 'AC', 'PR', 'UI', 'S', 'C', 'I', 'A']])\n", | |||||

| " encoded_data = pd.DataFrame(encoded_features, columns=encoder.get_feature_names_out(['AV', 'AC', 'PR', 'UI', 'S', 'C', 'I', 'A']))\n", | |||||

| " return encoded_data\n", | |||||

| "print(encode(lw))\n" | |||||

| ] | |||||

| }, | |||||

| { | |||||

| "cell_type": "code", | |||||

| "execution_count": 16, | |||||

| "id": "25bbd901-d4aa-44cb-8f1f-2720c553bfad", | |||||

| "metadata": {}, | |||||

| "outputs": [ | |||||

| { | |||||

| "name": "stdout", | |||||

| "output_type": "stream", | |||||

| "text": [ | |||||

| " AV_A AV_L AV_N AV_P AC_H AC_L PR_H PR_L PR_N UI_N ... S_U \\\n", | |||||

| "0 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 0.0 ... 0.0 \n", | |||||

| "1 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "2 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "3 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 0.0 ... 0.0 \n", | |||||

| "4 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 0.0 ... 0.0 \n", | |||||

| ".. ... ... ... ... ... ... ... ... ... ... ... ... \n", | |||||

| "705 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "706 0 1.0 0.0 0 0.0 1.0 0.0 1.0 0.0 1.0 ... 1.0 \n", | |||||

| "707 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||

| "708 0 0.0 1.0 0 0.0 1.0 0.0 0.0 1.0 1.0 ... 1.0 \n", | |||||